AI Search is a continually evolving space. LLMs are continually improving with new versions being deployed regularly, and AI Overviews are already in place in more than 200 countries and more than 40 languages. Google’s AI Mode has officially launched in the US, promising to expand what AI Overviews can do with more advanced reasoning, thinking, and multimodal capabilities to help with the toughest questions. The use of vector models is enabling semantic search to understand what is being asked, not just how it’s phrased (read our research on Semantic Relevance).

With all of this in mind, our AI research and development team is examining how AI Search is evolving and how we can help Lumar users understand how their site and content perform and optimize them for the best results, broadening from traditional Search Engine Optimization (SEO) to Generative Engine Optimization (GEO).

The good news is that GEO also relies on many of the foundational parts of SEO, like crawling and indexing. As John Mueller said at Search Central Live NYC in March 2025:

“All of the work that you all have been putting in to make it easier for search engines to crawl and index your content, all of that will remain relevant.”

Lumar has several reports that will help you with GEO, which we’ve outlined below (along with a few that are coming soon). And as I said above, our team is looking at additional reporting and analysis (including a dedicated GEO section) that can help, so watch this space!

View our recent webinar on technical SEO in the age of AI search

Crawlability Reports

These Lumar reports identify whether AI bots are blocked from crawling your pages. You can use these reports to verify that valuable content is not blocked to AI:

- ChatGPT Blocked. Pages with a 200 response that are blocked in robots.txt for the GPTBot or ChatGPT-User user-agent tokens. This prevents ChatGPT from training on or referencing your content in responses.

- Google AI Blocked. Pages with a 200 response that are blocked in robots.txt for the Google-Extended user-agent token. This blocks Google’s AI systems (Bard/SGE) from accessing content for generative responses.

- Google SGE Blocked. Pages with a 200 response that are specifically blocked in robots.txt to prevent Google Search Generative Experience from using your content.

- Bing AI Blocked. Pages with a 200 response that are blocked in robots.txt for the Bingbot or MSNBot user-agent tokens. This prevents Bing AI/Copilot from accessing and citing your content.

- Common Crawl Blocked. Pages with a 200 response where the URL is blocked in robots.txt for Common Crawl’s CCBot user-agent token. Common Crawl data feeds many AI training databases, so blocking limits AI model training exposure.

- AI Bot Blocked (coming soon). Pages that are blocked to AI bots so they cannot access content.

- Perplexity Blocked (coming soon). Pages blocked to Perplexity AI search engine, preventing content inclusion in AI responses.

- Pages with Meta Nosnippet (coming soon). These pages prevent AI systems from using page content in generated snippets and responses.

- Pages with HTML data-nosnippet (coming soon). Pages with an HTML-level directive that blocks specific content sections from AI snippet generation.

- Pages with Header Nosnippet (coming soon). Pages with an HTTP response header directive (X-Robots-Tag) that prevents AI systems from creating snippets from page content.
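To make the robots.txt checks above more concrete, here is a minimal sketch of how you might test a single URL against several AI user-agent tokens with Python's standard `urllib.robotparser`. The `blocked_tokens` helper, the token list, and the example robots.txt are our own illustrations, not Lumar's implementation. Note that Python's parser applies the first matching rule, which can differ from the longest-match behavior some crawlers use, so treat the result as an approximation.

```python
from urllib.robotparser import RobotFileParser

# User-agent tokens mentioned in this article (illustrative list, not exhaustive).
AI_TOKENS = ["GPTBot", "ChatGPT-User", "Google-Extended", "CCBot", "PerplexityBot"]

def blocked_tokens(robots_txt: str, url: str, tokens=AI_TOKENS) -> list[str]:
    """Return the tokens that this robots.txt disallows for `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [token for token in tokens if not parser.can_fetch(token, url)]

# Hypothetical robots.txt, used only for illustration.
EXAMPLE_ROBOTS = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /private/

User-agent: *
Allow: /
"""

print(blocked_tokens(EXAMPLE_ROBOTS, "https://example.com/blog/post"))
print(blocked_tokens(EXAMPLE_ROBOTS, "https://example.com/private/report"))
```

In this example, the blog URL is blocked only for GPTBot, while the URL under `/private/` is blocked for both GPTBot and CCBot.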

Availability Reports

These Lumar reports identify whether AI bots can access your pages. If URLs return 4xx or 5xx errors, are blocked by robots.txt, or are trapped in redirect loops, AI systems won’t be able to crawl them, making discovery impossible.

Use the following Lumar reports to identify issues and make sure all your content is accessible to AI bots:

- Broken Pages (4xx Errors). URLs that return a 400, 404, or 410 status code indicate a page could not be returned by the server because it doesn’t exist. AI bots cannot access or index broken pages, so this is a fundamental availability issue.

- 5xx Errors. URLs that return any HTTP status code in the range 500 to 599 indicate a page is temporarily unavailable and may be removed from the search engine’s index. Server errors prevent AI bots from crawling content.

- Failed URLs. URLs that did not return a response within Lumar’s timeout period. This shows potential temporary issues due to poor server performance, or a permanent issue. Failed URLs cannot be processed by AI systems for content understanding.

- Redirect Loops. URLs with redirect chains that redirect back to themselves, creating an infinite redirection loop, which prevents AI bots from reaching actual content.

- Redirect Chains. URLs redirecting to another URL that is also a redirect, resulting in a redirect chain. Long redirect chains can cause AI bots to abandon crawling before reaching content.

- 3xx. Minor redirect issues that may impact AI bot efficiency.

- JavaScript Redirects. Pages that redirect to another URL using JavaScript.

- Internal Redirects Found in Web Crawl. URLs that were found in the web crawl, that redirect to another URL with a hostname that is considered internal, based on the domain scope configured in the project settings.
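The difference between a single redirect, a redirect chain, and a redirect loop can be sketched in a few lines. The `classify_redirects` function below is a hypothetical helper that follows a simple source-to-target map (for example, one exported from crawl data) and is not how Lumar detects these issues internally.

```python
def classify_redirects(start: str, redirects: dict[str, str], max_hops: int = 10):
    """Follow `start` through a {source: target} redirect map.

    Returns (label, path), where label is "no_redirect", "single_redirect",
    "redirect_chain", or "redirect_loop".
    """
    path = [start]
    seen = {start}
    current = start
    while current in redirects and len(path) <= max_hops:
        current = redirects[current]
        path.append(current)
        if current in seen:
            # We have returned to a URL already visited: the chain never ends.
            return "redirect_loop", path
        seen.add(current)
    hops = len(path) - 1
    if hops == 0:
        return "no_redirect", path
    if hops == 1:
        return "single_redirect", path
    return "redirect_chain", path

# Hypothetical redirect map for illustration.
REDIRECTS = {"/a": "/b", "/b": "/c", "/x": "/y", "/y": "/x", "/old": "/new"}

for url in ["/a", "/x", "/old", "/c"]:
    print(url, classify_redirects(url, REDIRECTS))
```

The `max_hops` limit is an assumption that mirrors how crawlers typically give up on very long chains; the right value depends on the bot.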

Renderability Reports

AI systems may rely on rendered (JavaScript-executed) content. If important elements only appear post-render, but bots can’t access them, you risk your content not being visible to AI. The following reports help you identify potential issues, so they can be addressed:

- Rendered Link Count Mismatch. Pages with a difference between the number of links found in the rendered DOM and the raw HTML source. AI bots need a consistent link structure between raw and rendered content for proper understanding.

- Rendered Canonical Link Mismatch. Pages with canonical tag URLs in the rendered HTML that do not match the canonical tag URL found in the static HTML. Inconsistencies can confuse AI systems about which content version to reference.

- Rendered Word Count Mismatch. Pages with a word count difference between the static HTML and the rendered HTML. Content discrepancies between raw and rendered versions affect AI content understanding.
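As a rough illustration of what a raw-versus-rendered comparison involves, the sketch below counts links and visible words in two HTML strings using Python's built-in `html.parser`. It assumes you already have the rendered HTML from a headless browser; the helper names and the sample markup are ours, and Lumar's own counting rules may differ.

```python
from html.parser import HTMLParser

class _PageStats(HTMLParser):
    """Counts <a href> links and visible words, ignoring script and style text."""

    def __init__(self):
        super().__init__()
        self.links = 0
        self.words = 0
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "a" and any(name == "href" and value for name, value in attrs):
            self.links += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.words += len(data.split())

def page_stats(html: str) -> dict[str, int]:
    parser = _PageStats()
    parser.feed(html)
    parser.close()
    return {"links": parser.links, "words": parser.words}

def render_mismatch(raw_html: str, rendered_html: str) -> dict[str, int]:
    """Return rendered-minus-raw differences for link and word counts."""
    raw, rendered = page_stats(raw_html), page_stats(rendered_html)
    return {key: rendered[key] - raw[key] for key in raw}

# Hypothetical page whose product links only exist after JavaScript runs.
RAW = '<body><h1>Shop</h1><div id="app"></div><script>render()</script></body>'
RENDERED = ('<body><h1>Shop</h1><div id="app"><a href="/p/1">Red shoes</a>'
            '<a href="/p/2">Blue shoes</a></div></body>')

print(render_mismatch(RAW, RENDERED))
```

A large positive difference suggests that a bot that does not execute JavaScript would miss those links and that text.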

Indexability Reports

Even if a page is crawlable, it may not appear in AI results if it’s not indexed or lacks visibility in search. The following Lumar reports identify pages that are ignored by search engines or missing from SERPs. Use these to prioritize fixes that improve discoverability:

- Non-Indexable Pages. Pages that return a 200 status but are prevented from being indexed via a noindex or canonical tag URL that doesn’t match the page’s URL. Non-indexable pages cannot be included in AI training data or search responses.

- Canonicalized Pages. Pages with a URL that does not match the canonical URL found in the HTML canonical tag or the canonical Link HTTP response header. Canonical tags help AI systems understand preferred content versions.

- Noindex Pages. Pages that cannot be indexed because they contain a robots noindex directive in the robots meta tag, robots.txt file or X-Robots-Tag in the header, preventing AI systems from including pages in responses.

- Disallowed Pages. All URLs included in the crawl that were disallowed in the robots.txt, blocking AI bot access to content.

- unavailable_after Scheduled Pages. Pages with a 200 status code and an unavailable_after robots directive in a meta tag or response header, where the date specified is in the future. Future unavailability may impact long-term AI content freshness.

- unavailable_after Non-Indexable Pages. Pages with a 200 status code and an unavailable_after robots directive in a meta tag or response header, where the date specified is in the past. Expired content should not be referenced by AI systems in current responses.

- Pages with AI Bot Hits (coming soon). Showing pages where AI bots are successfully accessing content.

- Pages without AI Bot Hits (coming soon). Showing pages not being discovered or accessed by AI bots.

- Pages with GPTBot Hits (coming soon). Pages available to ChatGPT for training and responses.

- Pages with Anthropic-ai Bot Hits (coming soon). Pages available to Claude for training and responses.

- Pages with Google-Extended Bot Hits (coming soon). Pages available to Google’s AI systems.

- Pages with Bingbot Bot Hits (coming soon). Pages available to Bing AI systems for responses.

- Pages with PerplexityBot Bot Hits (coming soon). Pages available to Perplexity AI for search responses.
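The indexability signals described above (noindex, canonical mismatch, and unavailable_after) can be combined into a simple check. The sketch below is illustrative only: `indexability_issues` is a hypothetical function, and it assumes the unavailable_after date is written as an ISO 8601 date (YYYY-MM-DD), whereas real pages may use other date formats.

```python
from datetime import date

def parse_directives(*values: str) -> dict[str, str]:
    """Merge comma-separated robots directives from meta tag and header values."""
    directives = {}
    for value in values:
        for part in (value or "").split(","):
            name, _, argument = part.strip().partition(":")
            if name:
                directives[name.strip().lower()] = argument.strip()
    return directives

def indexability_issues(url, canonical, meta_robots, x_robots_tag, today):
    """Return a list of reasons why the page may not be indexable."""
    directives = parse_directives(meta_robots, x_robots_tag)
    issues = []
    if "noindex" in directives or "none" in directives:
        issues.append("noindex")
    if canonical and canonical != url:
        issues.append("canonicalized")
    if "unavailable_after" in directives:
        # Assumption: the date is an ISO 8601 date such as 2025-01-31.
        expiry = date.fromisoformat(directives["unavailable_after"])
        issues.append(
            "unavailable_after_expired" if expiry <= today
            else "unavailable_after_scheduled"
        )
    return issues

print(indexability_issues(
    url="https://example.com/sale",
    canonical="https://example.com/offers",
    meta_robots="index, follow",
    x_robots_tag="unavailable_after: 2025-01-01",
    today=date(2025, 6, 1),
))
```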

Page Content Reports

Page content is crucial for AI systems to understand topics and context, so any issues in areas like titles and descriptions can prevent AI systems from understanding, and therefore using, your content. The following reports help you identify content issues and make relevant improvements:

- Missing Titles. Indexable pages with a blank or missing HTML title tag. Titles are crucial for AI systems to understand page topics and context.

- Short Titles. Indexable pages with a title tag of less than 10 characters*. Short titles may not provide enough context for AI content understanding.

- Missing Descriptions. Indexable pages without a description tag. Descriptions help AI systems understand page content and generate relevant responses.

- Short Descriptions. Pages with a description tag less than 50 characters*. Brief descriptions may lack sufficient context for AI content comprehension.

- Thin Pages. Indexable pages with content of less than 3,072 bytes* (thin page threshold), but more than 512 bytes* (empty page threshold). Thin content provides limited value for AI training and response generation.

- Max Content Size. Pages with content of more than 51,200 bytes*. Very large content may be truncated by AI systems with token limits.

- Max HTML Size. Pages that exceed 204,800 bytes*. Large HTML files may impact AI bot crawling efficiency.

- Missing H1 Tags. Pages without any H1 tags. H1 tags help AI systems understand page hierarchy and main topics.

*These values can be changed in Lumar’s Advanced Settings if required.
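To show how these thresholds fit together, here is a small sketch that applies the default values listed above to one page. The `Thresholds` class and `content_issues` function are hypothetical names for illustration, and the exact boundary handling (for example, whether a page of exactly 512 bytes counts as empty) is our assumption.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Thresholds:
    # Defaults taken from the report descriptions above; all are adjustable.
    min_title_chars: int = 10
    min_description_chars: int = 50
    empty_page_bytes: int = 512
    thin_page_bytes: int = 3072
    max_content_bytes: int = 51200
    max_html_bytes: int = 204800

def content_issues(title, description, h1_count, content_bytes, html_bytes,
                   limits=Thresholds()):
    """Return the content report labels that would apply to a single page."""
    issues = []
    if not title.strip():
        issues.append("missing_title")
    elif len(title) < limits.min_title_chars:
        issues.append("short_title")
    if not description.strip():
        issues.append("missing_description")
    elif len(description) < limits.min_description_chars:
        issues.append("short_description")
    if limits.empty_page_bytes < content_bytes < limits.thin_page_bytes:
        issues.append("thin_page")
    if content_bytes > limits.max_content_bytes:
        issues.append("max_content_size")
    if html_bytes > limits.max_html_bytes:
        issues.append("max_html_size")
    if h1_count == 0:
        issues.append("missing_h1")
    return issues

print(content_issues(title="Home", description="", h1_count=0,
                     content_bytes=1000, html_bytes=300000))
```

Passing a different `Thresholds` instance mirrors changing the values in Advanced Settings.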

Bot Behavior & Crawl Budget Reports

Insights into bot behavior show how often AI bots hit your pages and which pages are ignored. Pages with no hits or low frequency may be under-prioritized by AI crawlers, especially if they aren’t in sitemaps or are disallowed. The following reports help you assess crawl efficiency and make improvements:

- AI Discoverability Reporting. Understand the proportion of requests coming from Google vs AI bots.

- AI Bot Breakdown Reporting. Understand the breakdown of which AI bots are hitting the site most often.

- AI Bot Requests. See when AI bots are hitting the site to understand spikes and dips in requests.

- Top Pages By Bot. Discover and sort by which pages are getting the most requests from AI bots, to understand if the pages you want to be discovered are being discovered.

- AI Bot Hits by Response Code. Understand if the pages being found by AI bots are actually available. Logs are grouped by request over time to see if there are any trends in which pages are being hit by AI bots.
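If you want a feel for the kind of log analysis behind these reports, the sketch below counts requests per bot group and per response code from access log lines. It assumes a combined-log-style format and matches bots by a substring of the User-Agent header; the token list, the sample lines, and the user-agent strings are simplified and made up for illustration, and user agents can be spoofed, so production analysis usually also verifies IP ranges.

```python
import re
from collections import Counter

# Illustrative substrings to look for in the User-Agent header (an assumption,
# not Lumar's bot list). Googlebot is included for the Google vs AI comparison.
BOT_GROUPS = {
    "GPTBot": "ai",
    "ChatGPT-User": "ai",
    "PerplexityBot": "ai",
    "CCBot": "ai",
    "Googlebot": "google",
}

# Matches: "METHOD /path PROTOCOL" status bytes "referrer" "user-agent"
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<ua>[^"]*)"'
)

def summarize_bot_hits(lines):
    """Return (hits per bot group, hits per (bot, status code))."""
    by_group = Counter()
    by_bot_status = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match is None:
            continue  # skip lines that do not fit the assumed format
        user_agent = match.group("ua").lower()
        for token, group in BOT_GROUPS.items():
            if token.lower() in user_agent:
                by_group[group] += 1
                by_bot_status[(token, int(match.group("status")))] += 1
                break
    return by_group, by_bot_status

# Made-up log lines for illustration only.
SAMPLE_LOG = [
    '203.0.113.5 - - [01/Jun/2025:10:00:00 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.6 - - [01/Jun/2025:10:00:05 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "Mozilla/5.0 (compatible; PerplexityBot/1.0)"',
    '203.0.113.7 - - [01/Jun/2025:10:00:09 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
    '203.0.113.8 - - [01/Jun/2025:10:00:12 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0) Chrome/125.0"',
]

groups, statuses = summarize_bot_hits(SAMPLE_LOG)
print(dict(groups))
print(dict(statuses))
```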

Structured Data Reports

As mentioned above, AI search is a constantly evolving space. It’s not 100% clear right now how much of an impact structured data (or schema markup) has on LLMs. It is possible that AI systems use schema markup (e.g. FAQ, Product, How To, etc.) to understand context and relationships. The following Lumar reports expose missing or invalid markup and highlight high-value structured content already in place, so they may also assist in optimizing content for AI search:

- Pages with Schema Markup. All pages included in the crawl that have schema markup found in either JSON-LD or Microdata. Highlights any schema.org markup on a page.

- Pages without Structured Data. All pages included in the crawl that do not have schema markup.

- Product Structured Data Pages. All pages in the crawl that were found to have product structured data markup.

- Valid Product Structured Data Pages. All pages with valid product structured data based on Google Search Developer documentation.

- Invalid Product Structured Data Pages. All pages with invalid product structured data based on Google Search Developer documentation.

- Event Structured Data Pages. All pages in the crawl that were found to have event structured data markup.

- News Article Structured Data Pages. All pages in the crawl that were found to have news article structured data markup.

- Valid News Article Structured Data Pages. All pages with valid news article structured data based on Google Search Developer documentation.

- Invalid News Article Structured Data Pages. All pages with invalid news article structured data based on Google Search Developer documentation.

- Breadcrumb Structured Data Pages. All pages in the crawl that were found to have breadcrumb structured data markup.

- FAQ Structured Data Pages. All pages in the crawl that were found to have FAQ structured data markup.

- How To Structured Data Pages. All pages in the crawl that were found to have How To structured data markup.

- Recipe Structured Data Pages. All pages in the crawl that were found to have recipe structured data markup.

- Video Structured Data Pages. All pages in the crawl that were found to have video structured data markup.

- QA Structured Data Pages. All pages in the crawl that were found to have QA structured data markup.

- Review Structured Data Pages. All pages in the crawl that were found to have review structured data markup.

- Confusing Heading Order (coming soon). Poor heading hierarchy can confuse AI content structure understanding.

- Non-HTML5 Pages (coming soon). Modern HTML5 structure may improve AI content parsing.

- Merged Table Headers (coming soon). Complex table structures can impair AI data extraction and understanding.
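For the schema markup reports in this section, a first step is simply finding which schema.org types a page declares. The sketch below extracts `@type` values from JSON-LD script blocks using only the Python standard library. It is a simplified illustration: it ignores Microdata and nested `@graph` structures, and "unparseable" here only means the JSON failed to parse, which is a much weaker test than validation against Google's documentation.

```python
import json
from html.parser import HTMLParser

class _JsonLdCollector(HTMLParser):
    """Collects the text of <script type="application/ld+json"> blocks."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append("".join(self._buffer))
            self._in_jsonld = False

def schema_types(html: str):
    """Return (list of declared @type values, count of unparseable blocks)."""
    collector = _JsonLdCollector()
    collector.feed(html)
    collector.close()
    types, unparseable = [], 0
    for block in collector.blocks:
        try:
            data = json.loads(block)
        except json.JSONDecodeError:
            unparseable += 1
            continue
        for item in data if isinstance(data, list) else [data]:
            if isinstance(item, dict) and "@type" in item:
                declared = item["@type"]
                types.extend(declared if isinstance(declared, list) else [declared])
    return types, unparseable

# Hypothetical page with one well-formed and one truncated JSON-LD block.
PAGE = """
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Product", "name": "Red shoes"}
</script>
<script type="application/ld+json">{"@type": "FAQPage", </script>
"""

print(schema_types(PAGE))
```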

Relevancy Reports (coming soon)

The use of vector models enables semantic search to understand what is being asked, not just how it’s phrased. These reports will identify content with relevance issues, so they can be optimized to improve performance for AI search:

- Pages with Search Queries (coming soon). A positive signal indicating that the content matches user search intent.

- Pages with Low / Medium / High Query Relevance (coming soon). Indicating how well queries match content. Poor query matching reduces the likelihood of AI systems referencing content.

- Search Queries with Landing Pages (coming soon). Showing content that successfully matches search intent.

- Search Queries. Indicating search demand for content topics.

- Pages with Low / Medium / High Title Relevance (coming soon). Poor relevance may impact AI understanding of the page’s main topic.

- Pages with Low / Medium / High H1 Relevance (coming soon). Poor relevance may impact AI understanding of the page’s main topic.

- Pages with Low / Medium / High Description Relevance (coming soon). Poor relevance may impact AI content summarization quality.

- Pages with Low / Medium / High Content Relevance (coming soon). Poor content relevance reduces the likelihood of AI system content usage.

- Search Queries with Poorly / Moderately / Well Matched Landing Pages (coming soon). Poor query-matching may confuse AI content understanding.
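To illustrate the idea of scoring relevance as low, medium, or high, the sketch below compares a query with a page title using cosine similarity. Important caveats: the `embed` function is a toy word-count stand-in for a real vector model, and the 0.3 and 0.6 bucket boundaries are arbitrary values we picked for the example. Neither reflects how Lumar's upcoming relevancy reports will work.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[key] * b[key] for key in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def relevance_bucket(score: float, low: float = 0.3, high: float = 0.6) -> str:
    """Map a similarity score to a bucket (thresholds are hypothetical)."""
    if score < low:
        return "low"
    if score < high:
        return "medium"
    return "high"

query = "red running shoes"
for title in ["Red running shoes", "Running shoes for men", "Privacy policy"]:
    score = cosine(embed(query), embed(title))
    print(f"{title!r}: {score:.2f} ({relevance_bucket(score)})")
```

With a real embedding model, titles that use different words for the same concept would also score well, which is the point of semantic search; word counts cannot capture that.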

E-E-A-T Reports

Experience, Expertise, Authoritativeness, and Trustworthiness (E-E-A-T) is a set of criteria Google uses to assess the quality of content and websites. AI systems are likely to prioritize high-quality content in AI search results, so the following reports help highlight issues that may impact the authority, experience, or trustworthiness of your content:

- Pages with Backlinks. Pages with external backlinks, based on uploaded backlink data. Pages with backlinks are generally seen as more authoritative and trustworthy.

- Pages in Analytics. Pages that have driven visits, based on analytics data. Pages that receive organic traffic are likely to be authoritative and trusted.

- Indexable Pages with Backlinks. URLs that are indexable and have backlinks. A high number of indexable pages with backlinks indicates strong authority and trust from other sites.

- Disallowed URLs with Backlinks. URLs that have backlinks from external websites, but are disallowed in the robots.txt. Backlinks to disallowed URLs may indicate wasted authority or poor site management.

- Non-indexable Pages with Backlinks. URLs that have backlinks but are not indexable. Backlinks to non-indexable pages mean authority is not passed to visible content.

- Pages with Backlinks But No Links Out. URLs that have backlinks from external sites but do not link to any other pages. These pages may be seen as authoritative.

- Redirecting URLs with Backlinks. Pages that have backlinks but redirect to another page. These redirects can dilute authority if not managed properly.

- Error Pages with Backlinks. Pages with backlinks from external sites that don’t respond with a 200 status code or a redirect. These pages waste authority and harm trust in the site’s reliability.

- Pages with Meta Nofollow and Backlinks. Pages with backlinks that have a meta or header robots nofollow directive. Nofollowed pages with backlinks do not pass authority.

- Pages with High Domain Authority (coming soon). Pages on domains with high authority are more likely to be trusted and referenced by others.

- Mentions from Authoritative Domains (coming soon). Being mentioned or linked by recognized authorities in the field (news, .gov, .edu, industry leaders, etc.) is a strong signal of authority.

- Brand/Entity Consistency Across the Web (coming soon). Consistent Name, Address, Phone (NAP) and brand/entity information across the web (including social profiles) supports authority and trust.

- Presence in Knowledge Panels or Structured Data (coming soon). Identifies whether a site or author appears in Google Knowledge Panels or uses structured data (schema.org).

- Duplicate Page Sets. Sets of indexable pages that share an identical title, description, and near identical content with other pages found in the same crawl. Duplicate pages reduce the perceived expertise and originality of the site’s content.

- Duplicate Title Sets. Sets of pages that share an identical title tag, excluding pages connected with reciprocal hreflangs. Duplicate titles suggest low effort or expertise in content creation.

- Duplicate Description Sets. Sets of pages that share an identical description, excluding pages connected with reciprocal hreflangs. Duplicate descriptions indicate a lack of unique content.

- Duplicate Body Sets. Sets of pages with similar body content, excluding pages connected with reciprocal hreflangs. Duplicate body content undermines the site’s expertise and value.

- Pages with Duplicate Body. Pages with similar body content shared with at least one other page in the same crawl, excluding pages connected with reciprocal hreflangs. Duplicate body content across pages signals low originality and expertise.

- Duplicate Pages. Indexable pages that share an identical title, description, and near identical content with other pages found in the same crawl, excluding the Primary page from each set of duplicates. Duplicate pages harm the perception of expertise and content quality.

- Pages with High Word Count (coming soon). Pages with substantial word count often indicate in-depth expert content (but should be balanced with quality).

- Author/Contributor Presence (coming soon). Pages that clearly display author or contributor information (with bios or credentials) demonstrate real-world expertise and accountability.

- Content Depth & Topical Coverage (coming soon). Pages that comprehensively cover a topic (measured by semantic analysis or topic modelling) are more likely to be seen as expert resources.

- Presence of Supporting Evidence (Citations/References) (coming soon). Content that cites reputable sources or includes references is more likely to be trusted and seen as expert.

- Unique Broken Links. Instances of links with unique anchor text and target URL, where the target URL returns a 4xx or 5xx status.

- Broken Images. Images that are linked or embedded on your website but don’t return a 200 or a redirect HTTP response. Broken images reduce trust and signal poor site maintenance.

- Internal Broken Links. All instances of links where the target URL returns a 400, 404, 410, 500, or 501 status code. These links undermine trustworthiness and reliability.

- Empty Pages. Indexable pages that are less than 512 bytes (this can be changed in Lumar’s Advanced Settings). Empty pages are a strong negative trust signal.

- HTTPS Pages. All pages using the HTTPS scheme, which is a positive trust signal.

- Pages with Mixed Content Warnings. HTTPS pages that include a script, stylesheet, or linked image with an HTTP URL, which undermines trust and security.

- External Redirects. All internal URLs that redirect to an external URL, outside of the crawl’s domain scope. External redirects can be abused and may reduce trust if not managed properly.

- Fast Fetch Time (<1 sec). All URLs with a fetch time of 1 second or less, which improves user trust and satisfaction, therefore supporting trustworthiness.

- Pages with Recent Updates (coming soon). Recently updated pages are more likely to be accurate and trustworthy, reflecting current information.

- User Reviews & Ratings (coming soon). Positive user reviews and high ratings increase trust.

- Clear Contact & Customer Support Information (coming soon). Easily accessible contact details and support options show transparency and build trust.

- Privacy Policy & Terms of Service Presence (coming soon). Having clear privacy policies and terms of service is essential for trust.

- Low Spam/Phishing/Deceptive Content Signals (coming soon). Any signals of spam will harm trust.

- Content Freshness/Update Frequency (coming soon). Regularly updated content is more likely to be accurate and trustworthy.

- Mobile Usability & Core Web Vitals (coming soon). Good scores on Core Web Vitals and mobile usability are now direct ranking factors and impact user trust.

- Presence of Ads/Interstitials/Disruptive Elements (coming soon). Excessive or disruptive ads/interstitials can harm trust and user experience.

- High / Moderate / Low Content Freshness (coming soon). Fresh content is more likely to be referenced by AI systems. These reports will indicate high, acceptable or low content freshness.
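As a simple illustration of the duplicate reports listed in this section, the sketch below groups URLs into duplicate title sets. The `duplicate_title_sets` function is a hypothetical helper: it treats titles as identical after normalizing whitespace and letter case (our assumption), and it does not exclude pages connected by reciprocal hreflangs as the Lumar report does.

```python
from collections import defaultdict

def duplicate_title_sets(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs that share the same title; return only groups of 2 or more."""
    groups = defaultdict(list)
    for url, title in pages.items():
        # Normalize whitespace and case so trivial differences do not hide duplicates.
        key = " ".join(title.split()).casefold()
        if key:
            groups[key].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]

# Hypothetical crawl data: URL -> title tag.
PAGES = {
    "/shoes/red": "Running Shoes | Example Store",
    "/shoes/blue": "Running  shoes | Example Store",
    "/about": "About Us | Example Store",
    "/untitled": "",
}

print(duplicate_title_sets(PAGES))
```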

How Else Does Lumar Help?

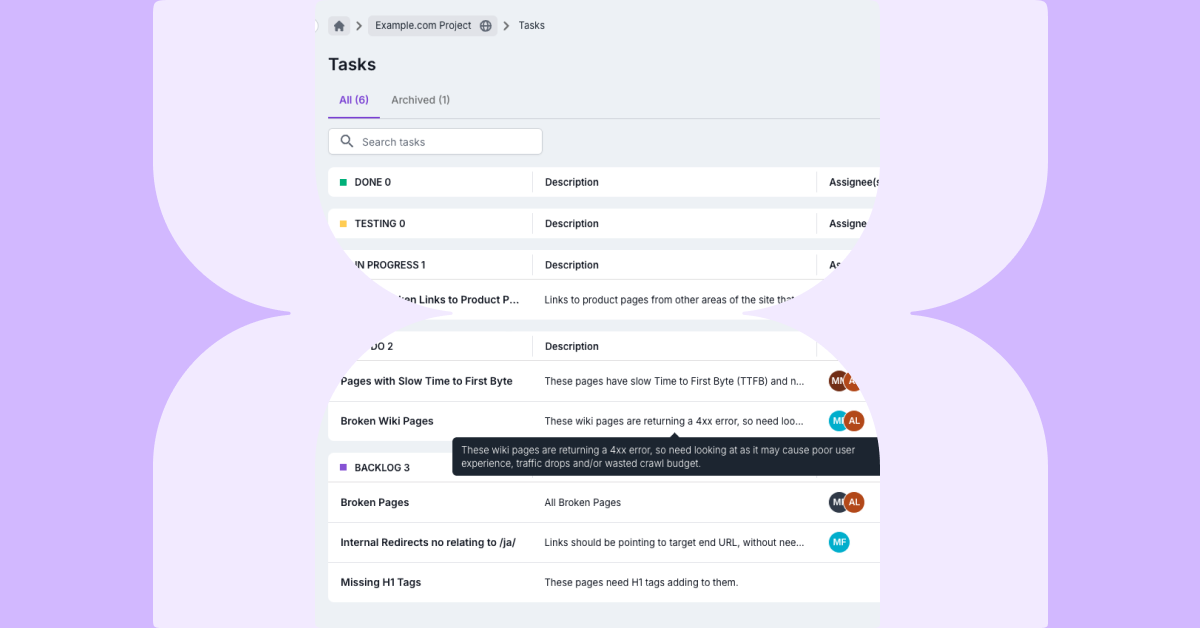

Aside from collecting and analyzing data at scale for your site, Lumar also helps you act on that data to quickly identify, prioritize, and fix issues, and to stop them from happening again.

- Make the most important, impactful fixes first with logical grouping of issues, health scores and visualizations to avoid data overload. You can even engage our own industry experts to help.

- Save time, action tasks, and improve collaboration with AI-supported processes—like ticket content creation to ensure devs have all the information they need—so issues get properly fixed and technical debt is reduced.

- Stop issues recurring with automated QA tests and dev tools to prevent new code introducing issues.

- Mitigate risk with customizable alerts when issues do return or new issues appear, and customizable dashboards so you can easily monitor multiple domains, geographies, or important site sections in one place.

Find out how Lumar can help you optimize for AI Search

What’s Next in Lumar for AI Search?

As mentioned above, our team is working on a new GEO category, with subcategories for discovery, understanding, and inclusion. This will include additional reports, analysis, and improvements that relate specifically to GEO for AI search. We’ll update this article as we release new reports and analysis, but you can also sign up for our newsletter below to stay updated on what’s new in Lumar.