SearchLeeds Recap Part Two: Technical SEO Focus

We learned so much at SearchLeeds that we split our recap into two parts! Part two focuses on technical SEO and covers everything from keywords to site speed, website audits, migrations and more. If you haven’t read part one of our recap, you can find that here.

Who is featured in this recap:

- Dawn Anderson

- Bastian Grimm

- Fili Wiese

- Stephen Kenwright

- Kelvin Newman

- Rachel Costello

- Luke Carthy

- Julia Logan

- Kristine Schachinger

- Steve Chambers

- Gerry White

International site speed: Going for super-speed around the globe with Bastian Grimm of Peak Ace

Bastian, a DeepCrawl CAB and founder of award-winning performance marketing agency Peak Ace AG, kicked things off on the SEO track at SearchLeeds by sharing his knowledge on how to make websites faster.

Performance = User Experience

For Bas, performance is all about user experience. Fast loading times play an important role in overall user experience and, at their core, should be about making people happy. It’s no surprise, then, that slow-loading pages are a major factor in page abandonment.

Fast-loading pages are also a great way of making search engines happy. Although Google is still using desktop metrics to score page speed at the moment, this will shift to mobile with the Mobile First Index, in which page speed is definitely a ranking factor. You may have seen me share an update on this on Twitter last week, as Google advised optimising your site to be faster for users and not just for Googlebot:

Confirmed! #Google will determine #pagespeed based on #UX! Read on for more #SEO learnings from Webmaster Hangouts with the legendary @JohnMu here: https://t.co/hyPCpEVx7b

— DeepCrawl (@DeepCrawl) June 15, 2018

Getting the basics right

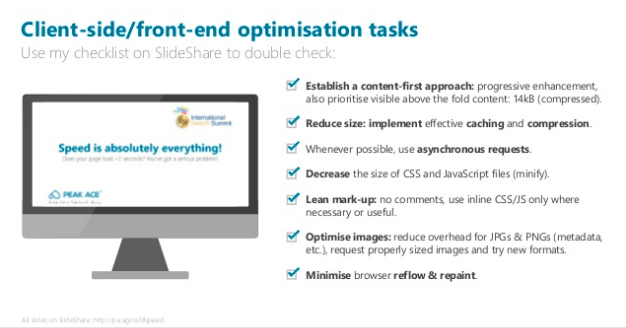

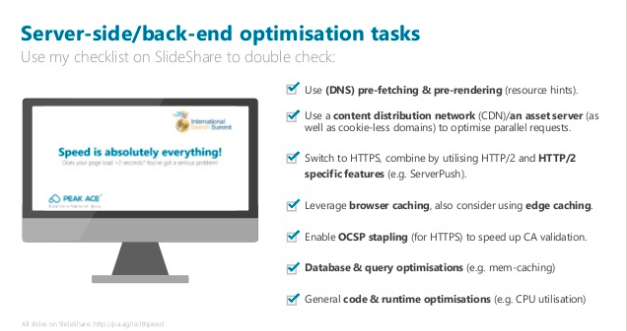

There are plenty of client-side (front-end) and server-side (back-end) optimisation tasks that you and your team can start on today to make sure you’re getting the basics right.

Everyone’s moved to HTTPS…right?

By now, many of us have migrated our websites to the more secure HTTPS protocol, which opens up more site speed options: namely, moving to HTTP/2, which browsers only support over HTTPS!

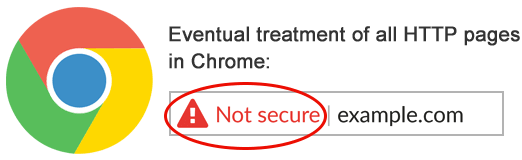

But for anyone who hasn’t, Bas recommends doing so before July, when Chrome 68 arrives and marks all HTTP pages as “not secure.” Talk about a bad user experience…

If you don’t want your website to have pages that look like the example above, you can use DeepCrawl to check whether you have any HTTP pages that will trigger a warning in your users’ Chrome browsers.
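If you export a crawl with each URL’s status code and redirect target, a short Python script can list the pages that are still served over HTTP rather than redirecting to HTTPS, i.e. the pages Chrome would label “not secure”. This is a minimal sketch assuming a hypothetical `crawl` dictionary of URL to (status, Location header); it is not DeepCrawl’s export format or API.

```python
from typing import Dict, List, Optional, Tuple
from urllib.parse import urlsplit


def http_pages_without_https_redirect(
    crawl: Dict[str, Tuple[int, Optional[str]]]
) -> List[str]:
    """Return HTTP URLs that are served as-is instead of redirecting to HTTPS.

    `crawl` maps each crawled URL to (status code, Location header or None).
    """
    flagged = []
    for url, (status, location) in crawl.items():
        if urlsplit(url).scheme != "http":
            continue  # HTTPS pages are not affected by the "not secure" label
        redirects_to_https = (
            300 <= status < 400
            and location is not None
            and urlsplit(location).scheme == "https"
        )
        if not redirects_to_https:
            flagged.append(url)
    return sorted(flagged)


# Hypothetical crawl export, for illustration only
crawl = {
    "http://example.com/": (301, "https://example.com/"),
    "http://example.com/old-offer": (200, None),
    "https://example.com/": (200, None),
}
print(http_pages_without_https_redirect(crawl))  # ['http://example.com/old-offer']
```

Any 3xx to HTTPS is accepted here because the user ends up on a secure page; for a migration you would still want those redirects to be permanent (301/308).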

For anyone interested in diving into HTTP/2, we suggest you take Bas up on his suggestion to check out the guide by Tom Anthony, Head of R&D at award-winning agency Distilled, which is famous across SEO land for its truck analogy. You can also check out my BrightonSEO recap for condensed learnings from Tom’s talk on the benefits of HTTP/2 and help with making the transition; one attendee, Jenny Farnfield, described the talk as “my favourite explanation of HTTP/2 to date.”

Don’t forget to optimise your images for speed

A great way to start with basic image optimisation is to use tools like TinyJPG or TinyPNG. These give you a super easy way to put your images on a diet without compromising on quality!

Another way to save loads of megabytes on images (in one example, 1.74MB out of 1.9MB) is a tool called Cloudinary. It offers better image and video compression, combined with modern image formats such as WebP, FLIF, BPG and JPEG-XR.
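For a sense of scale, saving 1.74MB out of 1.9MB removes over 90% of the original image payload. The snippet below just reproduces that arithmetic from the two figures quoted; `percent_saved` is an illustrative helper, not part of any tool.

```python
def percent_saved(original_mb: float, saved_mb: float) -> float:
    """Share of the original image payload removed by optimisation, in percent."""
    if original_mb <= 0:
        raise ValueError("original size must be positive")
    return 100 * saved_mb / original_mb


original, saved = 1.9, 1.74  # MB, the figures quoted above
print(f"{percent_saved(original, saved):.1f}% saved, {original - saved:.2f}MB left")
# 91.6% saved, 0.16MB left
```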

Optimising images can lead to pretty impressive results. Furnspace saw the following gains through image optimisation:

- 2x growth in purchase conversions

- 7% increase in share of revenue from mobile visitors

- 2x longer engagement time on page

- 65% faster web page download time – saving 11 seconds

- 86% reduction in image payload

CDNs can be a great help, especially for global business

For help finding the CDN that suits you best, depending on where you are and which regions or countries you mainly serve, check out CDNPerf. You should also test the latency of your CDN or server from locations all over the world.
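Dedicated latency-testing services exist for this, but as a rough do-it-yourself check you could time TCP connections to your CDN or origin from machines in each region you serve. This sketch uses only Python’s standard library; the function name and defaults (port 443, five samples) are assumptions, and TCP connect time is only a proxy for real page-load latency.

```python
import socket
import statistics
import time


def tcp_connect_ms(host: str, port: int = 443, samples: int = 5, timeout: float = 3.0) -> float:
    """Median time in milliseconds to open a TCP connection to host:port.

    The TCP handshake takes roughly one network round trip, so this is a rough
    latency proxy. For hostnames, each sample also includes the DNS lookup
    (which the OS may or may not cache), one reason to take the median.
    """
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        with socket.create_connection((host, port), timeout=timeout):
            pass  # connection established; close it straight away
        timings.append((time.perf_counter() - start) * 1000)
    return statistics.median(timings)


# Usage: run the same script from machines in each region you serve, e.g.
# print(f"{tcp_connect_ms('www.example.com'):.1f} ms")
```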

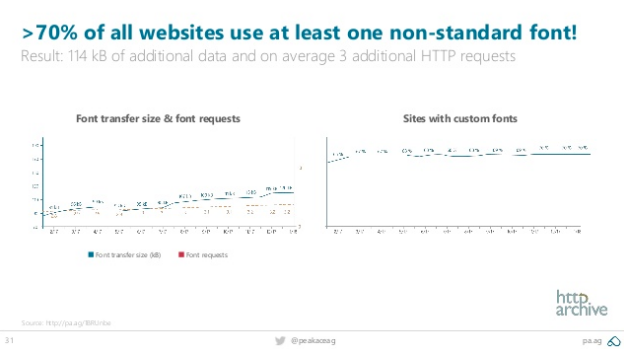

Custom web fonts are cool, but they’re usually slow

The majority of websites use a custom font, which adds extra baggage that slows things down.

Oftentimes we pick the easy option of loading fonts through external CSS, without realising that this causes render-blocking.

Nobody likes lazy (AKA asynchronous) loading images

Both FOIT (flash of invisible text) and FOUT (flash of unstyled text) can cause flickering that annoys users. If you’re using custom fonts, you need to make sure the experience is as unobtrusive as possible.

You can fight FOUT by using a font style matcher, or by choosing a font display strategy. For blocking and swapping, Bas recommends checking out Monica Dinculescu’s SmashingConf talk Fontastic Web Performance, as one line of code can make all the difference. If you only do one thing, go to your CSS file, look for @font-face and add ‘font-display: optional’, as this is the safest and easiest website performance gain!
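To find where that one line is missing across your stylesheets, you could scan them for `@font-face` rules without a `font-display` descriptor. A minimal regex-based sketch, assuming plain CSS with no nested braces inside `@font-face` (it is not a full CSS parser, and the sample font name and path are hypothetical):

```python
import re

# Matches each @font-face rule and captures its body (assumes no nested braces)
FONT_FACE = re.compile(r"@font-face\s*\{([^}]*)\}", re.IGNORECASE)
HAS_DISPLAY = re.compile(r"font-display\s*:", re.IGNORECASE)


def missing_font_display(css):
    """Font families whose @font-face rule has no font-display descriptor."""
    missing = []
    for body in FONT_FACE.findall(css):
        if HAS_DISPLAY.search(body):
            continue
        family = re.search(r"font-family\s*:\s*([^;]+)", body, re.IGNORECASE)
        missing.append(family.group(1).strip().strip("'\"") if family else "(unnamed)")
    return missing


def add_font_display(css, value="optional"):
    """Append `font-display: <value>;` to every @font-face rule that lacks one."""
    def fix(match):
        body = match.group(1)
        if HAS_DISPLAY.search(body):
            return match.group(0)  # already has a font-display strategy
        return "@font-face {" + body.rstrip() + f"\n  font-display: {value};\n}}"
    return FONT_FACE.sub(fix, css)


css = """
@font-face {
  font-family: 'Brand Sans';
  src: url('/fonts/brand-sans.woff2') format('woff2');
}
"""
print(missing_font_display(css))  # ['Brand Sans']
print(add_font_display(css))
```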

In a Google Webmaster Hangout last month, which you may have seen me share on Twitter, Google Webmaster Trends Analyst John Mueller clearly advised against asynchronously loading content, as it will delay processing and indexing.

Don’t be lazy; asynchronously loading #content and links will delay processing & #indexing.

Don’t miss the latest #SEO advice from #Google‘s @JohnMu via @DeepCrawl here: https://t.co/cFxNcgLel2

— DeepCrawl (@DeepCrawl) June 1, 2018

Better SEO measurement

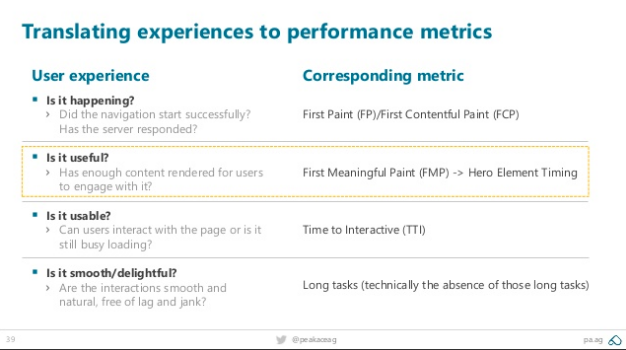

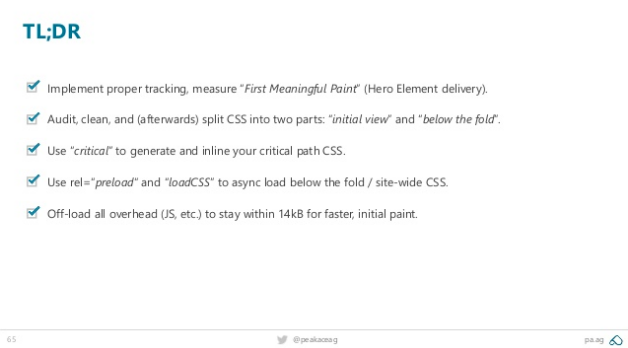

Don’t be lazy and rely on one metric to cover your bases: the PageSpeed Insights score alone is not enough. Instead, track performance by comparing perceived speed before and after your changes.

One of the metrics above, time to first meaningful paint, makes a huge difference to how users perceive speed. To optimise paint times, you can jump into Chrome DevTools > Performance, record a profile and check out ‘Frames’ for the different paint times. You can also track paint timing with Google Analytics (in theory); just make sure your PerformanceObserver is registered in the head before any stylesheets, so it runs before first paint (FP) and first contentful paint (FCP) happen. Oh, and make sure your homepage is fast!

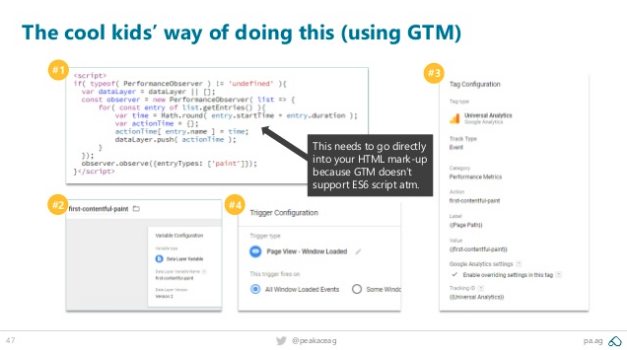

If you’re curious about how the cool kids are doing this, try Google Tag Manager, which you can combine with Google Data Studio to report on paint timings in a way that’s fun to use.

Google just introduced first input delay (FID), which measures how long a page feels frozen: the time from when a user first interacts with your site to when the browser is actually able to respond to that interaction. When you have slow CSS/JS, FID shows you how hard the CPU is working.
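For the before-and-after comparison Bas recommended, it helps to collect several measurements of the same metric (say, first contentful paint) for each version and compare medians and 75th percentiles, rather than a single run or a single score. A sketch assuming you already have those samples in milliseconds; the figures are made up for illustration.

```python
import statistics
from typing import Dict, List


def p75(samples: List[float]) -> float:
    """75th percentile (needs at least two samples)."""
    return statistics.quantiles(samples, n=4)[2]


def compare_runs(before_ms: List[float], after_ms: List[float]) -> Dict[str, float]:
    """Compare repeated measurements of one metric (e.g. FCP) before/after a change."""
    median_before = statistics.median(before_ms)
    median_after = statistics.median(after_ms)
    return {
        "median_before": median_before,
        "median_after": median_after,
        # Negative means the metric got smaller, i.e. faster
        "median_change_pct": 100 * (median_after - median_before) / median_before,
        "p75_before": p75(before_ms),
        "p75_after": p75(after_ms),
    }


# Made-up first contentful paint samples (ms), for illustration only
before = [1450, 1380, 1620, 1510, 1390, 1700]
after = [1180, 1090, 1250, 1300, 1120, 1210]
for metric, value in compare_runs(before, after).items():
    print(f"{metric}: {value:.0f}")
```

The 75th percentile is included because a median can hide a slow tail of visits.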

Critical rendering path

The critical rendering path covers the code and resources required to render the initial view of a web page, for everyone accessing your site from different devices. But first, Bas gave us a bit of technical SEO and web development background on what this involves.

CSSOM: the CSS Object Model

- Describes how elements are displayed on the site: font colour, size, etc.

- Web browsers use the CSSOM to render a page

DOM: the Document Object Model

- Needed to paint anything in the browser

- Combined with the CSSOM, this helps browsers display web pages

Google doesn’t make a single GET request for CSS files, and requesting external CSS is more expensive, so inlining the CSS is faster than having a separate CSS file for each page. That said, you could approach it differently: split out the CSS that’s required for the initial view from the CSS for the rest of the site, use ‘critical’ to render the page at multiple resolutions, and build a combined version. Having one stylesheet for the first view and one for the rest works on every device, is bulletproof and is the fastest way!
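The critical CSS itself is usually extracted with a tool (such as the `critical` package), but the resulting page head might look something like the markup generated below: the first-view CSS inlined in a `<style>` tag and the full stylesheet loaded without blocking render. This is a sketch of one common pattern (preload plus an `onload` swap, with a `<noscript>` fallback), not necessarily the exact setup Bas described; the file path is hypothetical.

```python
from html import escape


def critical_css_head(critical_css: str, full_css_href: str) -> str:
    """Inline the above-the-fold CSS and load the full stylesheet without blocking render.

    Uses the rel="preload" + onload swap pattern (popularised by the loadCSS
    project), with a <noscript> fallback for visitors without JavaScript.
    Note: critical_css is inserted verbatim, so it must not contain "</style>".
    """
    href = escape(full_css_href, quote=True)
    return "\n".join([
        f"<style>{critical_css}</style>",
        f'<link rel="preload" href="{href}" as="style" '
        "onload=\"this.onload=null;this.rel='stylesheet'\">",
        f'<noscript><link rel="stylesheet" href="{href}"></noscript>',
    ])


print(critical_css_head("header{background:#fff}h1{font-size:2rem}", "/css/site.css"))
```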

Let’s talk about AMP

For some, AMP drives discussion, innovation and collaboration, as it becomes an agenda topic across stakeholders. BUT let’s face it, it also creates loads of extra work, as it involves creating new CSS and extending CMSs and their capabilities, which increases IT and maintenance costs. Plus, the average user doesn’t really get what’s happening, and it’s not really open source (even though it’s on GitHub): Google is very much in control of this one. And AMP isn’t really that fast! The Guardian’s regular responsive site is faster than its AMP version; AMP only feels faster because Google preloads pre-rendered content.

If you have to use AMP, for example if you’re a publisher who wants to appear in the carousel, that’s fine. Just don’t put AMP on a slow site.

If you want to predict which links are most likely to be clicked, so you can preload or pre-render them, give Guess.js a go.
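Guess.js uses analytics data to predict likely next navigations. The sketch below is not Guess.js or its API; it only illustrates the underlying idea with a simple first-order model built from hypothetical session paths, emitting `<link rel="prefetch">` hints for next pages that follow the current page at least half the time (an arbitrary threshold).

```python
from collections import Counter, defaultdict


def build_transitions(sessions):
    """Count page-to-next-page transitions from a list of session paths."""
    transitions = defaultdict(Counter)
    for path in sessions:
        for current, nxt in zip(path, path[1:]):
            transitions[current][nxt] += 1
    return transitions


def prefetch_hints(transitions, page, min_probability=0.5):
    """<link rel="prefetch"> tags for next pages at or above min_probability."""
    counts = transitions.get(page)
    if not counts:
        return []  # no recorded navigations away from this page
    total = sum(counts.values())
    return [
        f'<link rel="prefetch" href="{url}">'
        for url, n in counts.most_common()
        if n / total >= min_probability
    ]


# Hypothetical session paths, e.g. exported from your analytics tool
sessions = [
    ["/", "/menu", "/checkout"],
    ["/", "/menu"],
    ["/", "/about"],
    ["/menu", "/checkout"],
]
transitions = build_transitions(sessions)
print(prefetch_hints(transitions, "/"))  # ['<link rel="prefetch" href="/menu">']
```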

The Past, the Present and the Future of Mobile with Gerry White of Just-Eat

In this session, Gerry, SEO Consultant for Just-Eat and co-founder of the SEO roundtable event series Take it Offline, walked us through the history of the mobile web up to where we are today in terms of key technologies, as well as sharing ideas about the future.

How things have changed

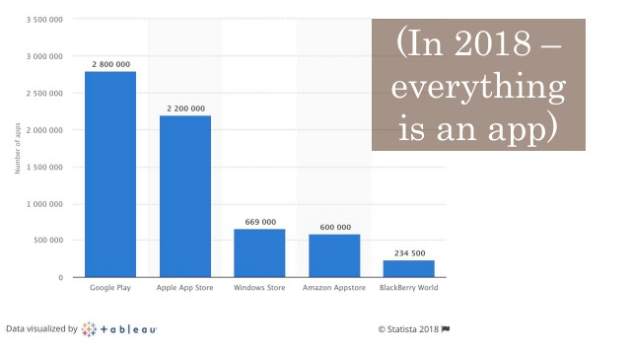

We went from early Nokias like the 7110 in 1999 to the rise of 3G and the iPhone. Unlike competitor devices, the original iPhone didn’t have GPS, but it did have the App Store, which is what changed the game. Companies began optimising for apps rather than for users. This data visual by Tableau sums it up quite nicely.

The downside of this reality is that 60% of apps have never actually been installed.

If you want better user experience, stop annoying people

Like Bas, Gerry stressed the importance of shifting our mindset towards UX; case in point, stop annoying people with interstitials. As marketers, a lot of us have stopped respecting users, with companies pushing apps for everything… at the user’s expense.

Let’s talk about PWAs

For Gerry, PWAs are awesome. If you’re looking for real-life examples of PWAs that have been built especially well, check out Airhorner, an app that literally just makes an air horn noise, as well as Tinder, which is an app for, well… you know 😉

To optimise your PWA, it’s worth referring to Google’s PWA checklist and paying attention to two things. The first is the web app manifest (a manifest.json file), which is a bit like an XML sitemap in that it’s a small text file, in this case one where you choose how your site looks as an app. The second is the service worker, a script that intercepts the requests between your website and the server, letting you choose what works offline. You can inspect both using Lighthouse in Chrome; Google gives you the code for this and your devs can implement it. Hurrah, gone are the days of expensive app developers!
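For illustration, a minimal web app manifest might be generated like this. The manifest is plain JSON (usually served as `manifest.json` and referenced with `<link rel="manifest">`), not JSON-LD. The app names, colours and icon paths are hypothetical; check Google’s PWA checklist and Lighthouse for the current installability requirements. The service worker half of a PWA is JavaScript that runs in the browser, so it isn’t sketched here.

```python
import json


def web_app_manifest(name: str, short_name: str, start_url: str = "/",
                     theme_color: str = "#ffffff") -> str:
    """Build a minimal web app manifest (served as e.g. /manifest.json)."""
    manifest = {
        "name": name,                # full name, e.g. on the install prompt/splash screen
        "short_name": short_name,    # shown under the home-screen icon
        "start_url": start_url,      # page opened when launched from the home screen
        "display": "standalone",     # open without browser UI, like a native app
        "background_color": "#ffffff",
        "theme_color": theme_color,
        "icons": [                   # hypothetical icon paths
            {"src": "/icons/icon-192.png", "sizes": "192x192", "type": "image/png"},
            {"src": "/icons/icon-512.png", "sizes": "512x512", "type": "image/png"},
        ],
    }
    return json.dumps(manifest, indent=2)


print(web_app_manifest("Example Eats Takeaway", "Example Eats"))
# Reference it from every page with: <link rel="manifest" href="/manifest.json">
```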

Lest we forget performance = user experience

In case you didn’t know, the stress caused by mobile phone delays is greater than that caused by watching a horror movie. Don’t believe it? Check out this study by Nichola Stott’s technical marketing agency Erudite.

A newer technology that could help with this is WebAssembly, a low-level binary format that runs in the browser at nearly the speed of native machine code.

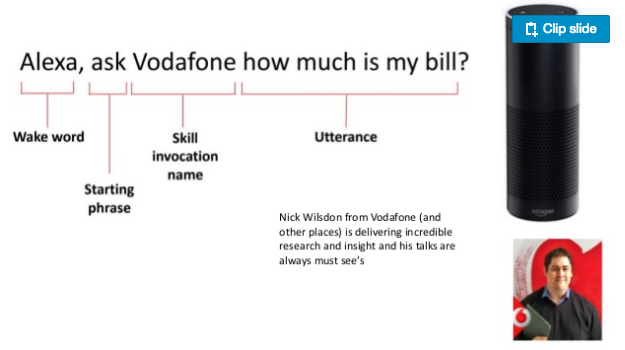

Voice search

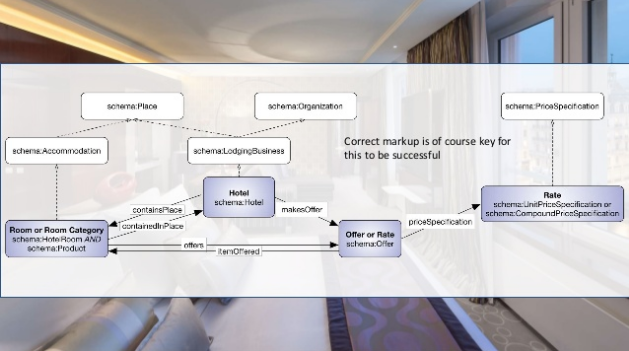

As Gerry noted, Alexa is changing things. In fact, 5% of homes have one, but in reality most people just use Siri to ‘call mum.’ He reckons Google Assistant is actually pretty good, but to make it and all of these devices even better, you need to add schema markup to your content so it can:

- Get picked up

- Be pulled into the app

- Be served to voice search users on home devices

For best practices on entity-first indexing and mobile-first crawling, check out this awesome 5-part blog series explaining exactly that on Cindy Krum’s blog for Mobile Moxie.

Futuristic user experience examples that rock

Did you know you can now order an Uber from within Google Maps? That is both great technology and great UX: even if you don’t have the Uber app, you can still benefit from it! You’ll find even more excellent examples of this in the research of DeepCrawl CAB Nick Wilsdon, Search Product Owner at Vodafone.

How to Not Fuck Up a Migration by Steve Chambers

Steve is an SEO Manager at the award-winning SEO and content agency Stickyeyes. He walked us through the do’s and don’ts of the migration game.

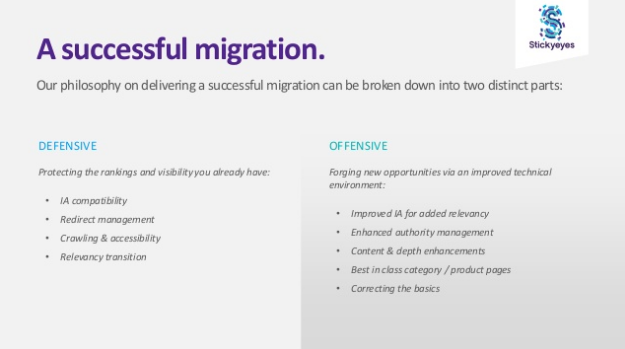

Goals

I know it’s tempting, but don’t focus on just maintaining traffic, rankings and revenue; think about how you can improve them. Stickyeyes breaks this down nicely into two parts:

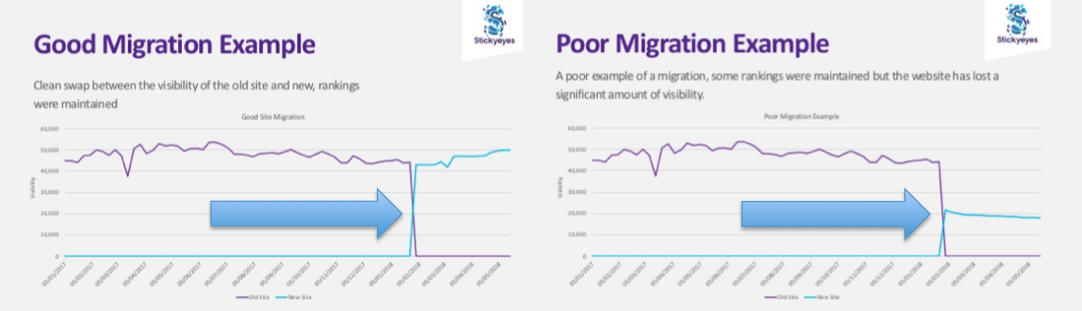

Steve also shared an example of the striking difference between doing this well and doing it badly!

Pre migration planning

It’s important to set responsibilities from the get-go, AKA who is doing what. This is also an opportunity to overcome silos, as getting the UX, design, analytics, content and SEO teams on board from the beginning shows how important each of these areas is to a successful migration. It also allows you to ensure resources are available where necessary, for example by checking that the one person needed for a major task isn’t going to be away when that task is scheduled to be done. And if they are, and you’re mapping thousands of URLs, make sure you have enough people to help who aren’t on holiday during this period!

A great way to smooth out this process is to use project planning software to manage your own and your various teams’ time. You can even create JIRA tickets automatically, based on the changes to your website that matter to you most, using the DeepCrawl Zapier Integration. For example, an increase in Broken Backlinked Pages Driving Traffic, Non-Indexable Pages with Search Impressions or Broken Canonical/JavaScript Redirect Pages with High Bot Hits.

You should share your pre-migration plan with key stakeholders, taking care to be clear and concise. A good checklist is not to be sniffed at: it can really help make sure you don’t forget anything, such as your Google Search Console and Google Analytics checks.

Protip 1, don’t migrate on a Friday; maybe migrate at a low-traffic time, like Saturday at 4am!

Pre migration actions

There are a lot of things to think about when it comes to your Information Architecture (IA), such as:

- How deep do you want things to sit?

- How does one area flow with the greater makeup of the site?

Being conscious of your IA is important, and an SEO needs to be involved in this process. When gathering URLs, you need to capture as many as possible in order to map out the new site structure well, for which Steve recommends using DeepCrawl. He also recommended checking your log files using an analyser like Screaming Frog’s. Did you know you can upload your Screaming Frog log files into DeepCrawl for an added data layer? This is a great way to see how or where your crawl budget is being used or misused!
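If you want a quick look at where Googlebot spends its time before reaching for a dedicated log analyser, a few lines of Python can tally hits from access logs. This sketch assumes the Apache/Nginx “combined” log format and hypothetical sample lines, and it only matches the user-agent string, so treat the output as unverified.

```python
import re
from collections import Counter

# Apache/Nginx "combined" log format
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count requests claiming to be Googlebot, per top-level section and status code.

    Only the user-agent string is checked here; anyone can fake it, so verify
    genuine Googlebot traffic (e.g. via reverse DNS) before drawing conclusions.
    """
    by_section, by_status = Counter(), Counter()
    for line in lines:
        m = LOG_LINE.match(line)
        if not m or "Googlebot" not in m.group("agent"):
            continue
        path = m.group("path").split("?", 1)[0]  # ignore query strings
        section = "/" + path.lstrip("/").split("/", 1)[0]
        by_section[section] += 1
        by_status[m.group("status")] += 1
    return by_section, by_status


# Hypothetical log lines, for illustration only
UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample = [
    f'66.249.66.1 - - [15/Jun/2018:10:00:00 +0000] "GET /products/shoes?page=2 HTTP/1.1" 200 5123 "-" "{UA}"',
    f'66.249.66.1 - - [15/Jun/2018:10:00:05 +0000] "GET /search?q=boots HTTP/1.1" 200 812 "-" "{UA}"',
    '203.0.113.9 - - [15/Jun/2018:10:00:07 +0000] "GET /products/boots HTTP/1.1" 404 0 "-" "Mozilla/5.0"',
]
sections, statuses = googlebot_hits(sample)
print(sections.most_common(), statuses.most_common())
```

Here the hits on `/search` would be a hint that crawl budget is going on internal search URLs.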

Redirect mapping

Redirect mapping is crucial to any migration, to make sure URLs are mapped to their new counterparts correctly. An easy way to do this is to analyse your live and staging environments in the same view using DeepCrawl, so you can see what has been mapped where so far and see all of your legacy redirects in one place too.
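Whatever tool you use, the core checks on a redirect map are simple: every legacy URL should have a mapping, and every target should resolve on the new site. A minimal sketch with hypothetical inputs (a list of legacy URLs, an old-to-new mapping and the status codes from a staging crawl):

```python
def validate_redirect_map(legacy_urls, redirect_map, new_site_status):
    """Check a migration redirect map against a crawl of the new (staging) site.

    legacy_urls: every URL gathered from the old site (crawls, logs, analytics)
    redirect_map: old URL -> new URL
    new_site_status: new URL -> HTTP status seen when crawling staging
    """
    unmapped = sorted(u for u in legacy_urls if u not in redirect_map)
    # Targets that don't return 200 (a target missing from the crawl counts too)
    broken_targets = sorted(
        old for old, new in redirect_map.items() if new_site_status.get(new) != 200
    )
    return {"unmapped": unmapped, "broken_targets": broken_targets}


# Hypothetical data, for illustration only
legacy = ["/shoes", "/boots", "/about-us"]
redirect_map = {"/shoes": "/footwear/shoes", "/boots": "/footwear/boots"}
staging_status = {"/footwear/shoes": 200, "/footwear/boots": 404}
print(validate_redirect_map(legacy, redirect_map, staging_status))
# {'unmapped': ['/about-us'], 'broken_targets': ['/boots']}
```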

Google Search Console Configuration

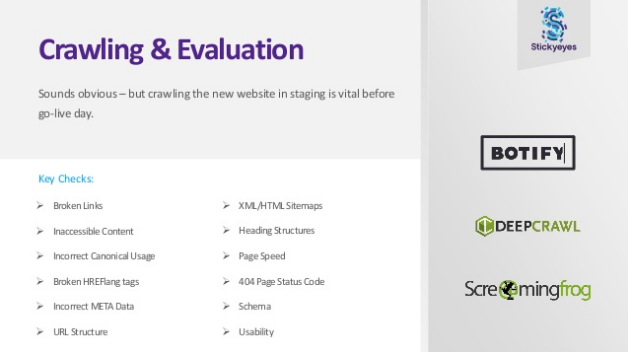

Make sure your target country is set and that all HTTP/HTTPS and www/non-www variants are added. Then crawl the site and evaluate the key checks, such as finding broken links, inaccessible content, incorrect hreflang or canonicals, status codes and more.

Protip 2, double or triple check everything!

Before you migrate, it’s worth your time to do a link profile review. For help with this, I’d recommend taking a look at the Crawl Centred Approach to Content Auditing by Sam Marsden on State of Digital. As well as checking your internal links, it’s also a good idea to benchmark your keyword rankings using a tool like SEOmonitor or Stat Search Analytics.

Protip 3, don’t panic!

Post migration checks

After the migration, make sure to:

- Check your robots.txt to make sure you’re not disallowing your whole site

- Test redirects, and make sure there are no canonicals pointing to staging URLs or to live URLs that are broken

- Fix redirect chains and loops

- Analyse your log files

Steve shared a selection of tools for running all of these checks.
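Two of these checks are easy to script as a sanity pass alongside your crawler. The sketch below uses Python’s built-in `urllib.robotparser` to test URLs against a robots.txt, and walks a source-to-target redirect map to find chains and loops; the robots.txt content and redirect map are hypothetical examples.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """URLs that the given robots.txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


def redirect_problems(redirects, max_hops=10):
    """Find redirect chains (2+ hops) and loops in a source -> target map."""
    chains, loops = {}, []
    for start in redirects:
        path, current = [start], start
        while current in redirects and len(path) <= max_hops:
            current = redirects[current]
            if current in path:
                loops.append(start)
                break
            path.append(current)
        else:  # no loop found: record multi-hop chains
            if len(path) > 2:
                chains[start] = path
    return chains, loops


# Hypothetical post-launch data
robots = "User-agent: *\nDisallow: /"  # e.g. a staging robots.txt left in place by mistake
print(blocked_urls(robots, ["https://example.com/", "https://example.com/shoes"]))
redirects = {"/a": "/b", "/b": "/c", "/x": "/y", "/y": "/x"}
print(redirect_problems(redirects))  # ({'/a': ['/a', '/b', '/c']}, ['/x', '/y'])
```

Any chain found should be collapsed so the first URL redirects straight to the final destination.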

Steve also advises using GSC and/or DeepCrawl for monitoring and testing, and using fetch and render to make sure Google is seeing the site properly on both desktop and mobile. Look at your hreflang too, to see if it’s correct, especially given how often hreflang is messed up. If you’re working on international sites, it might be worth migrating one site at a time.
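One of the most common hreflang mistakes is missing return links: alternates are expected to reference each other, and Google may ignore annotations that aren’t reciprocated. A minimal check, assuming you’ve already extracted each page’s hreflang annotations into a dictionary (the URLs below are hypothetical):

```python
def missing_return_links(hreflang):
    """Find hreflang annotations that the alternate page doesn't reciprocate.

    hreflang maps each page URL -> {language code: alternate URL} as declared
    on that page. If page A lists B as an alternate, B must also list A.
    """
    problems = []
    for page, alternates in hreflang.items():
        for lang, alternate in alternates.items():
            if alternate == page:
                continue  # self-referencing annotation, nothing to check
            if page not in hreflang.get(alternate, {}).values():
                problems.append((page, lang, alternate))
    return sorted(problems)


# Hypothetical annotations gathered from a crawl
hreflang = {
    "https://example.com/en-gb/": {
        "en-gb": "https://example.com/en-gb/",
        "de-de": "https://example.com/de-de/",
    },
    "https://example.com/de-de/": {"de-de": "https://example.com/de-de/"},
}
print(missing_return_links(hreflang))
# [('https://example.com/en-gb/', 'de-de', 'https://example.com/de-de/')]
```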

Use GSC to make sure you’re not missing or blocking any important scripts that might affect the rendering of your page. Try using Google’s Page Speed tool, Pingdom or GTmetrix to benchmark your new site against your old one, and make sure your site is mobile friendly: check that text is readable, that clickable elements are set up correctly and that your structured data has been reviewed.

For performance monitoring, looking at the competitive landscape is useful, as is checking organic traffic over time, conversion rate and engagement metrics (hence having your UX team involved).

Protip 4, celebrate success! Migrations can take ages, so reward yourself.

Structured Data Explained with Fili Wiese of Search Brothers

Fili is an ex-Googler, co-founder of the Search Brothers agency alongside Kaspar Szymanski, a DeepCrawl CAB and a well-known SEO expert. His session reviewed the impact of structured data on Google SERPs.

The perks of structured data

Structured data helps with click-through rate (CTR), which means it also helps drive more people to your site. It can also be used to reduce duplicate content issues by telling the big G that similar data relates to separate products, giving Google better context about your site.

Prioritise your time with SEO areas that matter

- WebPage: WordPress uses it, but none of the search engines do

- hAtom (microformats.org markup): this often comes from WordPress too, and you can safely remove it and replace it with other structured data, such as breadcrumbs, then update your schema.org markup. Fili suggests you ignore WebPage and hAtom

- Google Tag Manager: injecting schema into the page through JavaScript is a good way to test it, but it’s not a long-term solution, as every time Google crawls the page it has to render the JS, which creates instability you can’t afford

- The same goes for Data Highlighter (part of Google Search Console), which is great for testing but not for the long term: only Google knows about it, so it doesn’t work for other search engines like Bing or Baidu. If you do use it for testing, don’t forget to remove it afterwards

- Put schema markup inline in the HTML to avoid duplication
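Serving the markup in the page’s HTML source, rather than injecting it with GTM or relying on Data Highlighter, could look like this server-side sketch that renders a JSON-LD block. JSON-LD is one way to do this (microdata inline in the HTML is another); the product details are hypothetical.

```python
import json


def json_ld_script(data):
    """Serialise schema.org data as a JSON-LD <script> block for the page's HTML."""
    payload = json.dumps(data, ensure_ascii=False, indent=2)
    # "\/" is a valid JSON escape for "/", so this keeps the data identical while
    # stopping a value that contains "</script>" from closing the tag early
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical product, for illustration only
product = {
    "@context": "https://schema.org",
    "@type": "Product",
    "name": "Example Trail Shoe",
    "sku": "TS-001",
    "offers": {
        "@type": "Offer",
        "price": "89.99",
        "priceCurrency": "GBP",
        "availability": "https://schema.org/InStock",
    },
}
print(json_ld_script(product))
```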

Bing supports JSON-LD and even has a new JSON-LD validator tool. But the tool is far from ready: it’s very buggy and only picks up the first 8 or so schemas it finds, even if there are 200 on your page!

It’s worth having a look at pending.schema.org, where the community takes recommendations for future schema; this is quite powerful.

Base guidelines

Have a unique selling proposition and add value for the user from a content perspective. Don’t mark up hidden or misleading content; Google doesn’t like this, for example when a website has a review in the footer of a page that isn’t a true representation of the content. To avoid no-nos like this, it’s important to be aware of Google’s Webmaster Guidelines. Another common mistake is adding structured data and then blocking the marked-up pages in your robots.txt. It sounds simple, but people do it a lot, and if you do it, Google can’t see the markup!

Do use JSONLint to check that your JSON is valid. Avoid mismatches: as SEOs we need to check these things ourselves as well as using tools, including checking for mobile/desktop parity. This will only become more important as we move towards the Mobile-first Index.
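JSONLint checks syntax; to automate the parity check as well, you could extract the JSON-LD from the desktop and mobile versions of a page and compare them. A standard-library sketch, assuming you’ve already fetched both HTML versions; it only covers JSON-LD (not microdata), and the sample markup is hypothetical.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._buffer = None  # list of text chunks while inside a JSON-LD script

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._buffer = []

    def handle_data(self, data):
        if self._buffer is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._buffer is not None:
            self.blocks.append("".join(self._buffer))
            self._buffer = None


def json_ld_items(html):
    """Parse each JSON-LD block; return (parsed items, JSON error messages)."""
    extractor = JsonLdExtractor()
    extractor.feed(html)
    extractor.close()
    items, errors = [], []
    for raw in extractor.blocks:
        try:
            items.append(json.loads(raw))
        except json.JSONDecodeError as exc:
            errors.append(str(exc))
    return items, errors


def parity_issues(desktop_html, mobile_html):
    """Report invalid JSON-LD and any difference between desktop and mobile markup."""
    desktop, desktop_errors = json_ld_items(desktop_html)
    mobile, mobile_errors = json_ld_items(mobile_html)
    issues = [f"desktop: invalid JSON-LD ({e})" for e in desktop_errors]
    issues += [f"mobile: invalid JSON-LD ({e})" for e in mobile_errors]

    def normalise(items):
        # Key order doesn't matter in JSON, so compare canonical serialisations
        return sorted(json.dumps(item, sort_keys=True) for item in items)

    if normalise(desktop) != normalise(mobile):
        issues.append("structured data differs between desktop and mobile")
    return issues


desktop = '<script type="application/ld+json">{"@type": "Product", "name": "Shoe"}</script>'
mobile = "<p>Shoe</p>"  # hypothetical mobile template that dropped the markup
print(parity_issues(desktop, mobile))
```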

Things to remember about structured data

- Can have a positive impact on CTR, though it doesn’t directly provide ranking boosts

- Can give important additional context to information for Google to better understand your site

- Isn’t guaranteed to be used by Google

- Helps Google de-dupe content

Entities, Search and RankBrain: How It Works and Why It Matters with Kristine Schachinger of Sites Without Walls

Kristine is the founder of Sites Without Walls, a well-known writer on the SEO circuit and a speaker at events like SMX Advanced and SMX East, which is why it came as a surprise to many folks in her audience that this was her first time speaking on this side of the pond. And she smashed it! She gave us an overview of Natural Language Processing (NLP) algorithms, the Knowledge Graph (not the knowledge graphs we SEOs are busy optimising for), Google’s Wonder Wheel and more.

Oh how the web has changed

Kristine walked us through the beginnings of Google and the paper its founders published, “The Anatomy of a Large-Scale Hypertextual Web Search Engine”, before Google was even online. Fast forward to today and there is so much data out there! Did you know there are 63,000 searches per second?

A history of Google

Google was founded on unstructured data, which is just text-based data. This basically involved putting keywords in the title and keywords everywhere (gone are the days of those black hat rankings). But as queries moved up into the trillions, this kind of system quickly stopped making sense, and data needed to be structured. So Google moved from relational databases to knowledge graphs, and we entered the era of semantic search, which gives meaning to what is on your page. This helps the search engine derive meaning from your page and understand intent.

Google shifted from a ‘bag of words’ approach to ‘things’, aka structured data, where things are known objects with known or learned relationships. The Wonder Wheel helped show how things are connected. It’s worth distinguishing between knowledge graphs, which are based on known relationships, and THE Knowledge Graph, which is Google’s database. The latter enables you to search for things, people or places that Google knows about, like landmarks, celebrities, cities, sports teams etc. Basically NOUNS: nouns = entities.

Where Google is headed

Google is moving towards entity search and, from there, NLP; you can use Google’s Knowledge Graph developer API to look up which entities are in the Knowledge Graph. Answers from the Knowledge Graph include things like direct answers, featured snippets and rich lists. Hummingbird helped move search from strings to things and made search faster and better, as it added a semantic layer of meaning and understanding. For example, it recognises that words placed next to each other probably mean something together, like ‘ice’ and ‘t’ next to each other most likely signifying Ice T.

But as Google doesn’t process information the way we talk, it needs an interpreter: structured data and schema. Page markup allows engines to better understand what a page is about. JSON-LD is the recommended schema format; one of its benefits is that it’s separate from your HTML structure, so it’s easier to write, implement and maintain, as it sits inside your page in its own script block rather than being woven through your HTML. For best practices, use Google’s guides and schema.org to find everything that is supported. Google makes it simple to understand what it supports, as well as what causes manual actions, such as when your JSON-LD doesn’t match what’s on your (e.g. WordPress) page, which Google sees as cloaking. And for unfamiliar queries, we now have RankBrain, which is useful when the meaning of entities is unknown.

Power From What Lies Beneath… The Iceberg Approach to SEO with Dawn Anderson of Move it Marketing

Dawn is the Managing Director of Move it Marketing, an Associate Lecturer on Digital Marketing and Marketing Analytics at MMU and a DeepCrawl CAB. Her session focused on the evolution of information retrieval and the rise of mobile search.

Takin it to the (search) meta!

Dawn walked us through relevance theory, the many layers of the searcher’s mindset, and how that relates to what Google is trying to achieve with its algorithms. She introduced many of us to Mizzaro’s Framework for Relevance, which relates user problems, time and components, and its relationship to SEO. She also outlined Matt Cutts’s work in the area of information retrieval, as well as Andrei Broder’s. Andrei contributed a lot, like Matt, but didn’t speak to SEOs directly; he made his mark with ‘assistive AI’. If you’ve ever come across the terms ‘informational’, ‘transactional’ and ‘navigational’ in relation to search, these are the names of the types of queries people use, and they were coined by Andrei.

Interested in learning more? Here’s her recommended read: A Taxonomy of Web Search.

More choice more problems

We’re living in an age of ubiquitous computing: the average person has more computing power in their phone than a whole room of computers used to provide. Though an incredible amount of data is available to us, it also means we are weighed down by it. Think about Choice Theory, the notion that the more choice we have, the less we buy, as we can only really cope with about 7 items at a time. That’s why we need to think about SEO like an iceberg.

The Iceberg Syndrome

People won’t scroll beneath the fold, and it’s not simply because they think what’s below is more of the same: it’s because of ‘iceberg syndrome’. If you have elements like ‘view more’, you need to help people understand what’s behind them, so they actually go for it. The combination of iceberg syndrome and the reasonable surfer model means Google will follow the links people are likely to click, using predictive JS. So be careful how you code things, especially on mobile.

The gist of it: less is more. Don’t give people War and Peace on an eCommerce page 🙂

Work with people’s low attention spans: use informational clusters and working-memory chunking, i.e. clustering related things together. It is important for your search UI to be agile and able to be redesigned over time, with adaptive content and responsive design. This matters even more on mobile, where your UI can easily overwhelm the mobile-first user in what Dawn refers to as an ‘attention economy’. And remember to make your navigation as clear for bots, and as easy to use for humans, as possible.

Do:

- Be concise and use directional heuristics

- Use H2s and H3s in your table of contents, as they help to semi-structure data

- Remember the Gestalt principles: our minds are thought to fill in gaps. Think about chunking, like how you split the phone number on your business card into chunks rather than writing it out all together

- Avoid long-form content on things like eCommerce pages; that said, don’t just have pictures. Be interactive, be adaptive, think Gestalt!

- Use H2/H3 headers, XML sitemaps and ordered lists, as these all add structure and power to a page

- Use jump pages: these are really powerful. Navigation is king for humans and search engines, so use different forms of navigation, and be careful with pruning so you don’t lose the topical value and relatedness of your site
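To put the H2/H3 table of contents tip into practice, a jump-link contents list can be generated from a page’s own headings. This sketch nests H3s under the preceding H2; the `slugify` id scheme is a hypothetical choice for headings without an `id`, so your page’s heading ids would need to match it.

```python
import re
from html import escape
from html.parser import HTMLParser


def slugify(text):
    """Hypothetical id scheme for headings without an id attribute."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


class HeadingCollector(HTMLParser):
    """Collect (level, text, anchor id) for every <h2> and <h3> on a page."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._level, self._id, self._text = None, None, []

    def handle_starttag(self, tag, attrs):
        if tag in ("h2", "h3"):
            self._level, self._id, self._text = int(tag[1]), dict(attrs).get("id"), []

    def handle_data(self, data):
        if self._level:
            self._text.append(data)

    def handle_endtag(self, tag):
        if self._level and tag == f"h{self._level}":
            text = " ".join("".join(self._text).split())  # collapse whitespace
            self.headings.append((self._level, text, self._id or slugify(text)))
            self._level = None


def table_of_contents(html):
    """Nested <ol> of jump links: H3s are grouped under the preceding H2."""
    collector = HeadingCollector()
    collector.feed(html)
    groups = []  # [(h2 heading, [h3 headings])]; a leading H3 becomes top-level
    for heading in collector.headings:
        if heading[0] == 2 or not groups:
            groups.append((heading, []))
        else:
            groups[-1][1].append(heading)

    def link(heading):
        _, text, anchor = heading
        return f'<a href="#{escape(anchor)}">{escape(text)}</a>'

    out = ["<ol>"]
    for parent, children in groups:
        out.append(f"  <li>{link(parent)}")
        if children:
            out.append("    <ol>")
            out.extend(f"      <li>{link(child)}</li>" for child in children)
            out.append("    </ol>")
        out.append("  </li>")
    out.append("</ol>")
    return "\n".join(out)


page = "<h2 id='menu'>Our Menu</h2><h3>Pizza</h3><h3>Pasta &amp; Sauces</h3><h2>Delivery Areas</h2>"
print(table_of_contents(page))
```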

How to Optimise Internal Site Search – Luke Carthy of Mayflex

Luke Carthy is a Digital Manager at Mayflex and, unbelievably, it was his first time speaking on the SEO circuit! He gave us juicy examples of internal search SEO done badly, and explained why internal search SEO is essential.

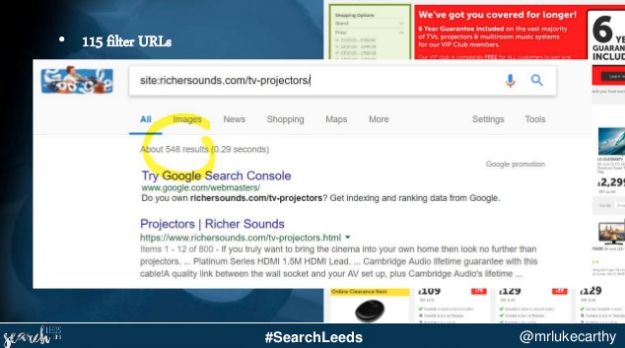

Luke gave us tips for comparing search categories, using the site: operator to do a deep dive and get an indication of how many URLs are indexed in Google search.

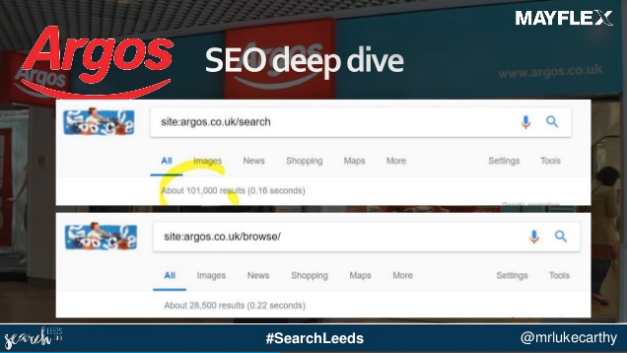

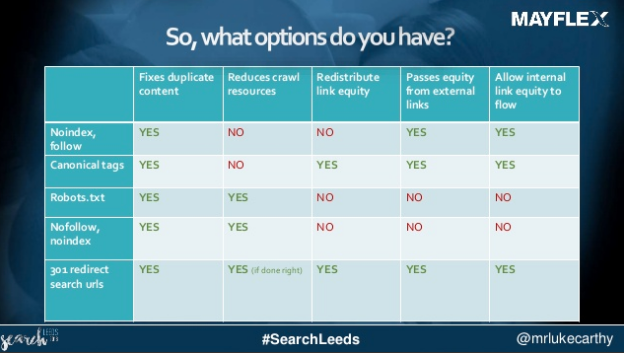

He also covered comparing search to browse and assessing your options.

Luke walked us through how best to approach SEO issues like duplicate content and redistributing link equity, and how equity passes through internal versus external links, as seen below.

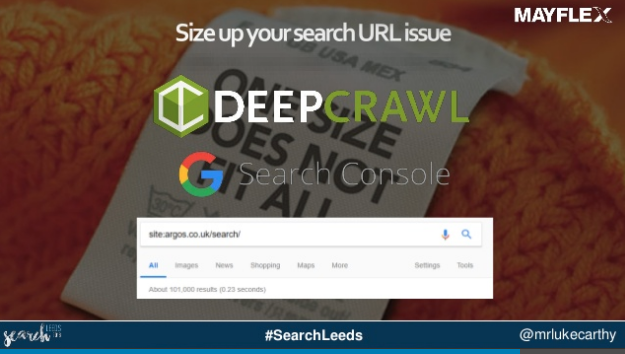

He also recommended using Ahrefs to see rankings/backlinks, and using DeepCrawl and Google Search Console for sizing up your search URL issues. We’d recommend using a combination of both, as you can manually upload backlinks from any provider into DeepCrawl, and/or avail of our free direct integrations with Majestic, Google Analytics, Google Search Console, Logzio, Splunk to get a really comprehensive view of your individual URLs and site structure as a whole!

Luke pointed out that just because search URLs aren’t in the index doesn’t mean Google hasn’t found out about them, which is why there’s a discrepancy between how many links are in Google Search Console and how many are getting indexed! Identify the link equity flowing to search URLs using a combination of Ahrefs and Search Console, and look at URLs that are 301-ing and have worthy backlinks.

Do:

- Use GSC to identify which URLs get organic traffic and generate sales; there’s a difference between URLs getting backlinks and URLs getting traffic, which could come down to the keywords being used!

- Prepare to lose LOADS of URLs (provided they’re not precious to your site!) with a combination of nofollow and noindex (and feel free to test your robots.txt with DeepCrawl!)

- Swap out internal links pointing to search URLs, to make sure you pass the equity to the right place

- Produce more content where necessary: if certain content proves successful and you need an alternative landing page that doesn’t exist yet, make one! If something less than ideal is ranking, create meaningful content to replace it

Don’t:

- Be afraid to massacre millions of search URLs at once
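Before removing search URLs, it helps to know which pages still link to them, so you can swap those links out first. This sketch assumes a crawl export of (source, target) internal link pairs, and that search URLs live under `/search` or carry a `q` parameter; both are hypothetical, so adjust them to your site.

```python
from collections import Counter
from urllib.parse import parse_qs, urlsplit

# Hypothetical: adjust to however your site's internal search URLs look
SEARCH_PATHS = {"/search"}
SEARCH_PARAMS = {"q"}


def is_search_url(url):
    """True if the URL looks like an internal site search result page."""
    parts = urlsplit(url)
    path = parts.path.rstrip("/") or "/"
    query = parse_qs(parts.query)
    return path in SEARCH_PATHS or any(param in query for param in SEARCH_PARAMS)


def pages_linking_to_search(links):
    """links: (source page, target URL) pairs from a crawl; sources by link count."""
    counts = Counter(source for source, target in links if is_search_url(target))
    return counts.most_common()


# Hypothetical crawl data, for illustration only
links = [
    ("/", "/search?q=boots"),
    ("/", "/products/boots"),
    ("/blog/guide", "/search?q=cable+ties&page=2"),
    ("/blog/guide", "/search/?q=patch+leads"),
]
print(pages_linking_to_search(links))  # [('/blog/guide', 2), ('/', 1)]
```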

Conflicting Website Signals and Confused Search Engines by Rachel Costello of DeepCrawl

Rachel is the Technical SEO Executive at DeepCrawl, and frequently contributes to the SEO community with content like this Mobile First white paper.

She started off by discussing how Google tries to make sense of the chaos out there, and how it isn’t perfect. That’s why we need to help Google read our sites better, so it can serve better content to our users. As John Mueller said in his response to my tweet:

“Don’t confuse #Google unnecessarily.” sums up most of SEO, right? 🙂 https://t.co/aSuPJ5eCeN

— John ☆.o(≧▽≦)o.☆ (@JohnMu) June 4, 2018

Things to remember

- Canonical tags are a signal, NOT a directive: if Google has a better idea, it will override your choice

- Google doesn’t always do as you tell it to, as is the case with canonicals

- Embed the signals Google uses for URL selection and avoid duplication

- Make sure internal links point to canonical URLs

- Parameter handling is a stronger signal for Google than a canonical itself, as it really tells Google what to crawl or not crawl

- Bear in mind that just because something is in a sitemap doesn’t mean it’s going to be indexed!

- Different signals have different amounts of power, so choose yours wisely

- Don’t waste crawl budget

- The key signals are actually strongest in combination

- Test your website’s signals to find inconsistencies, such as redirects and canonicals pointing to broken pages, or broken pages in sitemaps. Check search impression distribution with Google Analytics and GSC to find non-indexable pages that are getting impressions, and pages you didn’t plan to have indexed that found their way into the SERPs. If people are landing on them, searching for them or being served them because they’re useful, fix them and make them indexable if they’re worth it!

- Fix duplicate pages that are missing canonical tags
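Several of these checks can be run over crawl data in one pass. A sketch with a hypothetical data shape (status, declared canonical and a content hash per URL, plus internal link pairs); a real crawler export will look different, and a content hash only catches exact duplicates.

```python
from collections import defaultdict


def canonical_issues(pages, links):
    """Flag conflicting canonical signals in crawl data.

    pages: URL -> {"status": int, "canonical": str or None, "content_hash": str}
    links: (source page, target URL) internal link pairs
    """
    issues = []
    for url, info in pages.items():
        canonical = info.get("canonical")
        # A canonical pointing at a non-200 (or uncrawled) URL sends a mixed signal
        if canonical and pages.get(canonical, {}).get("status") != 200:
            issues.append(("canonical points to non-200 URL", url))

    # Pages with identical content where a copy has no canonical tag at all
    by_hash = defaultdict(list)
    for url, info in pages.items():
        by_hash[info["content_hash"]].append(url)
    for urls in by_hash.values():
        if len(urls) > 1:
            issues.extend(
                ("duplicate without canonical", u) for u in urls if not pages[u].get("canonical")
            )

    # Internal links should point at the canonical version of a page
    for source, target in links:
        canonical = pages.get(target, {}).get("canonical")
        if canonical and canonical != target:
            issues.append(("internal link to non-canonical URL", f"{source} -> {target}"))
    return sorted(issues)


# Hypothetical crawl data, for illustration only
pages = {
    "/shoes": {"status": 200, "canonical": "/shoes", "content_hash": "a"},
    "/shoes?colour=red": {"status": 200, "canonical": "/shoes", "content_hash": "a"},
    "/boots": {"status": 200, "canonical": "/boots-old", "content_hash": "b"},
    "/boots-old": {"status": 404, "canonical": None, "content_hash": "c"},
    "/sale": {"status": 200, "canonical": None, "content_hash": "d"},
    "/sale?sort=price": {"status": 200, "canonical": None, "content_hash": "d"},
}
links = [("/", "/shoes?colour=red"), ("/", "/boots")]
for issue in canonical_issues(pages, links):
    print(issue)
```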

How to Audit Your Site for Security with Julia Logan of IrishWonder

Julia from IrishWonder SEO Consulting walked us through where websites are vulnerable to hacking and security issues, as well as providing actionable tips on how to perform security audits to keep your site safe and secure.

Why being proactive is key

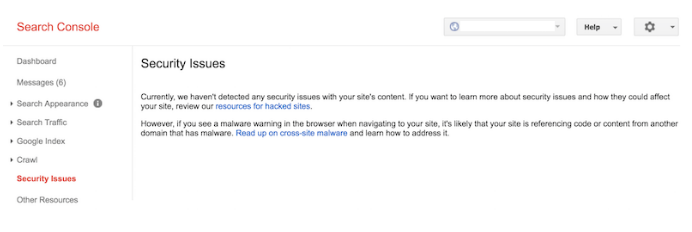

By the time you see a security warning in Google Search Console, it could be too late to save your website.

This is why you need to be proactive when it comes to keeping your site secure. You need to incorporate basic security checks into your SEO audits to make sure you’re regularly up to date on your site’s status and safety.

Why do websites get hacked?

Some of the most common reasons for website hacking include:

- Wanting to feed off the rankings and traffic from well-established domains. Parasite pages are created and attached to high quality websites and are used to funnel authority back to hacker sites

- For the purposes of crypto mining, which involves infecting website users with malware

- Hackers try to gain access to multiple sites by targeting a particular CMS. For example, WordPress has around a 53% share of CMS use and is heavily targeted by hackers

How to perform a security audit

You need to monitor your website and any hacked content that may have been added. Take responsibility for your own vulnerability or security issues, as Google won’t be able to save you.

You are the only person responsible for your site’s security ☝️ @IrishWonder at the #SearchLeeds #SEO conference organised by @Branded_3 pic.twitter.com/391YHFp39l

— Omi Sido (@OmiSido) June 15, 2018

Here are Julia’s tips on performing security health checks:

- Check what is being indexed: Analyse what is appearing in the SERPs for your website that shouldn’t be, whether that’s parasite pages or your own data leaks (such as content containing passwords or customer information)

- Investigate known vulnerabilities: Analyse any known issues with the platforms, plugins and technologies you’re using which may have existing vulnerabilities that have been experienced by you or others

- Audit your plugins: If you have so many plugins that you don’t remember what each one does, you’re doing something wrong. The more plugins you have, the more complicated a system gets, meaning it is more likely to fail and succumb to security issues. Make sure you remove any unused plugins

- Review system access: Does everyone who has access to your system really need it? For example, does a copywriter need full admin access to the entire system? The more people with access, the more vulnerable your setup could be

- Validate your SSL certificate: Having an SSL certificate may mean you have a secure connection, but you can still be hacked even if you have one. Check for any issues and make sure it is installed correctly. And remember: your SSL certificate is only as secure as the resources you link to

- Look for significant speed regressions: If your site has become noticeably slower without any changes being made on your end, this could be a sign of hacking

- Analyse your log files: Check your log files for any unusual activity or URLs being accessed that you don’t recognise

- Monitor your backlinks: Use tools like Majestic to see your indexed and linked pages, as this will flag any parasite pages on your site that are being linked to by hacker sites

- Assess Google Search Console data: Look out for any unusual queries, URLs or crawl errors that have appeared, as this could also be a sign of hacking
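For the log file check, a small script can surface pages being served that you don’t recognise. This sketch assumes your server writes the common Apache/Nginx “combined” log format and that you have a set of known paths for your site (for example, from a crawl). The regex and the `unknown_paths` helper are illustrative assumptions, not a standard tool, and real logs may need a more robust parser.

```python
import re
from collections import Counter

# Matches the request and status in a Common/Combined Log Format line, e.g.
# 1.2.3.4 - - [15/Jun/2018:10:00:00 +0000] "GET /page HTTP/1.1" 200 512 "-" "UA"
REQUEST_RE = re.compile(r'"(?P<method>[A-Z]+) (?P<path>\S+) HTTP/[\d.]+" (?P<status>\d{3})')


def unknown_paths(log_lines, known_paths):
    """Count successful requests for paths that aren't in your known URL set."""
    counts = Counter()
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if not match:
            continue  # skip malformed or unrelated lines
        path = match.group("path").split("?", 1)[0]  # ignore query strings
        # Only 2xx responses: pages your server is actually serving.
        if match.group("status").startswith("2") and path not in known_paths:
            counts[path] += 1
    return counts
```

Filtering to 2xx responses is a design choice: those are pages your server is actually serving, which is where injected parasite pages would show up. Counting 404s separately could also reveal bots probing for known plugin vulnerabilities.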

What to do when you find out you’ve been hacked

- Change every password across your system

- Apply any available fixes, such as software updates for plugins and themes, and remove vulnerable elements from your site if there’s no existing fix

- Check whether your mail server is affected; if it is, you won’t be able to send out your own legitimate emails

- Clean up the SERPs by re-submitting your XML sitemap with only clean URLs within it, and remove any persistent problem URLs through Google Search Console

- Don’t delete anything before you find out what’s happened. If you delete something you identify as a hack without investigating further, you won’t be able to understand how it happened or the full scale of your site’s vulnerability, which means the hackers can come back and do the same thing again

- Act quickly, but don’t panic!
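For the SERP clean-up step, rebuilding the XML sitemap from URLs you’ve verified as clean can be scripted. This is a minimal sketch using only Python’s standard library and the sitemaps.org 0.9 namespace; the `build_sitemap` name and the idea of passing in a `suspicious` set of URLs are illustrative assumptions.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
# Serialise the sitemap namespace as the default xmlns rather than "ns0:".
ET.register_namespace("", SITEMAP_NS)


def build_sitemap(urls, suspicious):
    """Return sitemap XML listing only URLs that weren't flagged as suspicious."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for url in sorted(set(urls) - set(suspicious)):
        entry = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(entry, f"{{{SITEMAP_NS}}}loc").text = url
    return ET.tostring(urlset, encoding="unicode")
```

ElementTree escapes characters such as `&` in URLs, which the sitemap protocol requires. The returned string has no XML declaration, so prepend `<?xml version="1.0" encoding="UTF-8"?>` when you save the file before re-submitting it.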

Important things to remember about security

- Linking to HTTP could mean a gap in your implementation. Check outdated HTTP links to resources such as Google Fonts which should have HTTPS links

- Always have a clean backup. This needs to be done before you even get hacked. If you find that your site is clean after you do a health audit, make a clean backup and store it safely on a separate server from your site, so it’s there if you need it for any future security breaches
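To find outdated HTTP links like the Google Fonts example, you could scan your rendered HTML for resources loaded over plain HTTP. This sketch uses Python’s built-in `html.parser`; the set of tags and attributes it checks is an assumption you’d extend for your own site (it doesn’t cover `srcset` or CSS `url()` references, for instance), and ordinary `<a>` links are deliberately ignored.

```python
from html.parser import HTMLParser

# Tag -> attribute that loads a sub-resource (not exhaustive; an assumption).
RESOURCE_ATTRS = {
    "script": "src",
    "img": "src",
    "iframe": "src",
    "source": "src",
    "link": "href",  # stylesheets, web fonts, icons
}


class InsecureResourceFinder(HTMLParser):
    """Collect resource URLs that are requested over plain HTTP."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        wanted = RESOURCE_ATTRS.get(tag)
        if wanted is None:
            return
        for name, value in attrs:
            if name == wanted and value and value.lower().startswith("http://"):
                self.insecure.append(value)


def find_insecure_resources(html):
    """Return HTTP resource URLs in document order."""
    finder = InsecureResourceFinder()
    finder.feed(html)
    finder.close()
    return finder.insecure
```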

Julia recommends the following tools for security auditing: WPScan, Pentest-Tools, Drupal Security Scan, Sucuri and Qualys SSL Labs. Remember that some custom platforms have no tools that work for them and need manual checks.

Hacky ways of pinching keyword insights from your competitors with Kelvin Newman of BrightonSEO

Kelvin Newman is the founder of Rough Agenda and of the biggest search event in the UK, probably Europe, and hey, maybe even THE WORLD: BrightonSEO. In this presentation Kelvin shared practical ways of coming up with keywords to target, as well as what not to do.

More is more

We are moving away from the old model, which asked: is the search query on the page, and does the page deserve to rank? If you got those right, everything was OK. The new model asks: does the page contain the search query, does it also include the phrases that rank for that term, and does it deserve to rank?

Kelvin reminds us not to be lazy. Don’t just write for your users and assume that’ll cover your keywords! Instead, understand what users and competitors are doing. Do more than add key phrases: include all the phrases and words users would expect.
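One way to check the “does it also include the phrases that rank for that term” part of the new model is a simple coverage check. This sketch assumes you’ve already gathered a list of expected phrases (for example, from the pages ranking for your term) and reports which ones your page text doesn’t contain. `missing_phrases` is a hypothetical helper, and whole-word matching without stemming or synonyms is a deliberate simplification.

```python
import re


def normalise(text):
    """Lowercase and reduce text to single-space-separated words."""
    return " ".join(re.findall(r"[a-z0-9]+", text.lower()))


def missing_phrases(page_text, expected_phrases):
    """Return the expected phrases that don't appear in the page as whole words."""
    # Pad with spaces so "boot" doesn't match inside "boots".
    page = f" {normalise(page_text)} "
    return [p for p in expected_phrases if f" {normalise(p)} " not in page]
```

For example, on a page reading “Our waterproof hiking boots are made from full-grain leather.”, checking `["hiking boots", "full grain leather", "ankle support"]` returns `["ankle support"]`, since punctuation such as the hyphen is normalised away.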

Methods we can all use

Use Textise.net to get text-only versions of competitors’ pages, then look at the overlap of words or phrases they’re using that you’re not. This works best with three competitors (think of a Venn diagram and how well it would work out with more or fewer), so you can see where the competitors overlap and you don’t!
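The three-competitor Venn idea comes down to set operations on each page’s vocabulary. Assuming you’ve copied the text-only version of each page into strings, this sketch counts how many competitors use each word, then reports the words all of them use but you don’t, and the words at least two of them use but you don’t. `competitor_gaps` is a hypothetical helper; splitting into single words with a tiny stop-word list is a simplifying assumption, and real keyword research would look at phrases too.

```python
import re
from collections import Counter

# A deliberately tiny stop-word list (assumption); extend it for real use.
STOP_WORDS = {"a", "an", "and", "are", "for", "in", "is", "of", "on", "our", "the", "to", "with", "your"}


def terms(text):
    """Return the set of lowercase words in a page, minus stop words."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOP_WORDS}


def competitor_gaps(your_text, competitor_texts):
    """Find words competitors share that your page doesn't use."""
    yours = terms(your_text)
    competitor_sets = [terms(t) for t in competitor_texts]
    # Count how many competitors use each word (each page counts once per word).
    usage = Counter(word for words in competitor_sets for word in words)
    n = len(competitor_sets)
    return {
        # Centre of the Venn diagram: every competitor uses it, you don't.
        "used_by_all": {w for w, c in usage.items() if c == n} - yours,
        # Any overlap between at least two competitors that you're missing.
        "used_by_two_or_more": {w for w, c in usage.items() if c >= 2} - yours,
    }
```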

Try running Twitter feeds and Pinterest content through word clouds to find great adjectives.

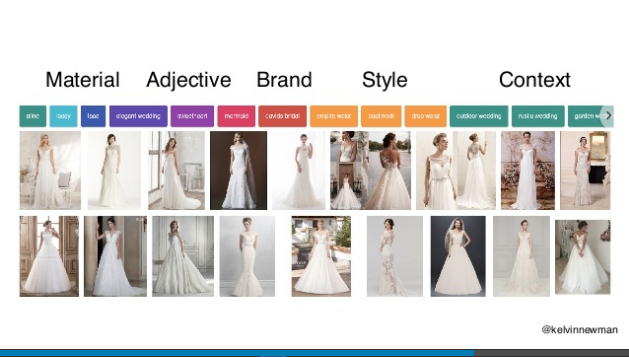

Use image search to see what else comes up: things that won’t show up using tools like Answer the Public alone. By going a few levels deep and even digging into how people’s search queries are categorised, such as by colour or material for clothing, you can find even more queries to optimise for.
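Once you’ve found the categories people search by (colours and materials, in the clothing example), you can expand a head term into candidate long-tail queries to research further. This is a hypothetical sketch: the modifier lists you pass in are up to you, and the output is a list of ideas to validate in your keyword tools, not evidence of search volume.

```python
from itertools import product


def expand_queries(head_term, *modifier_groups):
    """Combine a head term with one modifier from each group (hypothetical helper)."""
    return [" ".join([*combo, head_term]) for combo in product(*modifier_groups)]
```

For example, `expand_queries("dress", ["red", "black"], ["linen", "silk"])` returns `["red linen dress", "red silk dress", "black linen dress", "black silk dress"]`.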

Customer-centric search: serving people better for competitive advantage with Stephen Kenwright of Branded3

Stephen is the Director of Search at award-winning agency Branded3, and the founder of SearchLeeds. His talk encouraged us to help our businesses thrive by putting customers at their heart, and showed how optimising for those customers helps us win in search.

Let’s get customer-centric

Stephen’s advice:

- Don’t just create content that’s good for links; put users and customers at the heart of what you do

- Remember that users rely on authorship and freshness when fact-checking sources

- Embrace the power of YouTube: it’s a big search engine in its own right, and people are even moving away from the BBC to search on YouTube instead

- Metadata: keep up with the changes in length that Google makes now and again

- HTTPS: people and Google like secure websites, and people convert better on them. Two-thirds of people won’t give any information to a website they don’t see as secure, i.e. one without a padlock

- AMP is this year’s Google+: it’s the thing Google wants but nobody else does, so don’t worry about it, just make websites faster!

- Tool tips: use GTmetrix and Test My Site to see how fast your site is, and compare it against competitors on speed metrics as well as security (such as which protocol they’re on) to build what he calls a ‘strategy canvas’ of gaps and opportunities

- Don’t hate on GDPR; use its data to your advantage. Now is the time to work out who your fans are by looking at who has re-opted in, so you can create more user-centric, informed marketing and cater to them as an audience

- SEO is a business case, not a channel: it’s affected by and intersects with every other department in the business, including IT, PR, PPC, content marketing and sales
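For the metadata point, a quick length check across your pages can flag titles and descriptions that are empty or likely to be truncated. Google actually truncates snippets by pixel width and changes its display lengths from time to time (which is exactly why Stephen says to keep up), so the character limits below are placeholder assumptions to update, not official values. `PageMeta` and `meta_length_issues` are hypothetical names.

```python
from dataclasses import dataclass

# Placeholder limits (assumption): Google truncates by pixel width and
# changes its display lengths now and again, so tune these yourself.
MAX_TITLE_CHARS = 60
MAX_DESCRIPTION_CHARS = 155


@dataclass
class PageMeta:
    url: str
    title: str
    description: str


def meta_length_issues(pages, max_title=MAX_TITLE_CHARS, max_desc=MAX_DESCRIPTION_CHARS):
    """Return (url, field, length) for every title/description that is empty or over its limit."""
    issues = []
    for page in pages:
        for field_name, value, limit in (
            ("title", page.title, max_title),
            ("description", page.description, max_desc),
        ):
            length = len(value.strip())
            if length == 0 or length > limit:
                issues.append((page.url, field_name, length))
    return issues
```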

Closing time

If you made it this far into this beast of a recap post, I salute you and I thank you.

If you were there on the day, I hope you had as much fun as we did and were able to stop by the DeepCrawl bar.

Oh, I do love me a @deepcrawl conference bar…. #searchleeds pic.twitter.com/5jVWyKMSPU

— Stacey MacNaught (@staceycav) June 14, 2018

If you were not able to attend SearchLeeds, I hope you were able to take some value from this piece that you can apply in your SEO adventures, big and small.

Last but by no means least, thank you to Stephen, Charlie, and the entire team at Branded3 for putting on yet another killer event. To quote Arnie, “I’ll be back!”
