Ensuring that search engines can easily crawl and understand your eCommerce website is crucial to optimizing your site and putting your pages in the best position to rank. We regularly see a handful of common issues on eCommerce sites that are easy to fix and that, once fixed, stop your site from being bloated by unnecessary, non-indexable, and duplicate pages.

Correcting these 5 common issues will help search engines focus on the pages you want to be ranking.

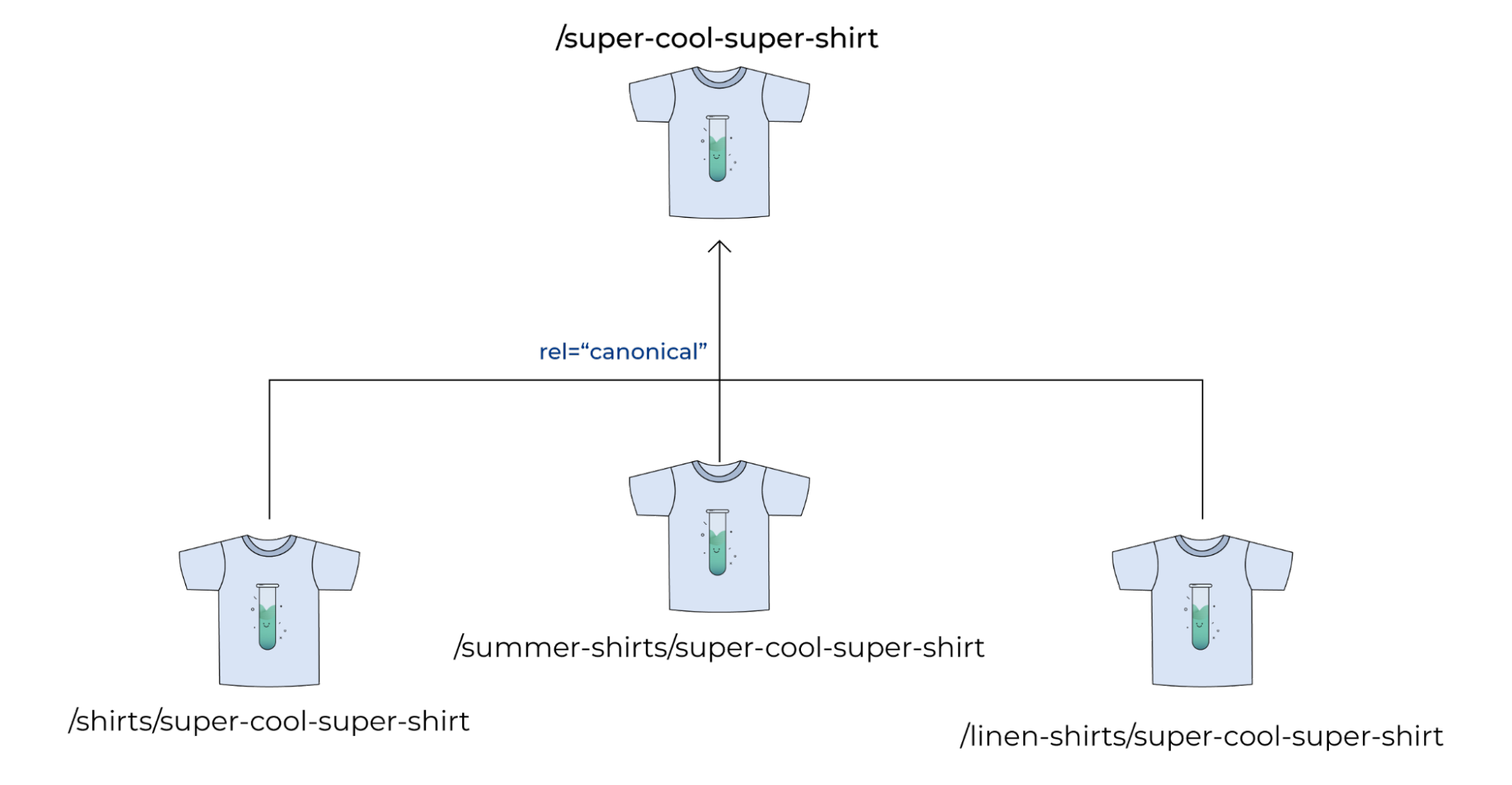

eCommerce SEO Mistake #1: Linking to canonicalized product pages

Many eCommerce websites have product URLs that contain the category the product belongs to. This is a common, and often default, setting on many popular CMSs. For example:

Product – 32kg Kettlebell

Simple URL – example.com/32kg-kettlebell

Category URL 1 – example.com/kettlebells/32kg-kettlebell

Category URL 2 – example.com/weights/32kg-kettlebell

Category URL 3 – example.com/home-gym/32kg-kettlebell

The multiple-category URLs often canonicalize back to the simple product URL to ensure there are not several URLs competing in the SERPs (you should always check that a canonical is in place). However, this still produces additional URLs to be crawled, when in fact only one is needed.

Here’s another example using super cool super shirts, showing how product category paths in URLs can create multiple URLs for a single product:

Across an entire product range, this can result in thousands of URLs being crawled unnecessarily. Most CMSs let you use the simple product URL instead of URLs that include the category path. This results in a much leaner website, and your internal links then point directly at the canonical URL instead of relying on canonical tags to consolidate value.

(N.B. keywords in URLs.)
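To see how much duplication category paths create on your own site, you can group crawled product URLs by the simple URL they collapse to. The sketch below is a minimal illustration, assuming (hypothetically) that the product slug is always the last path segment, as in the kettlebell example; the function names are made up for this example and your CMS's URL scheme may differ.

```python
from urllib.parse import urlsplit, urlunsplit


def simple_product_url(url: str) -> str:
    """Collapse a category-path product URL to its simple product URL.

    Assumes the product slug is the last path segment, e.g.
    /weights/32kg-kettlebell -> /32kg-kettlebell. Query strings and
    fragments are dropped.
    """
    parts = urlsplit(url)
    slug = parts.path.rstrip("/").rsplit("/", 1)[-1]
    return urlunsplit((parts.scheme, parts.netloc, "/" + slug, "", ""))


def duplicate_product_urls(urls: list[str]) -> dict[str, list[str]]:
    """Group crawled URLs by simple product URL, keeping only products
    that are reachable through more than one URL."""
    groups: dict[str, list[str]] = {}
    for url in urls:
        groups.setdefault(simple_product_url(url), []).append(url)
    return {simple: found for simple, found in groups.items() if len(found) > 1}


crawled = [
    "https://example.com/32kg-kettlebell",
    "https://example.com/kettlebells/32kg-kettlebell",
    "https://example.com/weights/32kg-kettlebell",
    "https://example.com/home-gym/32kg-kettlebell",
]
dupes = duplicate_product_urls(crawled)
print(dupes)
```

Here all four URLs collapse to one product, so three of them are extra URLs a crawler has to fetch for no benefit.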

eCommerce SEO Mistake #2: Keeping outdated pages live

For eCommerce websites that frequently change products, ensuring you have a solid out-of-stock (OOS) strategy in place is important to make sure your site is optimized for both customers and search engines.

Over time, discontinued or out-of-stock product pages can build up — resulting in a frustrating experience for customers if they reach those pages via search engines (or even worse, via your site itself!).

This can also bloat the number of pages that are being indexed and crawled. Ensuring you have a process to deal with discontinued and OOS products is crucial to keeping your site lean. The exact process you opt for will depend on the products you sell, your site, and your industry.

Generally speaking, we recommend an OOS strategy along the following lines:

Discontinued products

- If your discontinued product pages still have search volume (either branded or non-branded), keep the pages live and offer customers related products or an email sign-up form on the page.

- If these discontinued products do not have search volume, either redirect them to a relevant category (particularly if they have referring domains) or let them return a 404/410.

- Remove internal links to the products.

Out-of-stock products

- If your OOS product is coming back soon, you can either leave the page as-is or, ideally, add related product suggestions and/or an email sign-up form.

- If the product is permanently or semi-permanently OOS, you may want to 301 or 302 redirect this to a relevant category page. Ensure internal links to the OOS products are removed so customers do not accidentally land on the page.
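The strategy above is essentially a small decision tree, so it can be encoded as a function and applied across a whole product export. This is a hypothetical sketch: the `ProductPage` fields are assumptions standing in for data you would pull from your product feed, keyword research, and backlink tools, and the returned strings simply restate the recommendations above.

```python
from dataclasses import dataclass


@dataclass
class ProductPage:
    # Hypothetical fields; populate them from your own product and SEO data.
    url: str
    discontinued: bool
    has_search_volume: bool = False
    has_referring_domains: bool = False
    returning_soon: bool = False


def oos_action(page: ProductPage) -> tuple[str, bool]:
    """Return (recommended action, whether to remove internal links)."""
    if page.discontinued:
        # The discontinued-products list removes internal links in every case.
        if page.has_search_volume:
            return "keep live with related products or an email sign-up form", True
        if page.has_referring_domains:
            return "redirect to a relevant category", True
        return "redirect to a relevant category or return 404/410", True
    if page.returning_soon:
        return "keep live, ideally adding related products or an email sign-up form", False
    # Permanently or semi-permanently out of stock.
    return "301/302 redirect to a relevant category", True


old = ProductPage("https://example.com/old-kettlebell", discontinued=True,
                  has_referring_domains=True)
print(oos_action(old))
```

Keeping the rules in one place makes it easier to adjust them for your products and industry, since the exact process will differ from site to site.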

eCommerce SEO Mistake #3: Legacy redirects & 404s

Just like old product pages, redirects or 404s that are still being linked to internally can accumulate in large numbers over time, bloating the site and making it harder for customers and search engines to navigate your site.

Review your eCom site to ensure that redirects are not internally linked to. Every redirect hop slows down crawlers and increases the time it takes for pages to load, which can increase bounce rates and reduce conversion rates, in addition to bloating your site.

Identifying 404s is arguably even more important, so that search engine crawlers are not stopped in their tracks when crawling your site and customers do not hit a dead end. Redirecting legacy 404s and updating internal links to the new final destination (or removing the internal links to 404s if a 404 is indeed appropriate) helps keep search engines focused on the resolving pages you want to rank, and keeps customers focused on their goal (and more likely to convert).
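Once you have a crawl export, finding the links to fix is a matter of following each redirect chain to its final destination and flagging links that land on errors. The sketch below assumes three hypothetical inputs: `(source, target)` link pairs, a status code per URL, and a map from each redirecting URL to its `Location` target. URLs missing from the status map are treated as 200, and every 3xx URL is assumed to have an entry in the redirect map.

```python
def final_destination(url: str, redirects: dict[str, str], max_hops: int = 10) -> str:
    """Follow a redirect chain to the URL it finally resolves to."""
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError(f"redirect loop or overly long chain at {url}")
        seen.add(url)
    return url


def audit_internal_links(
    links: list[tuple[str, str]],
    status: dict[str, int],
    redirects: dict[str, str],
) -> list[tuple[str, str, str]]:
    """Flag internal links that point at redirects or error pages."""
    fixes = []
    for source, target in links:
        code = status.get(target, 200)
        if 300 <= code < 400:
            final = final_destination(target, redirects)
            if status.get(final, 200) in (404, 410):
                fixes.append((source, target, f"chain ends at error page {final}: fix or remove link"))
            else:
                fixes.append((source, target, f"link directly to {final}"))
        elif code in (404, 410):
            fixes.append((source, target, "redirect the 404 or remove the link"))
    return fixes


links = [
    ("/", "/old-kettlebells"),
    ("/", "/32kg-kettlebell"),
    ("/blog/home-gym", "/discontinued-mat"),
]
status = {"/old-kettlebells": 301, "/kettlebells-v2": 301, "/kettlebells": 200,
          "/32kg-kettlebell": 200, "/discontinued-mat": 404}
redirects = {"/old-kettlebells": "/kettlebells-v2", "/kettlebells-v2": "/kettlebells"}
for fix in audit_internal_links(links, status, redirects):
    print(fix)
```

Pointing links at the final destination removes both redirect hops in one change, rather than fixing one hop at a time.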

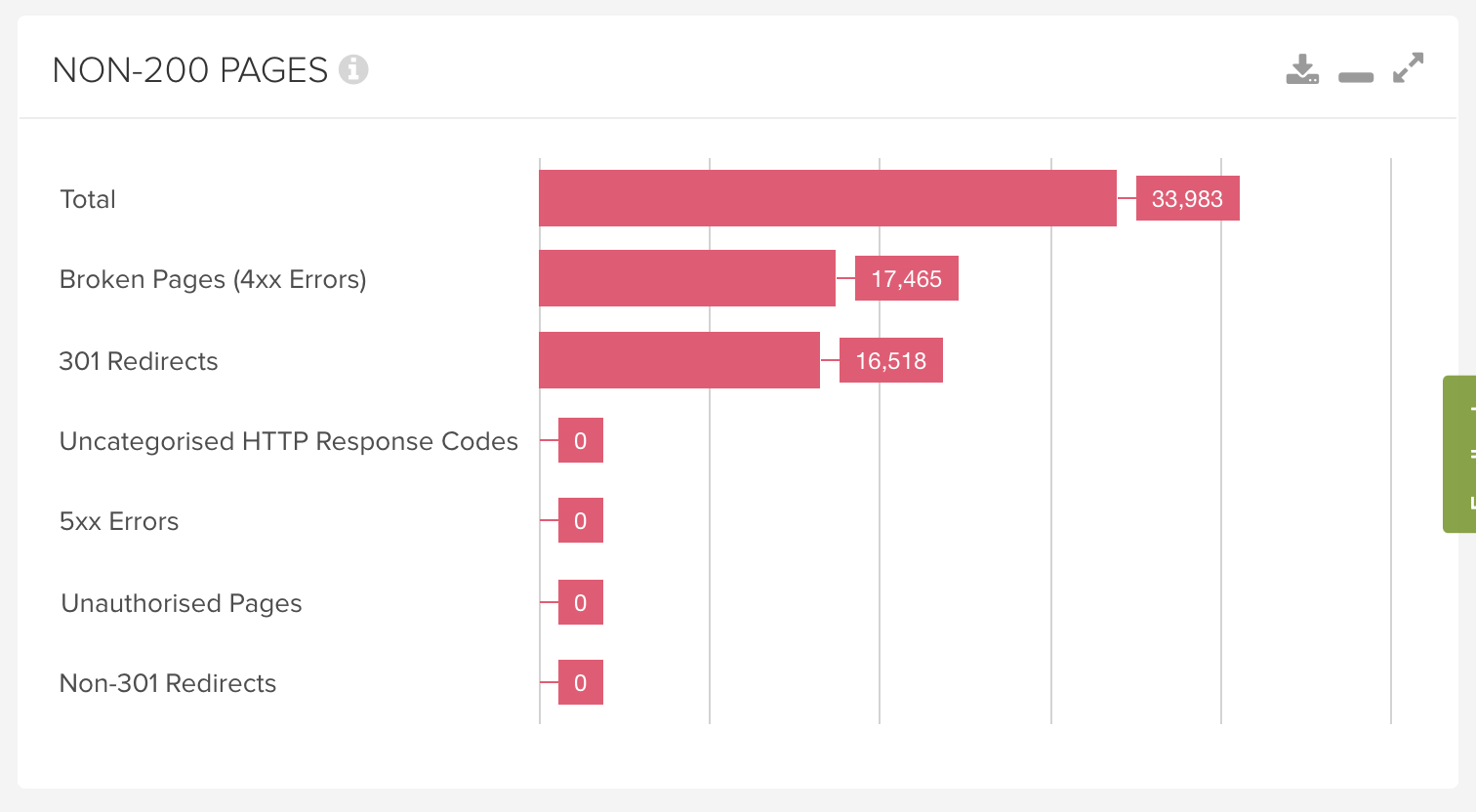

You can use Lumar (formerly Deepcrawl) to easily identify links to 301s and 404s at scale by crawling your site and using the non-200 page overview before diving into the 301 redirect & broken pages report to identify which pages are affected and where they are being linked from.

eCommerce SEO Mistake #4: Filters generating crawlable parameter URLs

Having many parameterized pages, often caused by filters and facets, can significantly increase the number of pages search engines have to crawl on a site.

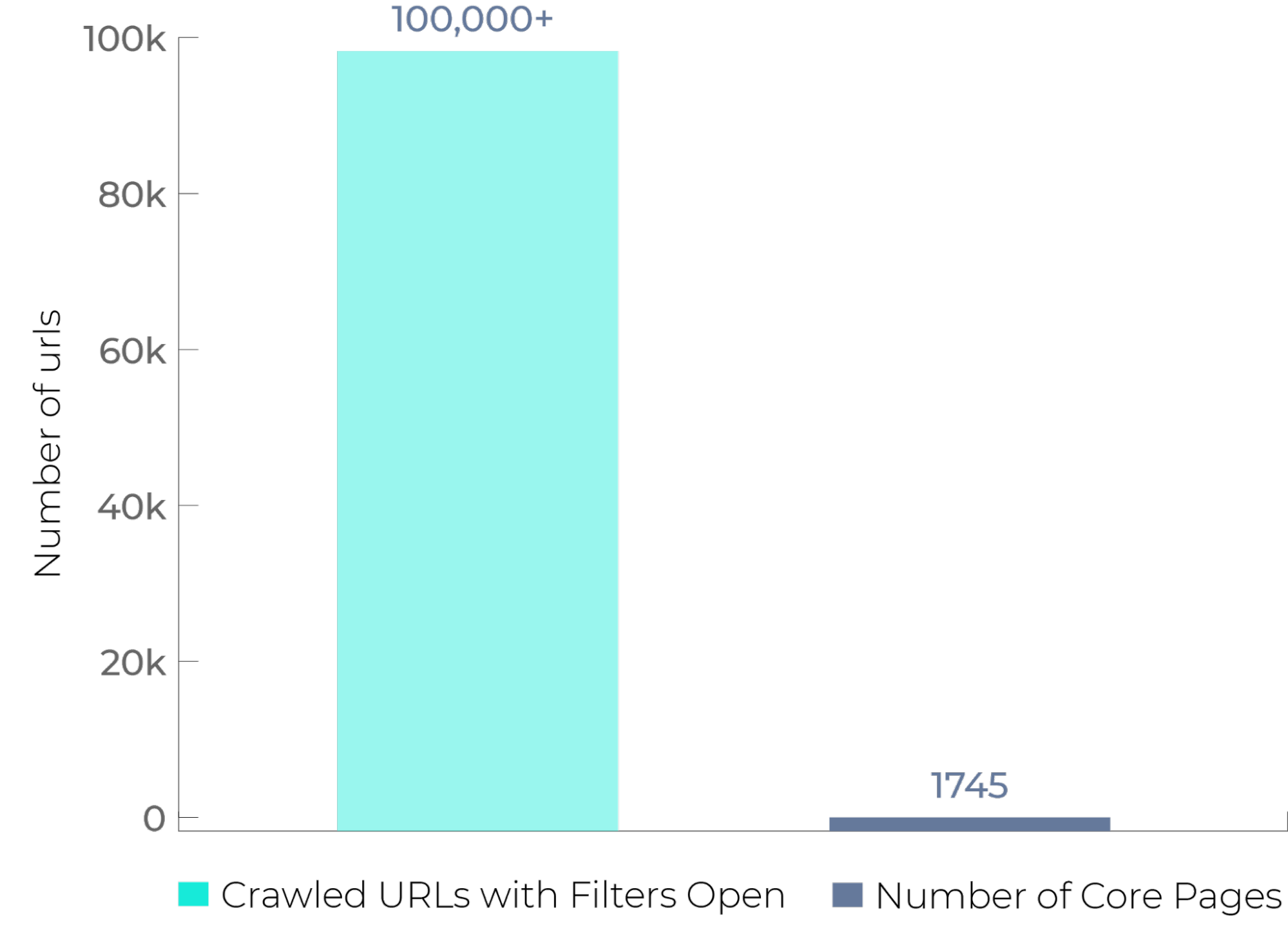

The following graph is a representation of a website that initially had its filters crawlable, compared to a crawl after the parameters were blocked in robots.txt:

As you can see, parameter filter pages were increasing the number of pages crawled by over 50x. (In reality, this number would be far higher, but luckily we had set a maximum crawl limit of 100k in Lumar!)
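You can get a rough version of this multiplier from a single crawl by stripping query strings and counting how many crawled URLs share each base page. This is a minimal sketch with hypothetical filter parameters (`colour`, `weight`); note that reordered parameters produce separate URLs, which is one reason filter combinations multiply so quickly.

```python
from collections import Counter
from urllib.parse import urlsplit, urlunsplit


def base_url(url: str) -> str:
    """Drop the query string and fragment, leaving the page the filters were applied to."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


def parameter_bloat(urls: list[str]) -> tuple[Counter, float]:
    """Count crawled URLs per base page and return the overall multiplier
    (crawled URLs divided by distinct base pages)."""
    per_page = Counter(base_url(u) for u in urls)
    multiplier = len(urls) / len(per_page) if per_page else 0.0
    return per_page, multiplier


crawled = [
    "https://example.com/kettlebells",
    "https://example.com/kettlebells?colour=black",
    "https://example.com/kettlebells?colour=black&weight=32kg",
    "https://example.com/kettlebells?weight=32kg&colour=black",
    "https://example.com/mats",
]
per_page, multiplier = parameter_bloat(crawled)
print(per_page.most_common(1), multiplier)
```

In this toy crawl, five URLs resolve to only two real pages, a 2.5x multiplier; on a large catalogue with many facets the same effect is far larger.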

If not properly configured, these parameter pages are close copies of the category page and therefore of very little value to search engines: they are pages we do not want ranking or competing with our main category.

Blocking parameterized filters in robots.txt, "nofollowing" internal links to these pages, or both, will help make sure they are not crawled by search engines. If these pages are already indexed and you want them removed from the index, apply a "noindex" first and wait for them to drop out before blocking them in robots.txt, because search engines cannot see a noindex on a page they are blocked from crawling.

N.B. If you are indexing facets to target additional search terms, you may want to review this approach to ensure that relevant targeted parameter pages can still be crawled.
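Python's built-in `urllib.robotparser` only does prefix matching, so it cannot be used to check wildcard rules such as `Disallow: /*?*colour=`. The sketch below is a simplified matcher following the RFC 9309 rules: `*` matches any sequence of characters, a trailing `$` anchors the end, and the longest matching pattern wins, with allow winning ties. It ignores percent-encoding normalization, and the filter parameter names are hypothetical; test your real rules against a sample of crawled URLs before deploying them.

```python
import re


def rule_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a regex anchored at the start."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """Decide whether `path` (path plus query string) may be crawled.

    `rules` holds ("allow" | "disallow", pattern) pairs for one user agent.
    The longest matching pattern wins; on a tie, allow wins.
    """
    best_len, allowed = -1, True  # no matching rule means crawling is allowed
    for kind, pattern in rules:
        if not pattern:
            continue  # an empty rule matches nothing
        if rule_to_regex(pattern).match(path):
            length = len(pattern)
            if length > best_len or (length == best_len and kind == "allow"):
                best_len, allowed = length, kind == "allow"
    return allowed


# Equivalent to "Disallow: /*?*colour=" and "Disallow: /*?*weight=" in robots.txt.
rules = [("disallow", "/*?*colour="), ("disallow", "/*?*weight=")]
for path in ["/kettlebells", "/kettlebells?colour=black",
             "/kettlebells?page=2&weight=32kg", "/mats?page=2"]:
    print(path, is_allowed(path, rules))
```

Blocking specific filter parameters, rather than every URL containing `?`, leaves room for parameters you do want crawled, such as pagination or facets you have chosen to index.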

eCommerce SEO Mistake #5: Links injected by JavaScript

Many eCommerce companies use JavaScript to power much of their sites' functionality. This can be used to great effect, and many JS apps, plugins, and extensions can seriously improve the experience on an eCom site.

However, when analyzing a site, you should always crawl it both with and without JavaScript rendering to better understand which URLs are being generated. Frequently, the rendered Document Object Model (DOM) contains URLs that are not present in the raw HTML, and these can be missed by a standard crawl that does not render JavaScript.

These URLs will be found by search engines when they render pages and can therefore be both crawled and indexed (while also using up resource-intensive rendering budget). More often than not, these URLs are also of little value: they are duplicates, parameterized pages, or pages that serve no ranking value.

Once identified, these links should be removed from the DOM, or nofollowed / noindexed, to ensure they do not introduce unwanted URLs to be crawled and indexed by search engines.
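The with-and-without-rendering comparison comes down to a set difference between the links in the raw HTML and the links in the rendered DOM. This sketch assumes you already have both versions of a page as strings (for example, exported from a crawler or a headless browser; rendering is not shown here), and it only looks at `<a href>` links. The URLs are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect absolute href values from <a> tags."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.add(urljoin(self.base_url, value))


def extract_links(html: str, base_url: str) -> set[str]:
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


def js_only_links(raw_html: str, rendered_html: str, base_url: str) -> set[str]:
    """Links present in the rendered DOM but absent from the raw HTML."""
    return extract_links(rendered_html, base_url) - extract_links(raw_html, base_url)


raw = '<a href="/kettlebells">Kettlebells</a>'
rendered = raw + '<a href="/kettlebells?sort=price_asc">Sort by price</a>'
print(js_only_links(raw, rendered, "https://example.com/"))
```

Running this across a crawl and aggregating the results shows which scripts or components inject the most unwanted URLs.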

Is crawl budget an issue for search engines?

Although Google spokespeople have said that crawl budget should not be an issue and that Google can readily crawl millions of pages, as SEOs we still want to optimize the website experience for both search engines and customers.

Therefore, making sure that search engines more readily crawl important pages (the ones we want to rank) should help improve the site’s organic performance.

How to check your eCommerce website health

Crawling your website yourself to see what search engines find when they crawl it is the ideal first step to understand where your eCom site is bloated and how to make it leaner, so that the important pages you want search engines to see are prioritized.

Crawling your site (or sections of your site) with standard settings, and again with JavaScript rendering, can help to identify issues at scale.

Search engine webmaster platforms (such as Google Search Console and Bing Webmaster Tools) can also be very helpful, giving you direction and an understanding of what search engines have historically had access to (or are currently crawling) on your site. This knowledge can in turn help you decide on your next steps to reduce eCommerce website bloat.