Last week I had the pleasure of attending and speaking at Eastern Europe’s largest digital marketing conference Digitalzone. Hosted by the amazing team at ZEO in Istanbul’s Radisson Hotel, some of the industry’s most influential speakers took to the stage to share their knowledge.

If you weren’t lucky enough to attend, never fear! I’ve taken notes on some of the conference’s best talks to keep feelings of FOMO to a minimum.

Larry Kim – Unicorn Marketing: Getting 10x results across every marketing channel

Talk Summary

In this session, Larry Kim shared his top growth marketing strategies and processes for getting 10-100x more value from your marketing efforts, packed with practical examples and advice for results-driven marketers.

In keeping with the chatbot theme, you can download Larry’s slides through Mobile Monkey’s very own chatbot here.

Key Takeaways

How should you be focusing your marketing efforts?

Earlier this year, Larry sold Wordstream for $150 million. Larry’s popularity is partly due to being “a person to follow” in the marketing space back when there was less noise and this community was less developed.

Larry also attributes some of his success to not becoming fixated on a particular tactic. His tactics change regularly, but his strategy has always stayed the same. So, what should you be focusing on in 2019?

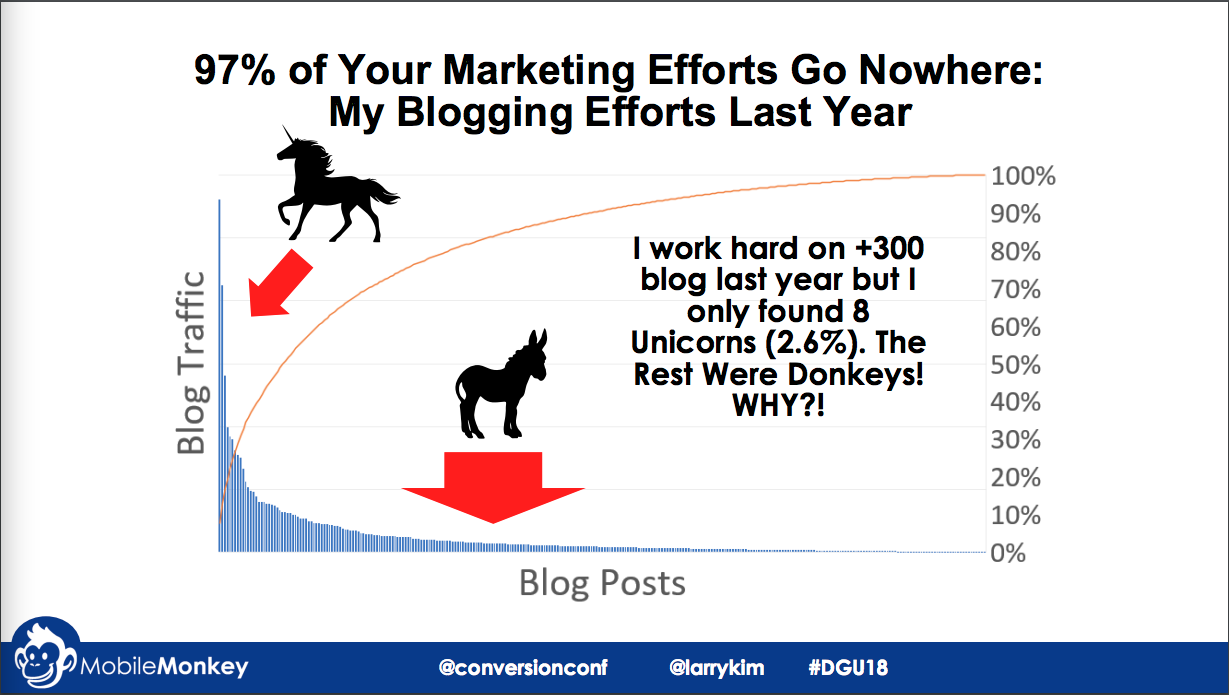

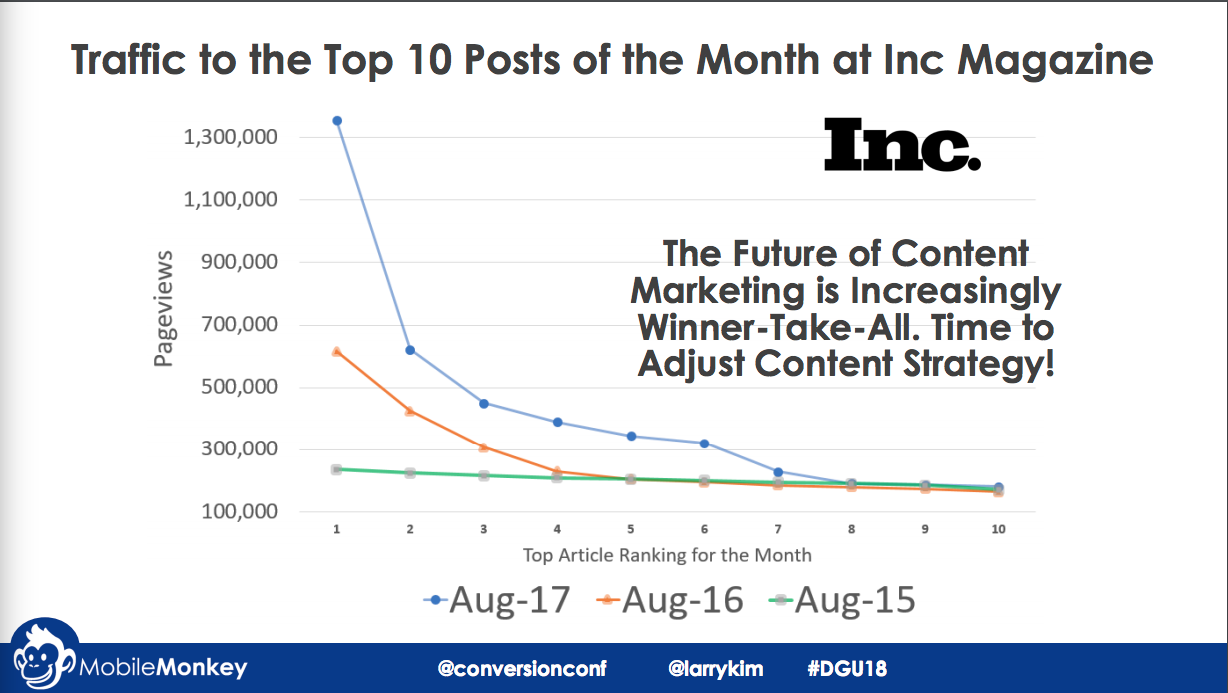

97% of your marketing efforts go nowhere. Last year Larry wrote 300 articles, of which 8 were unicorns (exceptional performers) and 292 were donkeys (everything else). Facebook and Google have changed their algorithms so that they reward unicorns and punish donkeys. In 2015, article performance was like communism, now it’s much more a case of winner takes all for the top two results. You can see a similar trend for Facebook shares.

As a result of this shift, you need to change your marketing strategy to align it with Google and Facebook’s algorithms. You need to understand the difference between donkeys and unicorns, and to do that you need to try things out.

Larry posted a lot of Facebook status updates to see which ones worked, so he could then produce more of those and fewer of the ones that didn’t. It’s the same with blog content and campaigns. Focus on your top 3% and do more of that.

Finding unicorns

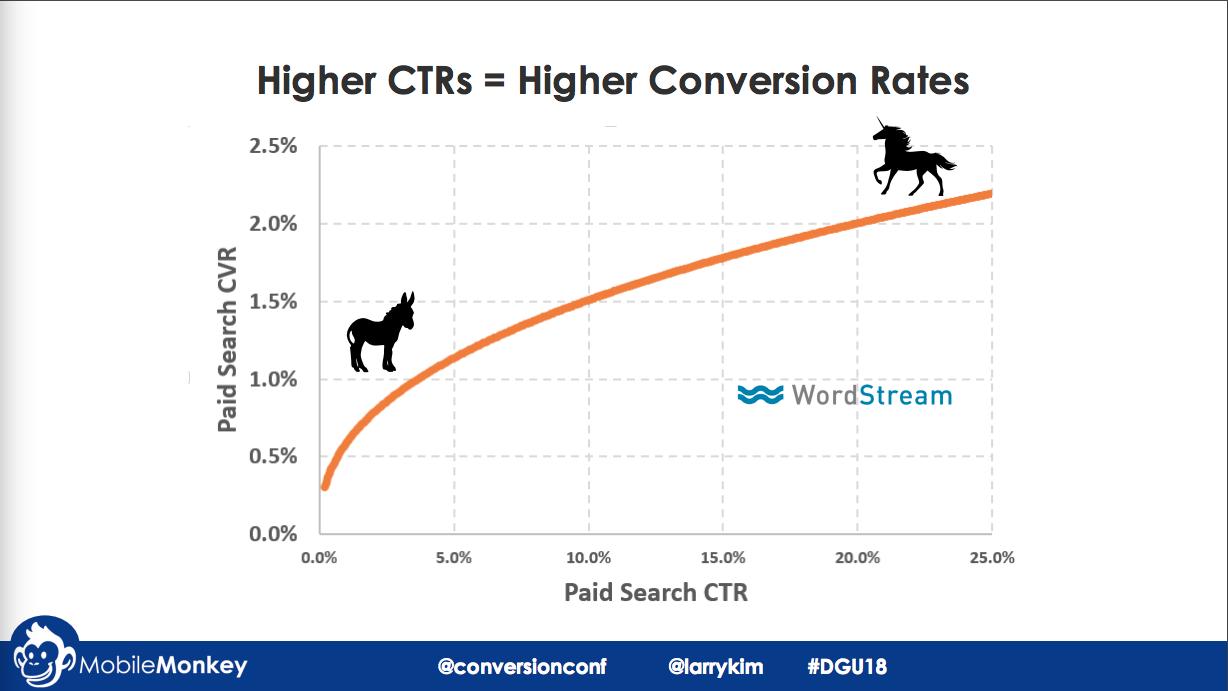

The key to AdWords has always been machine learning, which determines quality based on click-through rates. It’s the same for social posts: you pay more for ads with worse CTRs.

In organic search, click-through rates make a big difference. Larry’s research found that better ranking pages had higher click-through rates from month to month.

Are click-through rates related to engagement? If you can raise click-through rates in paid search, you will see a higher conversion rate. If you can get people excited about your site then they’re going to be more likely to convert.

All unicorns have unusually high click-through rates. That’s the signal used by all of these platforms to decide whether we get more or less exposure.

Unicorn/donkey detection

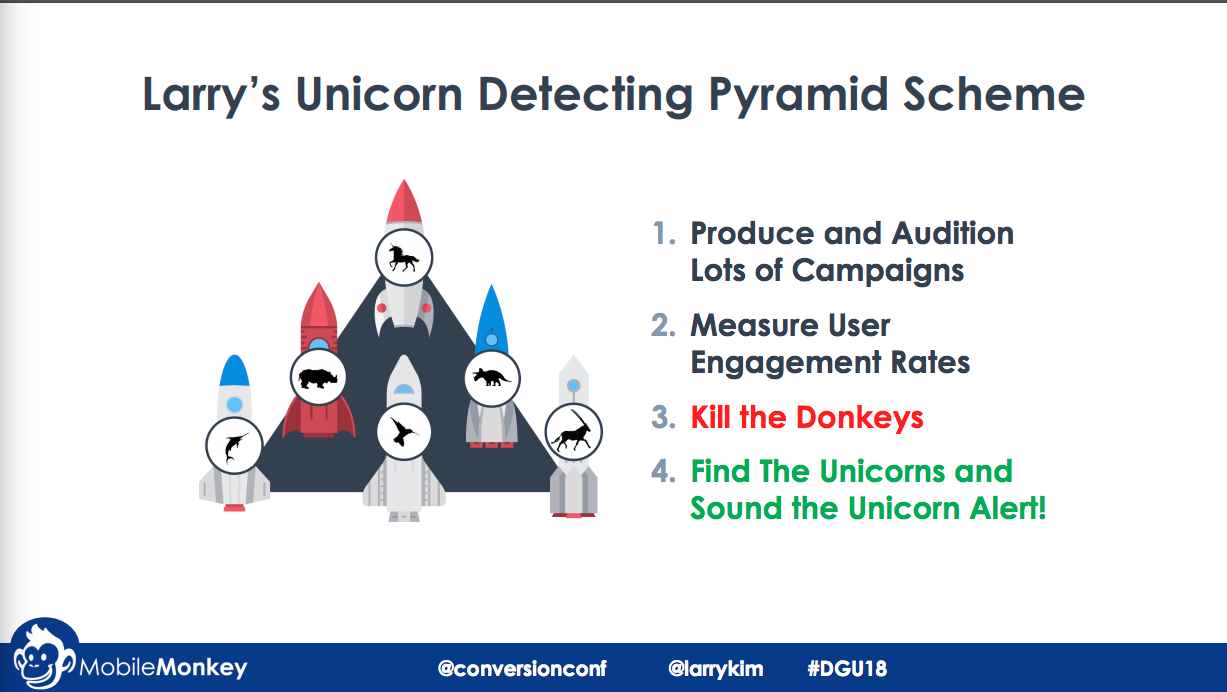

Lots of people think they have a unicorn but they don’t. Here’s how you can decide:

Audition a lot of campaigns, posts, etc., measure the user engagement rates and kill the donkeys – don’t keep pushing something that isn’t working.

What’s a good engagement rate? It’s a relative differentiation. Take all campaigns and rank them in descending order of click-through rates and look for outliers in the top 2%.
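The ranking step can be sketched in a few lines of Python. This is only an illustration of the idea, not Larry’s own tooling: the `Campaign` fields, the `find_unicorns` name and the 2% default are assumptions made for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class Campaign:
    # Hypothetical record; use whatever fields your ad platform exports.
    name: str
    impressions: int
    clicks: int

    @property
    def ctr(self) -> float:
        # Click-through rate; a campaign with no impressions counts as 0.
        return self.clicks / self.impressions if self.impressions else 0.0


def find_unicorns(campaigns, top_fraction=0.02):
    """Rank campaigns by CTR (descending) and return the top slice.

    At least one campaign is always returned, so small lists still work.
    """
    ranked = sorted(campaigns, key=lambda c: c.ctr, reverse=True)
    cutoff = max(1, math.ceil(len(ranked) * top_fraction))
    return ranked[:cutoff]
```

Everything outside the returned slice is a candidate donkey. Because the cut-off is relative, the same function works for ad campaigns, social posts or blog articles as long as you can supply impressions and clicks.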

Sounding the unicorn alert

Larry wrote a post called “5 Big Changes Coming to AdWords”, which was off-the-charts in terms of traffic and engagement. Larry recommends throwing away your marketing calendar and sounding the unicorn alert so you can replicate this success in other places. It’s already worked in one place so it’s likely to work well in another. Think about ways you can replicate this success with more in-depth spin-off blog posts, infographics, webinars etc.

Don’t be afraid to repeat something that’s worked well. Larry put on the same webinar five times with the same title and content and got 5,000 registrations for each of them. You don’t necessarily need to change the formula for something that works.

Larry recommends not having a monthly PPC budget but a unicorn slush fund which you can use to go all-in when you have a unicorn on your hands.

Larry’s unicorn brand hack

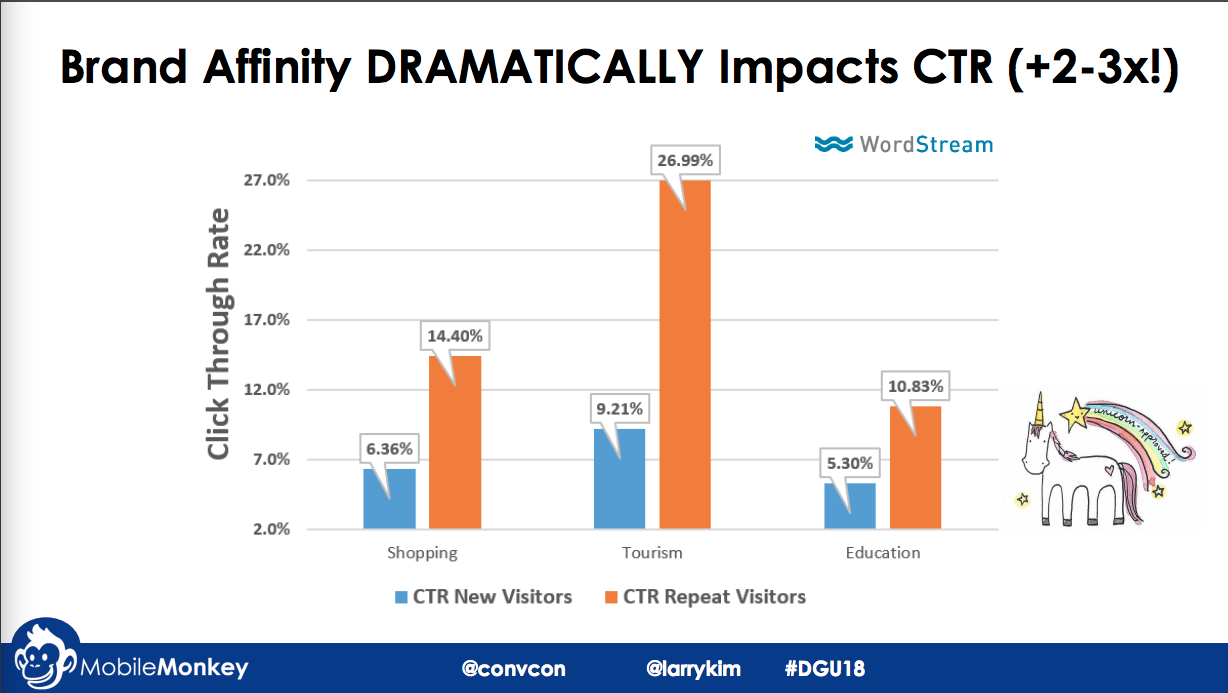

Larry looked at the click-through rates of new versus returning visitors (low versus high brand affinity) and found it was massively different. Click-through rates are much higher when a person is familiar with your brand. You need to create brand affinity early on in the funnel so they are more likely to click on you when they are ready to buy.

Use Google Analytics User Explorer and Facebook and Twitter Audience Insights to find out about demographics and interests and create content to cater to your top audiences, even if it isn’t necessarily your niche. For example, 70% of Larry’s audience is interested in entrepreneurship so he creates content to cater to them to create a positive brand affinity.

Facebook messenger and chatbot marketing

There are more daily users on messaging platforms than social media, but only 1% of marketing is spent there. How can we take advantage of this new channel to grow your business?

Messaging is an augmentation of email marketing, but you can send your audience surveys within chat and push notifications. Larry thinks chat blasting is the holy grail for the next few years.

With tools like Mobile Monkey you can chat blast content to your messenger contact list. It’s like email marketing, but way better because you can do things like ask follow-up questions. Larry doesn’t think chatbot blasting is spammy because the open rate is very high, 60% compared to 20% with email.

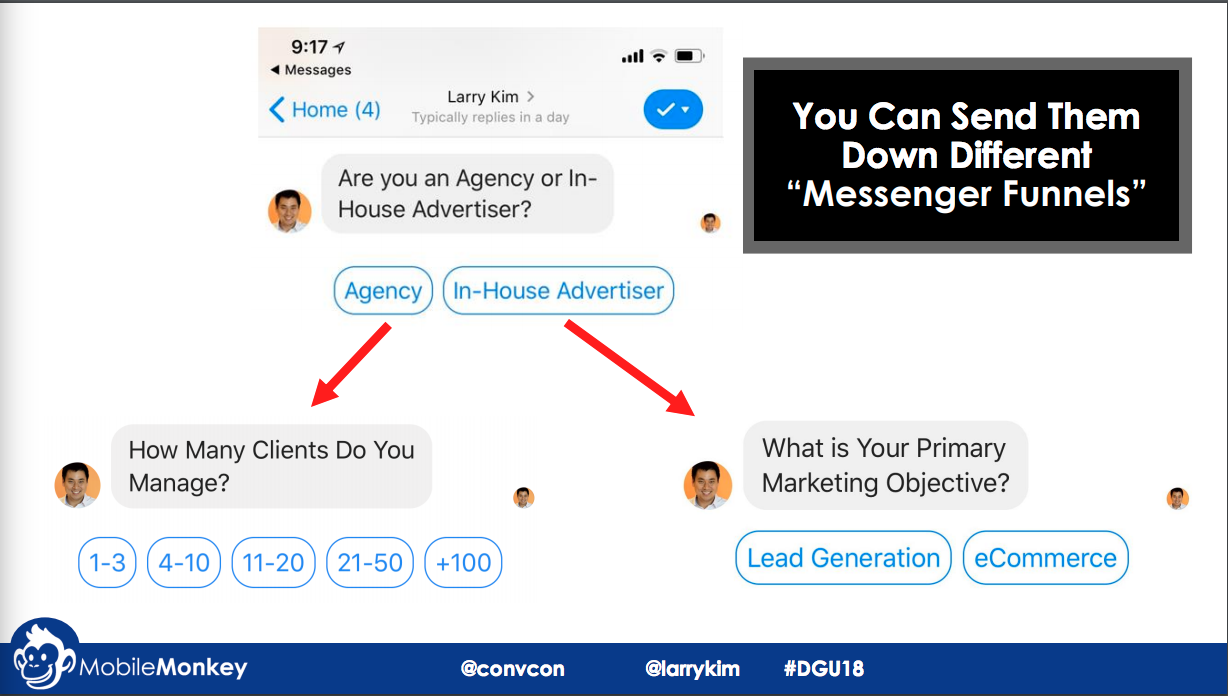

A benefit of messenger communications is that you can layer in dynamic content with multiple choice questions and create segments e.g. to find out whether they’re in-house or agency side. You can then put these people into segments and target them with more relevant messaging in the future.

It can be easier to fill out forms because inputs can be one-tap buttons. You can also layer in AI, so the intent behind user questions is analysed and responses are returned based on specific keywords. You can also look at unanswered questions and refine the chatbot responses over time.
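To make the keyword idea concrete, here is a minimal sketch of a keyword-based responder that also keeps a log of questions it couldn’t answer. It is not how Mobile Monkey works internally; the `RESPONSES` table, the `reply` function and the canned answers are all made up for illustration.

```python
import re

# Hypothetical keyword -> canned response table.
RESPONSES = {
    "pricing": "Our plans start with a free tier. Want the full price list?",
    "demo": "Happy to show you around. What day works for a demo?",
}

FALLBACK = "Sorry, I don't have an answer for that yet."


def reply(question, responses=RESPONSES, unanswered=None):
    """Return the first canned response whose keyword appears in the question.

    Questions with no keyword match are appended to `unanswered` (if given),
    so they can be reviewed later and turned into new responses.
    """
    words = set(re.findall(r"[a-z0-9']+", question.lower()))
    for keyword, response in responses.items():
        if keyword in words:
            return response
    if unanswered is not None:
        unanswered.append(question)
    return FALLBACK
```

Reviewing the `unanswered` list every so often and adding new keywords is the "refine over time" loop described above.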

Growing your messenger list

The olden days were all about collecting emails. It doesn’t work anymore because open rates are so low and the same has happened with organic social. The new game in town is getting people to message your business, so they opt-in to future communications.

Attach auto-responders to your posts so it starts a conversation. For example, Larry sent an offer to learn about increasing social organic reach to people who commented on a Facebook marketing post. Try and get more feedback in the form of comments, so you can start a conversation.

Larry recommends going back to old landing pages and adding a “send to messenger button”, installing messenger chat on your website so people automatically opt-in as soon as they say hello. Everyone that clicks “send message” now becomes a contact so now you can generate leads for $3 rather than $300.

People prefer communicating on messaging platforms, this is a major shift but marketing hasn’t caught up.

Bartosz Góralewicz – The Aspects of Technical SEO in 2018

Talk Summary

The ever-increasing speed of evolving technology has put technical SEO in a constant state of chaos. Bartosz Góralewicz cut through the noise to reveal a concrete look at the current state of technical SEO, how you can leverage technology as a core growth driver, and point out the aspects of technical SEO that could make or break you in 2018.

Key Takeaways

Current state of technical SEO

There have been a lot of changes in SEO and it’s been messy – a lot of people don’t get technical SEO. The problem lies with SEOs who need to get their shit together – not Google. As SEOs, we need to acknowledge and adapt to changes.

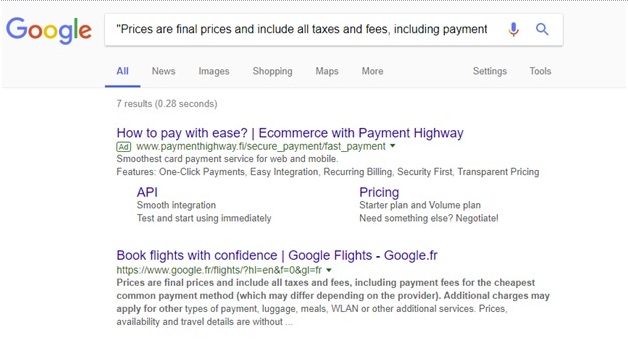

No one believed that Google was going to take over search in 1998. After 20 years, you’d think that they would know how to index their own sites properly. With Google Flights, most of their pages aren’t indexed in Google and they don’t appear in mobile-friendliness testing.

Big sites suffering in search

However, there are a lot of big players who are also not up to date and suffering in search. Hulu doesn’t work without JavaScript and that has had a bad impact on their search visibility. Hulu also has different titles in the source code compared to the DOM. These issues have meant Hulu has started to lose out to Netflix when they previously were well ahead.

USA Today is another big site with big problems. When you run USA Today through the Mobile-Friendly Test it shows it is invisible to Googlebot Smartphone. With Delivery.com, all of their pages are indexed, except for their homepage.

Why are all of these sites having problems?

Technical SEO in 2018 is much more complex and the pace of growth means that it is only going to get more complex. The biggest factors to consider are:

- Mobile-first indexing

- JavaScript indexing

- Web performance

The main limiting factors on the growth of these technologies are computing power and slow connection speeds.

JavaScript indexing

Web development has evolved since the 1990s, advancing from HTML-only to full JavaScript sites.

Google do their best to embrace new technologies like JavaScript. As an SEO you need to understand the two waves of indexing. At Google I/O John Mueller and Tom Greenaway explained the two waves of indexing with the classic crawling and indexing process and now rendering as a second wave of indexing. Now, if Google sees there’s a lot of JavaScript they will render the page in the second wave of indexing when resources become available.

In the first wave of indexing, the HTML, canonicals and metadata are crawled and indexed. In the second wave, Google indexes the rendered version of a page with the extra JavaScript-dependent content. You can see the difference between what is seen in these two stages by comparing view source (first wave) and inspect element (second wave).
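If you want to automate that view source versus inspect element comparison, a rough sketch follows. It assumes you already have both versions as strings (for example, the raw response body and the DOM copied out of DevTools or saved from a headless browser). The `TextExtractor` and `compare_waves` names are invented for this example.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects the <title> and the visible words of an HTML document."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.words = set()
        self._in_title = False
        self._skip = False  # True while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag in ("script", "style"):
            self._skip = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag in ("script", "style"):
            self._skip = False

    def handle_data(self, data):
        if self._skip:
            return
        if self._in_title:
            self.title += data.strip()
        else:
            self.words.update(data.split())


def compare_waves(source_html, rendered_html):
    """Compare raw source (first wave) with the rendered DOM (second wave)."""
    first, second = TextExtractor(), TextExtractor()
    first.feed(source_html)
    second.feed(rendered_html)
    return {
        "title_changed": first.title != second.title,
        # Words that only exist after JavaScript has run.
        "js_only_words": sorted(second.words - first.words),
    }
```

A changed title or a long `js_only_words` list suggests that important content only becomes visible in the second wave.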

Rendering a page is expensive for Google because it requires a lot of CPU power. Processing JavaScript is ~100x more expensive than processing HTML.

Web Performance

Google has started using performance as part of their search algorithms. We tend to measure performance using fast connections, with a good CPU. SEOs tend to have higher-end devices than your average user with better processors that can process JavaScript faster.

CPU and network impact performance. 45% of connections occur over 2G or 3G. So we can assume the average user is using a slow phone over a slow connection. Looking at the CNN site for example, you would see a 9-second difference in the load time between the new iPhone and an average phone.

Why is performance a ranking factor now?

There is a need for real user metrics and objective, crowd-measured metrics, which is where the Chrome UX Report has come in. Google has published data from Chrome which shows how quickly a site has loaded. You can see these metrics in PageSpeed Insights and all of the data is available via the Chrome User Experience Report (CrUX).

First Meaningful Paint (FMP) is difficult to measure because it varies from site to site. For example, the FMP for The Guardian is going to be at a different point compared to Giphy. Google hasn’t yet come up with a standardised definition.

User experience metrics now look at the different stages of loading. Standard speed tools aren’t good enough because load times are dead.

In an example of web performance done right, Netflix removed client-side react.js and replaced it with plain simple JavaScript. They only used React for 3 things. Removing react.js resulted in a 50% performance improvement. Compared to Hulu, Netflix shows you content right from the beginning, whereas Hulu loads everything at once right at the end of the loading time.

As SEOs we need to look at the devices users use, network speed, caching, browsers used.

Kevin Richard – How to rank on Google Turkey? A machine learning-based ranking factor study

Talk Summary

Kevin presented the results of an original piece of research, looking at how well linking metrics can predict rankings in organic search. Not satisfied with simple correlational techniques, Kevin trained a predictive model to better understand the extent to which linking metrics can predict rankings.

Key Takeaways

Not another ranking study

Kevin ran a ranking factors study, but a very specific one on Turkish search results. He wanted to know if linking metrics alone can predict rankings in Google.com.tr.

For the research, Kevin looked at results for 100k non-branded keywords and the top ranking results for the first 100 positions. He then looked at the linking metrics for all of these pages using Majestic data. With this data, Kevin wanted to go further than looking at regression testing, as this approach isn’t enough to properly understand ranking factors.

The Methodology

The following link metrics from Majestic’s database were used in the study: Trust Flow (backlinks from high-quality domains), Citation Flow (all backlinks), TF/CF (a spam index) and the number of referring domains.

Kevin decided to grab the top 100 URLs for each of the 100k queries and looked at the linking metrics for these, ordering them according to the domain’s Trust Flow rank. With this, the dataset included 10 million records.

After this, Kevin ran the study three different times and divided the data between a training and testing set (80/20). Kevin wanted to run an algorithm against training data and then use that on the testing data.

Kevin wanted to go further than a correlation study, so decision trees were needed. An algorithm containing 50 decision trees was developed using Python. These decision trees gather together and “vote” for the correct answer. However, the algorithm needed to be trained using downsampling (by taking risks) and by raising the penalty for each mistake (to let the algorithm know that some mistakes shouldn’t be made).
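Kevin’s code wasn’t shared in the talk, so the following is only a rough illustration of two of the building blocks described above: the 80/20 train/test split and the voting step of a tree ensemble. The function names are made up, and the trees themselves (along with the downsampling and penalty weighting) are left out.

```python
import random
from collections import Counter


def split_train_test(records, test_fraction=0.2, seed=0):
    """Shuffle a copy of `records` and split it into (train, test)."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # seeded, so the split is repeatable
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def majority_vote(tree_predictions):
    """Return the label that most trees in the ensemble predicted."""
    return Counter(tree_predictions).most_common(1)[0][0]
```

Each of the 50 trees would be fitted on the training portion only. For a held-out URL, each tree makes a prediction (for example "top 10" or "not top 10") and `majority_vote` turns those 50 answers into one.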

Getting the model right was a difficult task because you need to avoid underfitting (not explaining variance well enough) and overfitting (training the algorithm too well so that it knows the training set by heart).

How well does this model perform vs. total randomness?

The model had a 98.25% success rate for predicting the top position, 92% for the top 5 positions and 83% for the top 10 positions using linking metrics alone. The biggest weights in top 5 positions were the number of dofollow referring domains and the URL Citation Flow. The random value Kevin inserted featured as a very low predictor of ranking – which is good! For the top 10 positions, the weightings are less clear.

Looking at the SHAP values, randomness is a small factor and the number of referring domains is a useful factor in the prediction of rank.

Takeaways

Don’t aim for a specific number of backlinks compared to your competitors, you need to monitor your environment. Earn, don’t build backlinks. Get link equity to your URLs with good internal linking. Host your message on high authority sites.

Barry Adams – SEO for Google News: Get Rank at the Top

Talk Summary

Barry Adams provided tips and insights for publishers to get the maximum traffic from Google News.

Key Takeaways

Barry works with a number of big publishers and thinks that Google News is an interesting vertical that needs to be better understood.

What is ‘SEO’?

Search engines are about information retrieval and you can read about it on the Stanford site. It’s important to grasp some of the concepts, not necessarily the hard maths.

Each search engine has a crawler, an indexer and a ranker. This is how Google works in a simplified pipeline.

Google News is slightly different because it is so fast moving. Publishers need to give Google extra tools to rank in News.

An introduction to Google News

Google News was in Beta in 2002, launched in 2006, integrated with universal search in 2007 and AMP was launched in 2015.

Google News has been revamped recently. They say the AI decides what stories are displayed but Barry says that’s a way for Google to hide behind the code.

Half of a news site’s traffic comes from Google search, not News (2%), but that is the case because Top Stories shows for 11.5% of all Google queries. It is very rare for publishers not in Google News to show in Top Stories. Mobile news carousels are also very common. Google is more inclined to show non-Google News publishers in mobile carousel compared to Top Stories on desktop and 95% of stories in the mobile carousel are AMP pages.

AMP is trying to reimagine the web in their own image, not necessarily make it faster. It’s a restrictive standard and it is all or nothing – you either have valid AMP pages or you don’t. The problem is that you have to play by Google’s rules whether you like it or not as a publisher. The Irish Times has gained ground on Belfast publication since implementing AMP.

For Google News, you have to submit your site into their index so you can get in Top Stories and the mobile carousel. Getting approved as a Google News publisher is an extensive process and you have to be “proper” news, not a news section on a commercial site. You need original content, multiple daily updates, multiple contributors and an editorial specialty; niche news sites are also welcome. The technical requirements are a News XML sitemap, AMP pages, and NewsArticle structured data. All this allows for quick indexing.
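As an example of the structured data requirement, here is a minimal sketch that builds NewsArticle JSON-LD from a few article fields. The property names come from the schema.org vocabulary. The function name, the choice of fields and the example.com URLs in the usage note are assumptions, and Google’s own documentation is the place to check which properties it requires or recommends.

```python
import json
from datetime import datetime, timezone


def news_article_jsonld(headline, url, published, modified, author, image_url):
    """Return NewsArticle JSON-LD for embedding in a page's <head>."""
    data = {
        "@context": "https://schema.org",
        "@type": "NewsArticle",
        "mainEntityOfPage": {"@type": "WebPage", "@id": url},
        "headline": headline,
        "image": [image_url],
        # ISO 8601 timestamps, including the timezone offset.
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
        "author": {"@type": "Person", "name": author},
    }
    return json.dumps(data, indent=2)
```

The returned string goes inside a `<script type="application/ld+json">` tag on the article page. Passing timezone-aware values such as `datetime(2018, 11, 1, 9, 0, tzinfo=timezone.utc)` keeps the published and modified times unambiguous.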

Google has banned 200 publishers of late. Google is trying to keep the News index clean and get rid of fake news.

Optimising for Google News

Editorial speciality is the biggest factor for ranking in News. Write about a topic regularly then you’ll be seen as an authoritative source and appear more regularly for relevant queries on that topic.

Headline keyword matching is the single most important on-page ranking factor. You need keywords from the search query in your article headline. The keyword needs to be in the first six words of your headline.

Puns don’t work for headlines in News because algorithms don’t have a sense of humor. The limit for headlines is 110 characters; anything longer will break the structured data.
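Both headline rules are easy to check before an article goes live. The sketch below is one possible interpretation, not an official Google check: it assumes that every word of the target query should appear within the first six words of the headline, and the `check_headline` name is invented.

```python
import re


def check_headline(headline, query, max_chars=110, lead_words=6):
    """Check a headline against the length and keyword-position rules."""
    tokenize = lambda text: re.findall(r"[\w']+", text.lower())
    lead = tokenize(headline)[:lead_words]
    return {
        "within_length": len(headline) <= max_chars,
        # Assumption: all query terms must appear in the first six words.
        "keywords_in_lead": all(term in lead for term in tokenize(query)),
    }
```

For the query "istanbul earthquake", the headline "Istanbul earthquake: what we know so far" passes both checks, while "What we know so far about the quake that hit Istanbul" fails the keyword check.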

Recency is an important ranking factor. Google prefers recently published or updated articles. You can modify the content so that it looks more recent and so it will reappear in Top Stories. However, you can get kicked out of the News index for this behaviour if it is done too much.

Content factors

Articles should be more than 80 words (although Barry has seen 200-word articles rejected) and not too long. Google decided to deprecate the News report in Search Console, which Barry is positively furious about.

The most common error is “title not found”. This error often occurs because of special characters present in the title, which Googlebot isn’t able to extract and therefore the page won’t be indexed.

With Google News, it is the crawler that does the heavy lifting with first stage indexing – that’s why pages are so quick to be indexed. Recency means that there needs to be a focus on speed.

Publishers need to make sure the HTML source code is clean so that the HTML parser in Googlebot can easily extract information. See Barry’s common crawl errors on Google News on State of Digital.

Leveraging Google News for regular SEO

News websites also dominate regular organic results and it’s all about links. Publishers have very powerful link metrics. They’re part of a self-reinforcing cycle of link building.

It’s the article pages that get the links, so publishers need to leverage those to benefit category pages. Make sure you have article tags on article pages, so these category pages get a slice of the link equity through internal linking.

The Mail Online has pioneered this process but overdid it with too many internal links to category pages, they have over-optimised. The Sun removed their paywall and were smarter with internal linking, so have made up ground in terms of search visibility.

Newspocalypse

Tabloid news sites were slaughtered on 9th March 2018, when traffic went down by half. The reason was a core algorithm update and it looks like what Google did was give visibility to sites that have a defined topical focus. Barry called it the “stay in your lane” update.

Google now tends to give visibility to topically relevant sites e.g. music-focused results for music-focused websites. This is the case even if the content on other sites is really good. Google will only let you rank in certain topical areas – this is a big game-changer.

What’s coming?

Speakable markup denotes when certain parts of a site can be read out by an Assistant. This development is incredibly powerful as it allows you to tap into voice search. Google is moving quickly with speakable markup as they want to become more useful than Amazon’s Alexa.

NewsArticle markup is being expanded to more accurately interpret what you’re writing about e.g. reportage, review, background, analysis.

NewsGuard was started by journalists in the US. Journalists manually look at news sites to make sure they adhere to journalistic standards, as a part of Google’s attempt to remove fake news. If they do adhere, they get a green mark in the SERPs.

Gary Illyes – How To Make Google Image Search Work For You

Talk Summary

Gary Illyes talked about how Google Images works and how to optimize your content to help your website do better in Image search.

Key Takeaways

Gary sees a lot of people missing out on image traffic and it’s a big untapped opportunity.

How does Google Images work?

All search is split into crawling, indexing, and ranking. The most important thing is that Google can see images in the HTML, using the image’s source to send to indexing. In indexing, Google then categorises images by type.

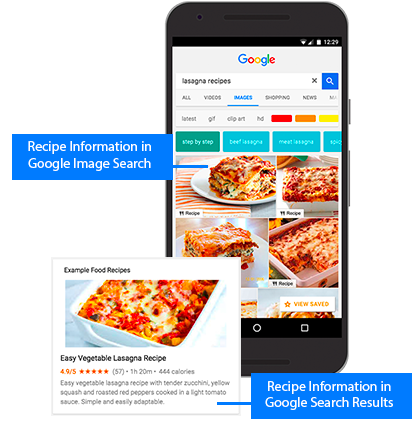

You can mark up images with structured data, such as product or recipe schema, which will then be picked up and displayed in image search.

How can you do better in Google images?

The first thing that SEOs usually miss is images being blocked by robots.txt. If Googlebot isn’t able to access your images, then it won’t be able to index them. You need to identify if images are being blocked and you can use the Mobile-friendly test to do this. If images can’t be reached then you can check robots.txt to see if they are blocked.
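You can also check this programmatically. Here is a small sketch using Python’s standard-library robots.txt parser. The `is_image_crawlable` name is made up, and the standard-library parser uses simple prefix matching, so it won’t necessarily interpret wildcard rules the way Googlebot does. Treat the result as a first pass rather than a final answer.

```python
from urllib.robotparser import RobotFileParser


def is_image_crawlable(robots_txt, image_url, user_agent="Googlebot-Image"):
    """Return True if the robots.txt rules allow `user_agent` to fetch the image.

    `robots_txt` is the text of the file, fetched however you like.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, image_url)
```

Running your most important image URLs through a check like this makes it easy to spot a stray `Disallow: /images/` rule.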

How do bots find images?

Image sitemaps are the second most important discovery method for images. An image sitemap will allow images to be indexed much faster than through normal crawling. Gary recommends reading Google’s documentation on building image sitemaps.
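For reference, here is a minimal sketch of generating an image sitemap with Python’s standard library. It uses the sitemap and image-extension namespaces from the sitemaps.org protocol and Google’s image sitemap documentation. The `build_image_sitemap` name and the input format (page URL mapped to its image URLs) are assumptions for the example.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"


def build_image_sitemap(pages):
    """Build sitemap XML; `pages` maps a page URL to its list of image URLs."""
    # Register prefixes so the output uses xmlns and xmlns:image.
    ET.register_namespace("", SITEMAP_NS)
    ET.register_namespace("image", IMAGE_NS)
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page_url, image_urls in pages.items():
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page_url
        for image_url in image_urls:
            image = ET.SubElement(url, f"{{{IMAGE_NS}}}image")
            ET.SubElement(image, f"{{{IMAGE_NS}}}loc").text = image_url
    return ET.tostring(urlset, encoding="unicode")
```

Each `<url>` entry lists the page and the images that appear on it, which also lets Google discover images that are only loaded via JavaScript.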

Alt and source attributes in the IMG tag are required for it to be indexed in Google Images.

If you can include better file names that describe what the image is about that will help Google better understand images, although this may not be feasible for sites with large volumes of images. Generic alt attributes aren’t great for being indexed in image search, so be more descriptive when you can e.g. “chicken burrito with lime pieces” is better than “food”. There’s no character limit for alt attributes but use them to describe precisely but concisely.
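A quick audit script can flag images that are missing these attributes or that use a generic alt. This is a rough sketch: the `GENERIC_ALTS` list is an arbitrary set of examples, not a list Google publishes, and the class and function names are invented.

```python
from html.parser import HTMLParser

# Assumed examples of alt text too generic to be useful.
GENERIC_ALTS = {"image", "img", "photo", "picture", "food"}


class ImageAudit(HTMLParser):
    """Records <img> tags with a missing src, missing alt, or generic alt."""

    def __init__(self):
        super().__init__()
        self.issues = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or ""
        alt = (attributes.get("alt") or "").strip()
        if not src:
            self.issues.append(("<no src>", "missing src"))
        elif not alt:
            self.issues.append((src, "missing alt"))
        elif alt.lower() in GENERIC_ALTS:
            self.issues.append((src, "generic alt"))


def audit_images(html):
    """Return a list of (image src, issue) tuples for one HTML document."""
    audit = ImageAudit()
    audit.feed(html)
    return audit.issues
```

An image with `alt="chicken burrito with lime pieces"` passes, while `alt="food"` is reported as generic.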

Adding structured data to your pages and images may result in having your images badged, thus standing out more in Image search. The image title will almost always be used in Image search.

Google have recently updated their Image Publishing guidelines, so Gary recommends having a read through these.

Cindy Krum – Mobile-first Indexing or a Whole New Google

Talk Summary

In this session, Cindy spoke about what ‘link equity’ might mean in a mobile-first index world, why you don’t need a URL to be indexed anymore, language & keyword indexing vs entity indexing, what chatbots have to do with voice search and Google Actions and Google Assistant.

Key Takeaways

Google and innovation

SEOs are missing the point with mobile-first indexing. With the innovation S-curve, you start to hear about something and then it takes off in a big way. Minor and incremental changes over time result in rapid growth. Then you have new, replacement technologies which have their own S curve happening simultaneously.

If you’re ignoring mobile now, you’re not a good SEO. Half of searches aren’t on desktop and half of searches on mobile aren’t resulting in clicks. Above-the-fold is now dominated by Google hosted assets and voice-first devices have really taken off in the past year or so.

What is Google’s aim?

Google wants to organise everything. They want to rank more than just websites e.g. audio, businesses, podcasts, SMS, photos, music – it’s all information. All of these things don’t necessarily have URLs.

Google started by using link graph but it’s messy, and Google is trying to move away from it. Cindy sees mobile-first indexing as a new organisational methodology.

Indexing is the way you organise information, like the Dewey decimal system, so mobile-first indexing is organising the information slightly differently. Cindy thinks that the shift to mobile-first indexing is about organising information according to the knowledge graph.

Increased focus on entities

Knowledge Graph items have different numeric IDs. For example, Taylor Swift is a knowledge graph entity and has a different numeric ID, as do each of her songs.

At the centre of the change are entities. Entities make Google’s job easier because they are language agnostic. Image search has moved beyond keywords, it is language agnostic – it is the same regardless of the language that describes it.

Google was built in English but it takes longer to make updates in other languages because Google receives less feedback. Google wasn’t using heuristics to understand entities, but it is now leveraging its knowledge of other languages to further understand entities.

Google Cloud Natural Language API

The Google Market Finder tool analyses a site and returns how it categorises what it thinks the site is about. In addition, the Google Cloud Natural Language API and its demo tool tell us what Google knows about something and its connection with other entities.

Cindy and Mobile Moxie have done some research on how the Cloud Natural Language API works in search and you can read about it in their five-part series.

Entities are universal ideas or concepts and they don’t require links or websites.

The device context is massively important to provide you with the right content. You wouldn’t want to listen to a podcast from a watch, for example. This is where Google Assistant comes in to connect devices e.g. Chromecasting to another device.

We’re used to Knowledge Graphs showing official domains, but they are now deeplinking to pages and showing search results within a local pack. More personalized experiences are easier to monetise.

Don’t get locked into thinking you can only optimise websites. There are many different things that rank without a website.

Interested in Google Data Studio?

As well as listening to some great talks, I had the honor of speaking on the Digitalzone stage about how you can automate SEO reporting using Google Data Studio. If you’re interested in finding out more you can find my deck and accompanying guide here.