You may have seen the recent news about the enhancements we’ve made to our crawler! These exciting improvements not only make our crawler the fastest on the market today, but also bring Lumar even closer to Google’s own behaviors and allow us to increase the breadth and flexibility of the data we can extract from pages. Let’s take a closer look.

Increased Crawl Speeds

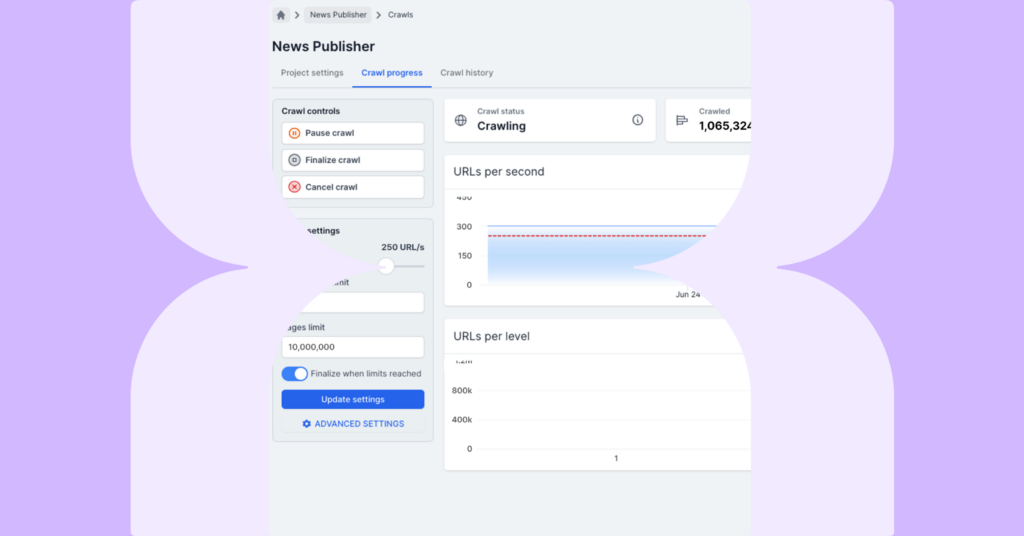

By using the latest serverless technology, we’ve been able to increase crawl rates to the fastest possible speeds. During our field testing, we achieved speeds of 450 URLs per second for unrendered crawls and 350 URLs per second for rendered crawls. Because crawls complete faster, data is available for processing sooner, meaning you get the insights you need as quickly as possible.
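To put those rates in perspective, here is a rough sketch that estimates crawl duration at a constant rate. The function name and the one-million-URL site are illustrative assumptions, and the calculation assumes the crawl sustains the field-tested rate throughout, which a real crawl may not.

```python
def estimated_crawl_minutes(url_count: int, urls_per_second: float) -> float:
    """Return an idealised crawl duration in minutes at a constant rate."""
    if urls_per_second <= 0:
        raise ValueError("urls_per_second must be positive")
    return url_count / urls_per_second / 60


# Hypothetical site with one million URLs, using the field-tested rates.
unrendered = estimated_crawl_minutes(1_000_000, 450)
rendered = estimated_crawl_minutes(1_000_000, 350)
print(f"Unrendered: about {unrendered:.0f} minutes")
print(f"Rendered: about {rendered:.0f} minutes")
```

Under these assumptions, the estimate comes out at roughly 37 minutes unrendered and 48 minutes rendered.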

Important Considerations

While the enhancements we’ve made mean the crawler can effectively crawl as fast as possible, crawling too quickly can mean your server is unable to keep up, and your site will experience performance issues. To avoid this, Lumar sets a maximum crawl speed for your account, which we can update for you to enable faster crawling. Before requesting an increase, keep the following in mind:

- Before you request any update to your crawl speed, it’s essential that you consult with your dev ops team to identify what crawl rate your infrastructure can handle.

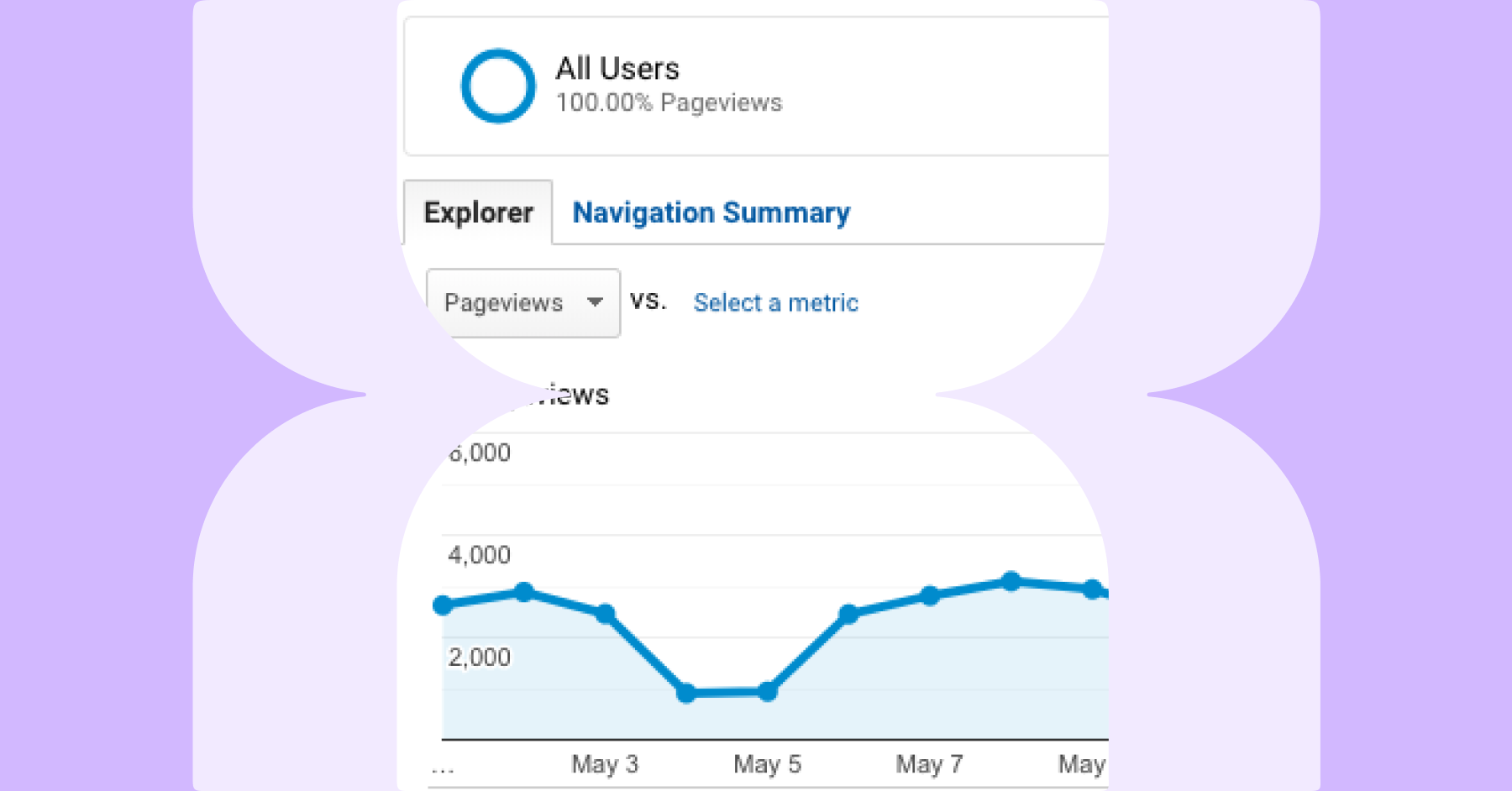

- We always recommend that you schedule crawls for times when traffic on your site is low (e.g. between 1am and 5am). If your site receives around 50 million views per month, crawling above 30 URLs/s should be safe at times of low traffic. Even so, please consult your dev ops team first.

- To ensure efficient crawling, the IP addresses of Lumar’s crawler should be whitelisted by your dev ops team. Please ensure they whitelist both 52.5.118.182 and 52.86.188.211.
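For dev ops teams, the whitelist check described above could look something like the following minimal sketch. The function name `is_lumar_crawler` is hypothetical, and real whitelisting is more likely to be configured in a firewall, CDN or WAF than in application code.

```python
import ipaddress

# The two crawler addresses listed above.
LUMAR_CRAWLER_IPS = frozenset(
    ipaddress.ip_address(ip) for ip in ("52.5.118.182", "52.86.188.211")
)


def is_lumar_crawler(remote_addr: str) -> bool:
    """Return True if the request address is one of the listed crawler IPs."""
    try:
        return ipaddress.ip_address(remote_addr) in LUMAR_CRAWLER_IPS
    except ValueError:
        # Not a valid IP address string at all.
        return False


print(is_lumar_crawler("52.5.118.182"))
print(is_lumar_crawler("203.0.113.7"))
```

Parsing with `ipaddress` rather than comparing raw strings avoids false negatives from formatting differences and rejects malformed input safely.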

Closer Alignment to Google Behaviors

We’re taking full advantage of Google’s industry-leading rendering and parsing engine to align as closely to Google behaviors as possible. This has some implications for customers once the enhanced crawler is enabled:

- We use Chromium to request pages, build the DOM and parse metrics for both JavaScript-enabled and JavaScript-disabled crawls. The actual HTML used to extract metrics can be found in Chrome (Inspect > Elements) rather than in View Source. To see the pre-rendered DOM like for like, you will need to turn off JS rendering in the browser.

- Some characters are now stored and displayed differently, replicating what’s seen in Google Search Results more closely and making data easier to interpret. You might therefore see some changes in metrics, including h1_tag, page_title and url_to_title.

- To follow Google’s behaviors, noindex in robots.txt is no longer supported. Pages noindexed in robots.txt will now be reported as indexed.

- We now have a limit of 5,000 links per page, in line with the rough ceiling estimate provided by Google. Links exceeding the limit will not be crawled.
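If you want a quick sense of whether a page is likely to hit the 5,000-link limit, a rough check along these lines may help. This is only a sketch: it counts `<a>` elements with a non-empty `href` using Python's standard HTML parser, which is an assumption about what counts as a link and will not match Chromium's parsing exactly. The names `LinkCounter` and `links_over_limit` are hypothetical.

```python
from html.parser import HTMLParser

MAX_LINKS_PER_PAGE = 5_000  # limit stated above


class LinkCounter(HTMLParser):
    """Collect href values from <a> tags in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def links_over_limit(html: str, limit: int = MAX_LINKS_PER_PAGE) -> int:
    """Return how many links on the page exceed the limit (0 if within it)."""
    parser = LinkCounter()
    parser.feed(html)
    parser.close()
    return max(0, len(parser.hrefs) - limit)


# Synthetic page with 5,003 links, so 3 are over the limit.
page = "".join(f'<a href="/p{i}">x</a>' for i in range(5_003))
print(links_over_limit(page))
```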

Data Improvements

We’ve also made data improvements to reflect Google practices and enhance the insights you get from Lumar, including:

- Improved metrics due to word_count and content_size being calculated from body only. You may see an increase in Thin Pages as the reported content_size metric will be slightly lower. Duplicate body/page reports may also be impacted due to slightly different content used to determine duplicate content. Some other metrics may also be affected, such as content_html_ratio and rendered_word_count_difference.

- We’ve cleaned up whitespace in anchor text to remove the risk of unique links caused by whitespace differences. You may see a number of added/removed unique links on the first crawl, due to different digests for anchor texts.

- The calculation of meta_title_length_px metrics has been improved. You may see slightly higher values for this metric and an increase in the number of pages in the Max Title Length Report.

- \s, \n and \t characters are now stripped from meta descriptions to ensure consistency in max length reports. You may see differences in the meta_description and meta_description_length metrics and see fewer pages in the Max Description Length Report.

- Previously, 300, 304, 305 and 306 responses were treated as redirects if a location was provided. These are now always treated as non-redirects, which may result in a drop in redirects following the upgrade to the enhanced crawler.

- The meta_noodp, meta_noydir, noodp and noydir metrics have been deprecated. The metrics still exist, but now default to FALSE.

- All acceptable fallback values are reflected in the valid_twitter_card metric calculation, which may result in some differences in this metric.

- We’ve improved the DeepRank calculation by taking into account both HTML and non-HTML pages.
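To illustrate why the anchor text cleanup mentioned above can change unique link counts, here is a minimal sketch. It assumes that runs of whitespace are collapsed to a single space and that the digest is a SHA-1 hash of the cleaned text. Both are illustrative assumptions, not a description of Lumar's actual normalization or hashing.

```python
import hashlib
import re


def normalize_anchor_text(text: str) -> str:
    """Collapse whitespace runs to one space and trim the ends (assumed rule)."""
    return re.sub(r"\s+", " ", text).strip()


def anchor_digest(text: str) -> str:
    """Hash the normalized anchor text (SHA-1 chosen only for illustration)."""
    return hashlib.sha1(normalize_anchor_text(text).encode("utf-8")).hexdigest()


# These differ only in whitespace, so they now share one digest.
a = "Read  more"
b = " Read\n\tmore "
print(anchor_digest(a) == anchor_digest(b))
```

Links that previously counted as distinct because of stray spaces or line breaks collapse to one digest, which is why the first crawl after the change may show added and removed unique links.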

Other Improvements

In addition, we’ve made a few other improvements, including:

- Structured data metrics are now available on non-JS crawls.

- You can now run crawls on regional IPs and custom proxies with JS rendering on (including for stealth crawls).

- We have new metrics for AMP pages, which may mean you see more pages appearing in AMP page metric reports. The new metrics are: mobile_desktop_content_difference, mobile_desktop_content_mismatch, mobile_desktop_links_in_difference, mobile_desktop_links_out_difference and mobile_desktop_wordcount_difference.

- The maximum page size limit has been removed, allowing reporting on whatever Chromium can process within the timeout period. This may result in new pages (and additional pages linked from those pages) being crawled.

- We are now reporting on unsupported resource types. This provides additional reporting, including HTTP status, content type and size. We also report on the non-HTML pages found in the crawl report.

- We’ve increased the number of redirects we follow from 10 to 15. You’ll now be able to see longer redirect chains in reports (e.g. Redirect Chain).

- We’ve improved processing speeds, to reduce the time between crawls completing and reports being available.

- The found_at metric of start URLs is now reported as empty. Previously, the found_at metric was reported as the first URL it was found at.

- Finally, we’ve improved the redirects_in_count metric to match the actual redirects that point to a given URL.
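The higher redirect limit mentioned above can be pictured with a small simulation. This sketch walks a chain using a plain dictionary of responses instead of real HTTP requests. The function name, the dictionary shape, and the set of status codes treated as redirects (301, 302, 303, 307, 308) are assumptions for illustration only.

```python
# Assumed set of redirect statuses; 300, 304, 305 and 306 are excluded.
REDIRECT_STATUSES = {301, 302, 303, 307, 308}
MAX_REDIRECTS = 15  # new limit stated above (previously 10)


def follow_redirects(start_url, responses, max_redirects=MAX_REDIRECTS):
    """Return the list of URLs visited, following at most max_redirects hops.

    `responses` maps url -> (status, location); unknown URLs count as 200.
    """
    chain = [start_url]
    url = start_url
    for _ in range(max_redirects):
        status, location = responses.get(url, (200, None))
        if status not in REDIRECT_STATUSES or not location:
            break
        chain.append(location)
        url = location
    return chain


# Hypothetical chain of 20 redirects: /r0 -> /r1 -> ... -> /r20
responses = {f"/r{i}": (301, f"/r{i + 1}") for i in range(20)}
chain = follow_redirects("/r0", responses)
print(len(chain) - 1, "redirects followed, ending at", chain[-1])
```

Capping the number of hops also guards against redirect loops, since a loop simply exhausts the limit.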

Once the enhanced crawler is enabled, there are also a few final things to be aware of:

- The order in which URLs are crawled during a web crawl has changed. When crawling partial levels, the URLs crawled may change the first time a crawl is run with the enhanced crawler.

- Previously, if a project didn’t have any custom extractions set up, the Extract Complement Report would include many URLs flagging that no matches were found. Now, URLs will only be reported if custom extractions exist within the project. Users may therefore notice a drop to zero as a result of this for affected projects.

- The content_size metric is now calculated in character length rather than byte size. This can affect content that contains multi-byte (non-ASCII) characters, each of which will now be counted as 1 character rather than 2 or more bytes.

- We’ve fixed a bug that meant meta refresh redirects may have previously been reported as primary pages. You may see a small drop in the number of primary pages as a result.

- The reported crawl rate is actually the rate at which URLs are processed. Because a large number of URL fetches can complete simultaneously, the reported rate may appear higher than the desired setting, but the actual crawl rate setting will not be exceeded.

- Malformed URLs are no longer included in link counts. You may see a lower link_out_count metric value in the reports.

- Any URLs that cannot be handled by the Chromium parser are now considered malformed, which may result in some differences in the Uncrawled URLs report.

- We’ve changed the way some reports that require other sources work (e.g. Pages not in Log Summary, Pages not in List, Primary Pages not in SERPs, Indexable Pages without Bot Hits). These reports will only be populated if the required source is enabled. For example, the Pages not in Log Summary report will only contain URLs if you have Log Files sources enabled in the project.
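The difference between character length and byte size mentioned in the list above is easy to see in a short example. This sketch assumes UTF-8 encoding for the byte count, and the function name `size_in_chars_and_bytes` is hypothetical.

```python
def size_in_chars_and_bytes(text: str) -> tuple[int, int]:
    """Return (character length, UTF-8 byte size) for the given text."""
    return len(text), len(text.encode("utf-8"))


# "café" written with an escape so the accented letter is a single code point.
chars, size = size_in_chars_and_bytes("caf\u00e9")
print(chars, size)  # prints "4 5": the accented letter is 1 character but 2 bytes
```

Pages made up only of ASCII characters report the same value either way. Pages with accented or non-Latin text will show a lower content_size than before.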