After many months of waiting, and a few leaked screenshots, the new beta version of Google Search Console started its long-anticipated rollout this month.

As not everyone will have had the time to go through this new iteration in detail, I thought it would be useful to take a look at what’s new and, just as importantly, what’s missing from Search Console’s revamp.

Performance Report: Increased Date Range and Improved Filtering

Other than the updated interface, the most talked-about difference in the new beta version is the rebranded Search Analytics report, now more broadly labeled “Performance”.

The Performance report provides the same reporting functionality as Search Analytics, apart from a few changes:

Expanded Date Range

The new beta version now features 16 months (486 days) worth of impression, click, CTR and position data, as opposed to 90 days.

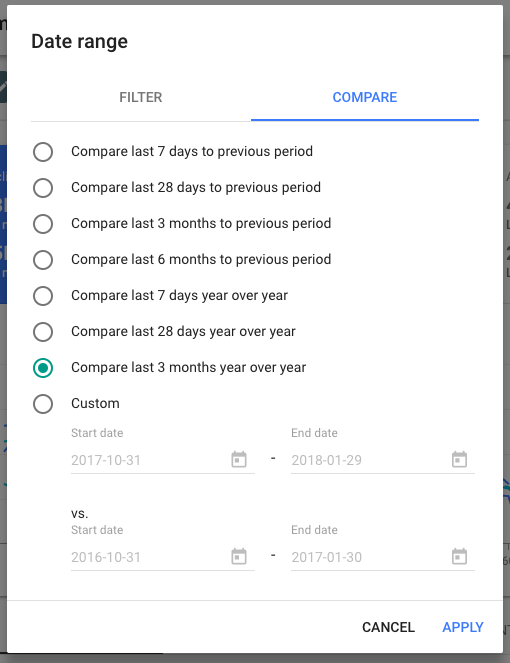

While there are options for aggregating this SERP data via the Search Console API, the updated date range is a welcome addition that enables webmasters to detect seasonal trends and allows for year-on-year comparisons within the UI.
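For anyone already storing daily data pulled from the API, here is a minimal sketch of rolling it up into monthly totals for trend spotting. It assumes each stored row keeps the shape of a Search Analytics API response row queried by date (`keys`, `clicks`, `impressions`, `position`); the function name `aggregate_by_month` is my own, and weighting position by impressions is an approximation rather than Google's documented method.

```python
from collections import defaultdict


def aggregate_by_month(daily_rows):
    """Roll daily rows up into monthly totals.

    Assumed row shape (one row per day):
    {"keys": ["2018-01-15"], "clicks": 10, "impressions": 200, "position": 4.2}
    """
    totals = defaultdict(
        lambda: {"clicks": 0, "impressions": 0, "weighted_position": 0.0}
    )
    for row in daily_rows:
        month = row["keys"][0][:7]  # "YYYY-MM-DD" -> "YYYY-MM"
        bucket = totals[month]
        bucket["clicks"] += row["clicks"]
        bucket["impressions"] += row["impressions"]
        # Weight position by impressions so busier days count for more.
        bucket["weighted_position"] += row["position"] * row["impressions"]

    summary = {}
    for month, bucket in sorted(totals.items()):
        impressions = bucket["impressions"]
        summary[month] = {
            "clicks": bucket["clicks"],
            "impressions": impressions,
            # CTR is recomputed from totals, not averaged across days.
            "ctr": bucket["clicks"] / impressions if impressions else 0.0,
            "position": (
                bucket["weighted_position"] / impressions if impressions else 0.0
            ),
        }
    return summary
```

Recomputing CTR from the summed clicks and impressions avoids the distortion you would get from averaging daily percentages.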

That said, Search Console is still limited to displaying 1,000 rows of SERP and report data, meaning that it shouldn’t be used for a full site analysis. In this case, crawling solutions like DeepCrawl are a much better option for providing a comprehensive analysis of your site’s technical health.

One minor issue I did notice when comparing the last 7 days to the same dates in the previous year is that the weekdays don’t match up. However, this can be overcome by using a custom date range.
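One way to work out that custom range is to shift back by exactly 52 weeks (364 days) rather than a calendar year, so that Mondays are compared with Mondays. This is a small sketch of that idea; the helper name `weekday_aligned_ranges` is hypothetical and nothing here comes from Search Console itself.

```python
from datetime import date, timedelta


def weekday_aligned_ranges(end, days=7):
    """Return (current, comparison) date ranges whose weekdays line up.

    Each range is a (start, end) tuple, inclusive on both sides.
    """
    start = end - timedelta(days=days - 1)
    shift = timedelta(weeks=52)  # 364 days keeps every weekday in place
    return (start, end), (start - shift, end - shift)


current, comparison = weekday_aligned_ranges(date(2018, 1, 28))
print("Current period:   ", current[0], "to", current[1])
print("Comparison period:", comparison[0], "to", comparison[1])
```

The comparison dates can then be entered by hand as the custom range in the date picker.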

Improved Filtering

Some slightly subtler changes have been made to the reporting within Performance – for better and for worse.

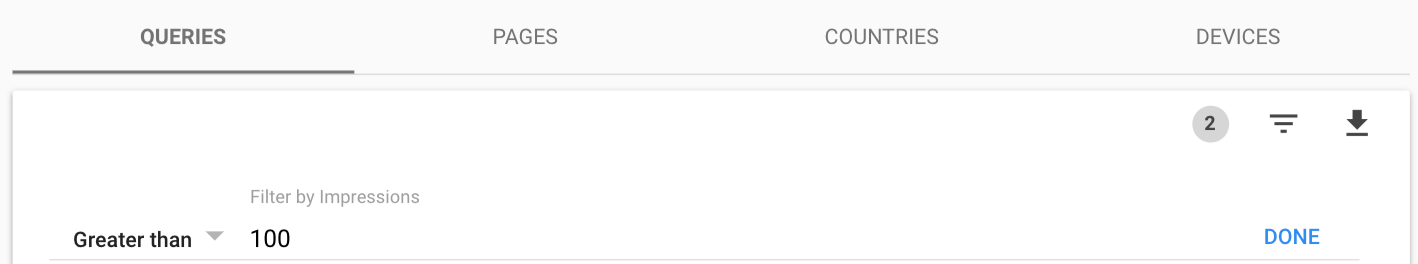

The beta version includes improved filtering that allows you to more easily dig down to the data that you’re interested in. By clicking on the Filter Rows icon in the top right of the report table, you can manipulate the data by applying rules (equals/not equals and greater than/smaller than) to the Clicks, Impressions, CTR and Position metrics.

This addition could be used to better filter down SERP metrics for more important pages or queries. For example, if you wanted to check changes in average position from month to month, you could compare the last 28 days to the previous period, then set a filter so that the report only displays queries with more than a set number of impressions.

Disappearance of the Difference Column

One omission in the new version is that this table no longer features the Difference column, which, when sorted, would handily show the biggest changes in any of the four metrics between the current and comparison periods. Of course, these insights can be gained after downloading the data into Excel or from other SERP tracking tools, but it’s a shame that this column has been removed.
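If you would rather script it than use Excel, the missing column is simple to rebuild from two downloaded periods. The sketch below assumes you have already loaded each period into a dictionary keyed by query; the function name `add_difference`, the field names and the sample numbers are all made up for illustration.

```python
def add_difference(current, previous, metric="position", min_impressions=0):
    """Join two periods by query and sort by the largest change in one metric.

    `current` and `previous` map a query string to a dict of metrics,
    e.g. {"impressions": 500, "position": 3.0}.
    """
    rows = []
    for query, now in current.items():
        before = previous.get(query)
        if before is None or now["impressions"] < min_impressions:
            continue  # skip queries with no comparison data or too little volume
        rows.append({
            "query": query,
            "current": now[metric],
            "previous": before[metric],
            "difference": now[metric] - before[metric],
        })
    # Biggest movers first, regardless of direction.
    return sorted(rows, key=lambda r: abs(r["difference"]), reverse=True)


# Illustrative data only.
last_28_days = {
    "seo crawler": {"impressions": 500, "position": 3.0},
    "site audit": {"impressions": 40, "position": 8.0},
    "log files": {"impressions": 900, "position": 6.0},
}
previous_28_days = {
    "seo crawler": {"impressions": 450, "position": 5.5},
    "site audit": {"impressions": 60, "position": 2.0},
    "log files": {"impressions": 800, "position": 5.0},
}

for row in add_difference(last_28_days, previous_28_days, min_impressions=100):
    print(row["query"], row["difference"])
```

The `min_impressions` threshold mirrors the impressions filter described earlier, so low-volume queries don't dominate the list of biggest movers. For position, a negative difference means the query has moved up.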

Index Coverage Reporting

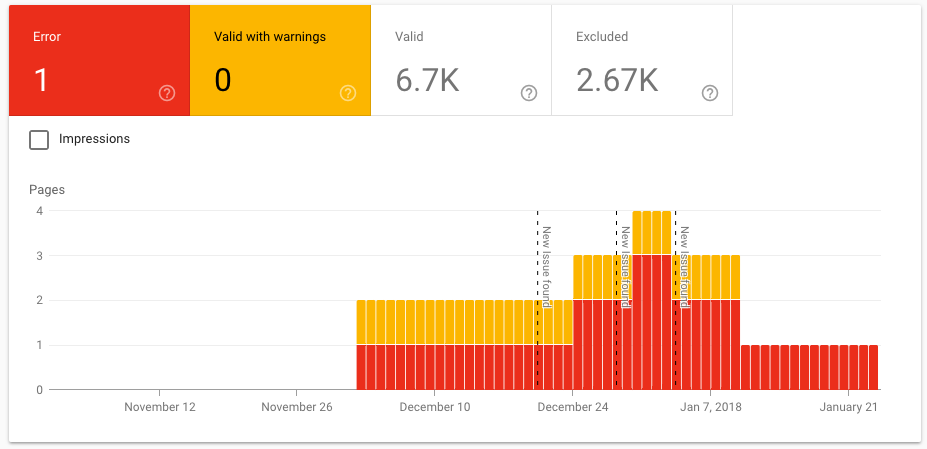

The reporting of indexed and non-indexed URLs is an area that has changed more significantly in the beta version. The Index coverage report replaces what was previously the Google Index section, and on the face of it, provides more detailed reporting regarding indexing issues.

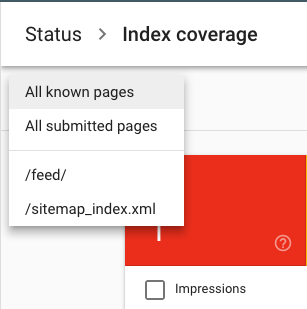

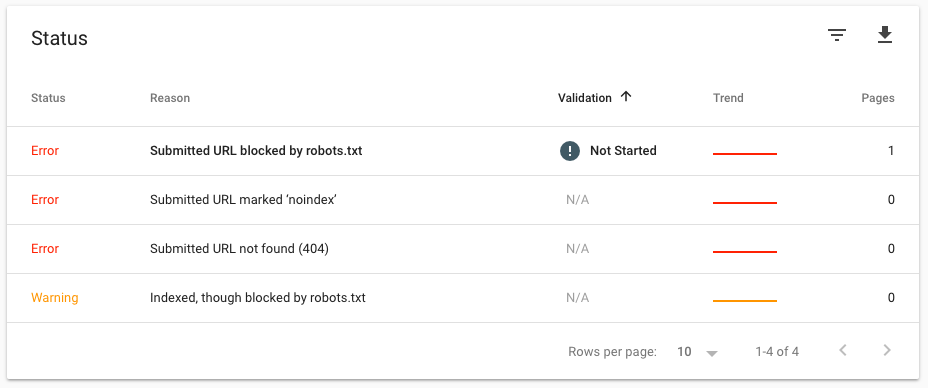

URLs in the Index coverage report are now split into four statuses (Errors, Valid, Valid with warnings and Excluded), which can be filtered by all known pages, all submitted pages and pages in sitemaps.

Most importantly, the Index coverage report includes more detail about the reasons behind these four statuses than was provided previously. Here are the new flags that are available in beta:

- Errors: Redirect error, Submitted URL blocked by robots.txt, Submitted URL marked ‘noindex’, Submitted URL seems to be a Soft 404, Submitted URL returns unauthorized request (401), Submitted URL not found (404), Submitted URL has crawl issue

- Warnings: Indexed, though blocked by robots.txt

- Valid: “Submitted and indexed”, “Indexed, not submitted in sitemap”, “Indexed; consider marking as canonical”

- Excluded: Blocked by ‘noindex’ tag, Blocked by robots.txt, Crawl anomaly, Crawled – currently not indexed, Discovered – currently not indexed, Alternate page with proper canonical tag, Duplicate page without canonical tag, Duplicate non-HTML page, Google chose different canonical than user, Page removed because of legal complaint, Page with redirect, Queued for crawling, Submitted URL dropped, Submitted URL not selected as canonical

Issues With the Index Coverage Report

Although this more granular reporting is useful in diagnosing reasons why pages aren’t indexed, there’s a frustrating lack of detail in the explanations of some of these new flags that makes it difficult to take action.

Let’s take a look at a few examples:

Crawled – currently not indexed: The page was crawled by Google, but not indexed. It may or may not be indexed in the future; no need to resubmit this URL for crawling.

This explanation provides a description of Google’s actions but gives no insight as to why the URL hasn’t been indexed or whether it will be. It is worth investigating pages in this report to identify the cause, as it may point to a quality issue.

However, of the URLs we’ve seen that fall into this report so far, all of them looked to be indexed following a manual check. This brings into question how useful this report actually is if it isn’t updated frequently enough to be current.

Discovered – currently not indexed: The page was found by Google, but not crawled yet.

Similarly, with this report, it’s hard to understand how this provides useful information. What use is it knowing which pages have been discovered but not yet crawled if the report isn’t updated frequently enough to give an accurate reflection?

Submitted URL has crawl issue: You submitted this page for indexing, and Google encountered an unspecified crawling error that doesn’t fall into any of the other reasons. Try debugging your page using Fetch as Google.

This report appears to be a catch-all for the crawl issues Google couldn’t categorise. Again, this is a bit vague and not particularly useful in helping you understand the reason behind the issue. It may be that URLs in this report return HTTP response codes that aren’t recognised, but a clearer explanation is required.

While the reports in the old version of Search Console were less detailed, they at least had clear explanations accessible by clicking on the affected URL in the report. This is something that needs to be improved in the updated version of the tool.

Looking through the Index Coverage report you will also see that the ability to filter crawl errors by desktop, smartphone and news no longer exists.

Discrepancy in Index Count Across Versions

On top of these issues, the new version doesn’t provide a full count of indexed pages. In theory, adding together the number of Valid (indexed) pages and Valid pages with warnings should give the total number of indexed pages, but this number doesn’t align with the Total indexed figure in the old version.

Having checked across several properties, the new version of Search Console always seems to be reporting fewer indexed URLs than the old one, which is a discrepancy that could do with some clarification.
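To keep an eye on that gap for your own properties, the comparison boils down to a quick sum. This is only a sketch: the function name `index_count_gap` is hypothetical and the figures in the example are invented, so replace them with the counts you read off each version of the tool.

```python
def index_count_gap(valid, valid_with_warnings, old_total_indexed):
    """Compare the new version's implied indexed total with the old figure.

    Returns (new_total, gap, gap_share), where gap is how many fewer URLs
    the new version reports and gap_share is that gap as a fraction of the
    old Total indexed figure.
    """
    new_total = valid + valid_with_warnings
    gap = old_total_indexed - new_total
    gap_share = gap / old_total_indexed if old_total_indexed else 0.0
    return new_total, gap, gap_share


# Invented numbers, purely to show the calculation.
new_total, gap, gap_share = index_count_gap(
    valid=900, valid_with_warnings=50, old_total_indexed=1000
)
print(f"New version: {new_total}, gap: {gap} ({gap_share:.1%} of old total)")
```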

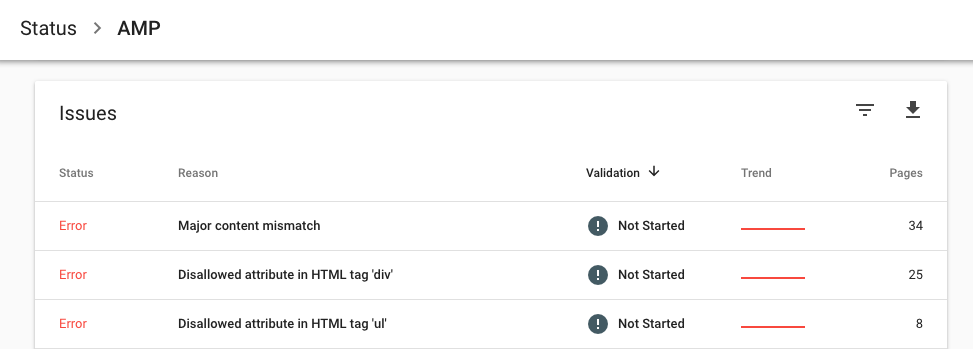

AMP Reports

The last section in the Status reporting is the Enhancements section with an AMP report detailing errors in a similar way to Index Coverage. This is largely the same as the Accelerated Mobile Pages report in the old version, although the priority column has been dropped in favour of Validation status and Trend columns.

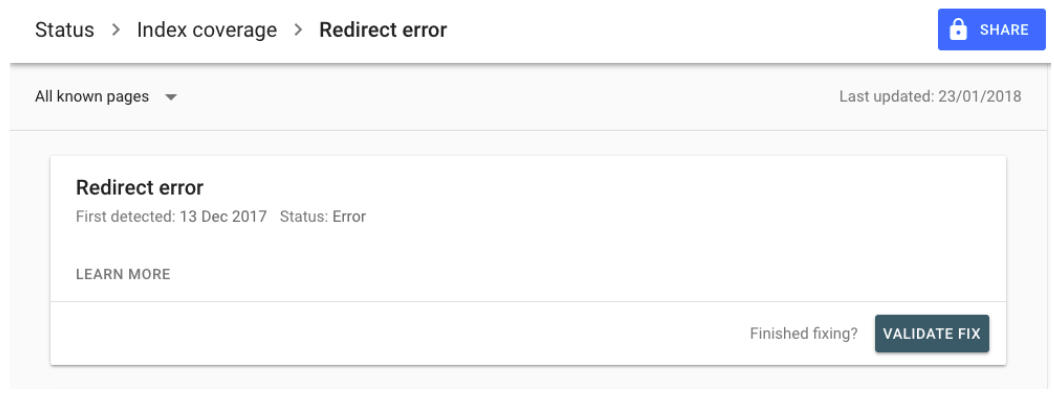

Validate Fix Feature

An interesting addition across the Index Coverage and AMP reports is the ability to validate a fix: once a crawl or AMP issue has been resolved, you can re-submit the affected URLs to be crawled. However, a downside is that it is only possible to validate fixes for groups of URLs with the same issue rather than on a URL-by-URL basis, which limits its flexibility.

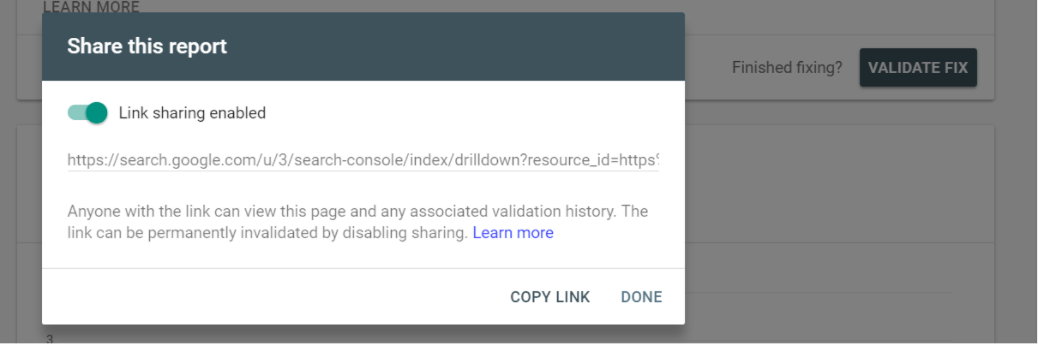

Report Sharing Feature

One final new feature in the Index coverage and AMP reports is link sharing. This allows you to share specific reports with anyone who has a Google login, even if they don’t have access to the Search Console property. This is a great way of sharing issues with developers.

What to Make of the Beta Version?

Overall, there are some very promising improvements that have been made in the new version of Search Console. The improved UI, extended date range for SERP data, better filtering and increasingly granular error reporting are all exciting and useful changes.

However, this is still very much a beta version and it is a bit surprising that Google has decided to roll out this new version at what appears to be an early stage. There is still work to be done to better explain the new reports, allow for more flexibility with the Validate fix function and replicate some reports that aren’t yet available in the new version (like the Search Appearance reports).

As it stands, only half of the features in the old version are present in the new one, and the new version doesn’t yet include a much-requested list of all pages indexed in Google. Google has previously spoken about improving the Fetch and Render tool, so maybe this and other updates are in the pipeline.

But let’s see how this central SEO tool evolves over the coming months.

In the meantime, our handy guide explains how to integrate Google Search Console data into your DeepCrawl crawls.