Chapter 5: How to Test JavaScript Rendering for Your Site

Now that we know the main areas in which JavaScript can negatively impact websites in search, let’s explore some of the ways you can optimise your website and make sure it performs for both users and search engines.

Here are five key pieces of advice for optimising your JavaScript-powered website:

- Prioritise your most important content and resources.

- Provide options for clients that can’t render fully.

- Use clean URLs, links and markup.

- Reduce file transfer size.

- Enhance JavaScript performance.

Prioritise your most important content and resources

Sending all of your JavaScript to the browser or search engine in the initial request, to be rendered client-side, is a bad idea. This is a big ask even for users with higher-end devices and for Google, which has relatively sophisticated rendering capabilities.

A much better strategy is to focus on the core content of the page, such as the above-the-fold hero content, and make sure that it is available first.

Prioritising the most important content on a page to load first can dramatically improve the user’s perception of load time.

Here are some solutions to look into for helping to prioritise key resources:

- Optimise the critical rendering path: Make sure that none of the most important resources on a page are being blocked and that they can render as soon as possible.

A critical resource is a resource that could block initial rendering of the page. The fewer of these resources, the less work for the browser, the CPU, and other resources.

- Utilise async and defer loading: Using the async and defer attributes allows the DOM to be constructed without being interrupted by the loading of scripts. Non-essential scripts are downloaded in the background, keeping the critical rendering path unblocked.

Async scripts will download without blocking the rendering of the page and will execute as soon as they finish downloading. You get no guarantee that scripts will run in any specific order, only that they will not stop the rest of the page from displaying. It is best to use async when the scripts in the page run independently from each other and depend on no other script on the page. Deferred scripts also download without blocking, but they wait to execute until the document has been parsed and they run in the order in which they appear, so defer suits scripts that depend on each other.

- Implement progressive enhancement: This focuses on the core content of a page first, then progressively adds more technically advanced features on top of that if the conditions of the client allow for it. This means that the main content is accessible to any browser or search engine requesting it, no matter what its rendering capabilities are.

Rather than copying bad examples from the history of native apps, where everything is delivered in one big lump, we should be doing a little with a little, then getting a little more and doing a little more, repeating until complete. Think about the things users are going to do when they first arrive, and deliver that. Especially consider those most likely to arrive with empty caches.
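As a rough illustration of the async and defer attributes described above, the sketch below builds script tags for a small manifest. The file paths and the `independent` flag are assumptions made up for this example, not part of any real site: scripts that depend on nothing else get async, while scripts that must run in a set order get defer.

```javascript
// Hypothetical manifest: paths and the `independent` flag are illustrative only.
const scripts = [
  { src: "/js/analytics.js", independent: true },  // no dependencies: can run whenever it arrives
  { src: "/js/vendor.js", independent: false },    // app.js relies on this running first
  { src: "/js/app.js", independent: false },
];

// Independent scripts get `async`; order-sensitive scripts get `defer`,
// which preserves document order and runs after HTML parsing finishes.
function renderScriptTags(list) {
  return list
    .map(({ src, independent }) =>
      `<script src="${src}" ${independent ? "async" : "defer"}></script>`)
    .join("\n");
}

console.log(renderScriptTags(scripts));
```

Neither attribute blocks the parser while the file downloads, so the core content of the page can be displayed before any of these scripts run.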

Provide options for clients that can’t render fully

As we discussed earlier in this guide, not every client will be able to render fully. This is why it’s important to make sure that your pages are still usable on clients that can’t run the latest JavaScript features.

This process is referred to as graceful degradation, meaning that the website or application will fall back to a less complex experience if the client is unable to handle some of the latest JavaScript features.

It’s always a good idea to have your site degrade gracefully. This will help users enjoy your content even if their browser doesn’t have compatible JavaScript implementations. It will also help visitors with JavaScript disabled or off, as well as search engines that can’t execute JavaScript yet.

–Google Webmaster Central Blog

Make sure your website works, caches and renders for the most basic browser, device or crawler, and has a baseline level of performance that works for everyone.
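One common way to provide a fallback is feature detection: check whether the client supports a capability before relying on it. The following is a minimal sketch under that assumption. The function name, the strategy labels and the choice of `IntersectionObserver` as the feature being tested are all hypothetical, picked only to illustrate the idea.

```javascript
// Returns the richest image-loading strategy the client supports.
// `env` stands in for the browser's global object (window); passing it in
// is an assumption of this sketch that makes the check easy to try anywhere.
function chooseImageStrategy(env) {
  if (typeof env.IntersectionObserver === "function") {
    return "lazy-load-on-scroll"; // enhanced experience
  }
  return "plain-img-tags"; // baseline: ordinary <img> tags that need no script
}

// A client without IntersectionObserver falls back to the baseline.
console.log(chooseImageStrategy({})); // plain-img-tags
// A client that supports it gets the enhancement.
console.log(chooseImageStrategy({ IntersectionObserver: function () {} })); // lazy-load-on-scroll
```

The important design choice is that the baseline works without the feature, so the content is still available when the check fails.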

With regard to the discussion surrounding “JavaScript vs. SEO”, it should first be clearly stated that JavaScript is not “bad” per se. However, the use of JavaScript certainly increases the complexity in terms of search engines, as well as web performance optimisation.

Essentially, SEOs should ensure that there is a version of their website available that works without JavaScript. This could be achieved, for example, by using Puppeteer, a Node library that can be used to control a headless Chrome browser. This browser could then be used to crawl either a JavaScript-heavy website or even a single-page JavaScript application and, as a result, generate pre-rendered content.

As it currently stands, choosing to rely heavily on JavaScript should be carefully considered. It’s not impossible to rank JavaScript-powered sites, nor is it impossible to make them fast. However, be aware that it adds a great deal of complexity on many different levels, all of which need to be dealt with!
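The Puppeteer approach mentioned above could look something like the sketch below. It is not a complete pre-rendering setup: the function name `prerender` is hypothetical, the Puppeteer module is passed in as an argument (an assumption of this example, so the function can be tried with a stand-in object), and `networkidle0` is just one possible signal that the page has finished rendering.

```javascript
// Renders `url` in headless Chrome and returns the resulting HTML.
// `puppeteer` is the Puppeteer module, passed in by the caller so the
// function can also be exercised with a stand-in object.
async function prerender(puppeteer, url) {
  const browser = await puppeteer.launch();
  try {
    const page = await browser.newPage();
    // Wait until the network has gone quiet so client-side content has loaded.
    await page.goto(url, { waitUntil: "networkidle0" });
    return await page.content(); // serialised HTML of the rendered DOM
  } finally {
    await browser.close(); // always release the browser, even on errors
  }
}

// Usage, assuming Puppeteer is installed (npm install puppeteer):
// prerender(require("puppeteer"), "https://example.com/").then(console.log);
```

The returned HTML can then be saved and served to clients that can’t render the page themselves.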

Graceful degradation doesn’t just benefit Google; it also helps other search engines like Bing that struggle with rendering.

The technology used on your website can sometimes prevent Bingbot from being able to find your content. Rich media (Flash, JavaScript, etc.) can lead to Bing not being able to crawl through navigation, or not see content embedded in a webpage. To avoid any issue, you should consider implementing a down-level experience which includes the same content elements and links as your rich version does. This will allow anyone (Bingbot) without rich media enabled to see and interact with your website.

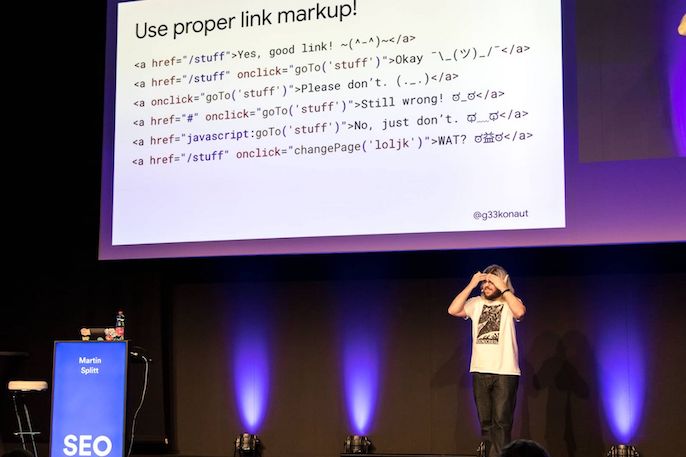

Use clean URLs, links and markup

Each page should have a unique, indexable URL which shows its own content. JavaScript can complicate this, however, as it can be used to dynamically alter or update the content and links on a page, which can cause confusion for search engines.

Make sure your website uses only clean links, written as <a> tags with an href attribute, so that they are discoverable and clear for search engines to follow and pass on signals to. Using <a> tags for links also helps people browsing with assistive technologies, not just search engine crawlers.

Image source: sblum
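To make the idea of a clean link more concrete, here is a small sketch that checks whether an href value gives a crawler a real URL to follow. The function name and the base URL are hypothetical, and the rules are a simplification: the sketch treats fragment-only links as not leading to a distinct page, which is an assumption of this example and not a statement of how any particular search engine behaves.

```javascript
// Decides whether an href value gives crawlers a real URL to follow.
// The base URL is a placeholder used only to resolve relative links.
function isCrawlableHref(href, base = "https://example.com/") {
  if (!href) return false; // <a> with no href, e.g. a click handler only
  const value = href.trim();
  if (value === "" || value.startsWith("#")) return false; // fragment-only
  let url;
  try {
    url = new URL(value, base);
  } catch {
    return false; // not a parseable URL
  }
  // Rejects javascript:, mailto: and other non-page schemes.
  return url.protocol === "http:" || url.protocol === "https:";
}

for (const href of ["/products/shoes", "#reviews", "javascript:void(0)", undefined]) {
  console.log(String(href), isCrawlableHref(href));
}
```

In this sketch only the first value, a normal path, counts as a link that can be followed.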

This also applies to markup, so make sure key markup is structured clearly and displayed in the HTML where possible for search engines. This includes:

- Page titles

- Meta descriptions

- Canonical tags

- Robots meta tags

- Alt attributes

- Image source attributes

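Check that these are in place using ‘Inspect’ in the browser, and compare against ‘View page source’ to confirm that they are present in the initial HTML and not only added later by JavaScript.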

Make sure URLs, links and markup aren’t served via JavaScript and that they’re clearly accessible in the HTML, so search engines can find and process them.
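A quick way to check the points above is to look at the raw HTML response, before any JavaScript has run, and see which key tags are present. The sketch below does this with regular expressions, which is a rough heuristic and not a real HTML parser. The function name and the sample page are made up for illustration.

```javascript
// Reports which key tags appear in a raw HTML string (the server response
// before any JavaScript runs). Regex checks are a rough heuristic, not a parser.
function auditMarkup(html) {
  return {
    title: /<title[^>]*>[^<]*\S[^<]*<\/title>/i.test(html), // non-empty title
    metaDescription: /<meta[^>]+name=["']description["'][^>]*>/i.test(html),
    canonical: /<link[^>]+rel=["']canonical["'][^>]*>/i.test(html),
    robots: /<meta[^>]+name=["']robots["'][^>]*>/i.test(html),
  };
}

// Sample response for a hypothetical JavaScript-rendered page.
const rawHtml = `<!doctype html>
<html><head>
  <title>Red running shoes</title>
  <link rel="canonical" href="https://example.com/shoes/red">
</head><body><div id="app"></div></body></html>`;

console.log(auditMarkup(rawHtml));
```

For this sample, the title and canonical tag are found in the raw HTML, while the meta description and robots meta tag are not, so they would either be missing or depend on JavaScript to be added.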

Here’s what Google’s Martin Splitt recommends for optimising web apps for search engines and users:

1. Use good URLs: URLs should be reliable, user-friendly and clean.

2. Use meaningful markup: Use clean links and clear page title, meta description and canonical tags.

3. Use the right HTTP status codes: Avoid soft 404s so Google can serve the right pages.

4. Use structured data: This will help search engines better understand your content.

5. Know the tools: Test your content with the Mobile-friendly Test, Structured Data Testing Tool and Google Search Console.
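The point about HTTP status codes can be sketched as follows. A soft 404 happens when a missing page is returned with a 200 status and a “not found” message. The content store and function name below are hypothetical stand-ins for whatever your server or framework actually uses.

```javascript
// Hypothetical content store standing in for a database or API.
const pages = new Map([["/shoes/red", "<h1>Red running shoes</h1>"]]);

// Returns the status and body a server should send for a path.
// Missing content gets a real 404 instead of a 200 "not found" page (a soft 404).
function respond(path) {
  const body = pages.get(path);
  if (body === undefined) {
    return { status: 404, body: "<h1>Page not found</h1>" };
  }
  return { status: 200, body };
}

console.log(respond("/shoes/red").status);  // 200
console.log(respond("/shoes/blue").status); // 404
```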

Reduce file transfer size

Reducing the file size of a page and its resources where possible will improve the speed with which it can be rendered. You can do this by making sure that only the necessary JavaScript code is being shipped.

Some ways of reducing file size are:

- Minification: Removing redundant code that has no function, such as whitespace or code comments.

- Compression: Use GZIP or zlib to compress text-based resources. Remember that a compressed file still has to be downloaded and then decompressed, and the resulting code still has to be parsed and executed.

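- Code splitting: This involves dividing a large JavaScript bundle into smaller chunks that are loaded only when they are needed. Less code has to be downloaded, parsed and executed up front, so the main thread is less likely to become blocked and is still able to react to user interactions. This increases the perceived speed of a page for users.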

To stay fast, only load JavaScript needed for the current page. Prioritize what a user will need and lazy-load the rest with code-splitting. This gives you the best chance at loading and getting interactive fast.

Learn how to audit and trim your JavaScript bundles. There’s a high chance you’re shipping full-libraries when you only need a fraction, polyfills for browsers that don’t need them, or duplicate code.

Ship less code with a smaller file size so there is less for browsers and search engines to have to download and process.
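Lazy-loading with code splitting is often done with a dynamic `import()`, which many bundlers turn into a separate chunk. The sketch below shows the general shape under that assumption. The `lazy` helper, the `./chart.js` module and its `render` function are hypothetical names used only for this example.

```javascript
// Wraps a loader so the chunk is requested once, on first use, and reused afterwards.
function lazy(loader) {
  let pending; // holds the promise after the first call
  return () => {
    if (!pending) pending = loader();
    return pending;
  };
}

// "./chart.js" is a hypothetical module; bundlers such as webpack or Rollup
// typically emit a dynamic import() like this as a separate chunk.
const loadChart = lazy(() => import("./chart.js"));

// Nothing is downloaded until the user actually asks for the chart:
// button.addEventListener("click", async () => {
//   const chart = await loadChart();
//   chart.render();
// });
```

The initial page load then only pays for the code it needs, and the chart code is fetched the first time a user asks for it.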

Enhance JavaScript performance

JavaScript doesn’t have to dramatically decrease site speed; there are things you can do to optimise the performance of the JavaScript on your website. Enhancing JavaScript performance should be a focus topic for developers and SEOs alike going forwards. Here are some methods to potentially implement on your site:

- Cache resources: This is especially helpful for users making repeat visits, and for server-side rendered or dynamically rendered content, as too many pre-render requests at once can slow down your server.

- Preload resources: This allows you to tell the browser which scripts and resources are the most important and should be loaded first as a priority.

- Prefetch links: This allows the browser to proactively fetch links and documents that a user is likely to visit soon within their journey, and store them in its cache. This means that the resource will load very quickly once a user goes to access it.

- Implement script streaming: This can be used to parse scripts in the background on a different thread to prevent the main thread from being blocked.

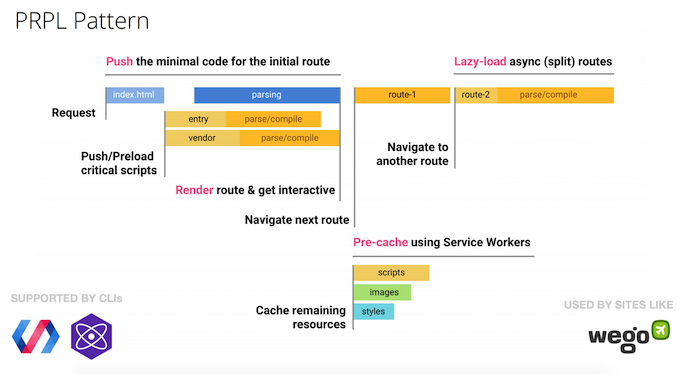

- Use the PRPL pattern: PRPL is an acronym for the steps that make up the pattern: Push, Render, Pre-cache and Lazy-load. It is recommended for improving performance and load times, especially on mobile devices.

Source: Google Web Fundamentals

Use methods such as caching, prefetching and preloading to make any necessary JavaScript code more performant and load faster.
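Preloading and prefetching are usually expressed as `<link>` tags in the head of the document. As a minimal sketch, the helper below generates those tags from two lists. The function name, the file paths and the `/checkout` page are assumptions made up for this example.

```javascript
// Builds <link> resource hints for the document <head>.
// `critical` are needed by the current page; `nextPages` are likely next navigations.
function resourceHints({ critical = [], nextPages = [] }) {
  const preload = critical.map(
    ({ href, as }) => `<link rel="preload" href="${href}" as="${as}">`
  );
  const prefetch = nextPages.map(
    (href) => `<link rel="prefetch" href="${href}">`
  );
  return [...preload, ...prefetch].join("\n");
}

console.log(resourceHints({
  critical: [
    { href: "/js/app.js", as: "script" },
    { href: "/css/main.css", as: "style" },
  ],
  nextPages: ["/checkout"],
}));
```

Preload is for resources the current page needs soon, while prefetch is a lower-priority hint for resources a later page is likely to need.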

In conclusion

SEOs and developers need to learn to work better together to get their websites discovered, crawled and indexed by search engines, and, therefore, seen by more users. Both departments have a lot of influence over the different aspects of this process and we share common goals of serving optimised websites that perform well for both search engine bots and humans.

Source: Martin Splitt, FrontEnd Connect

JavaScript rendering and page loading can be an expensive, time-consuming process, but it can be tailored to either the user or search engine to help them achieve their main goals. For users, you can prioritise loading what they need immediately as they browse, which increases perceived site speed. For search engines, you can provide alternative rendering methods such as server-side rendering to make sure they can access your core content despite their limited rendering capabilities.

The landscape of JavaScript is constantly evolving and we all need to do our part to keep up and learn more about it, for the sake of our websites’ health and performance.

SEOs need to stay ahead of the curve when it comes to JavaScript. This means a lot of research, experimentation, crunching data, etc. It also means being ready for the next big thing that probably dropped yesterday and you now need to adapt to, whether it’s Google finally updating its rendering browser or one of the countless JavaScript frameworks suddenly becoming popular among developers.

The best way to make sure your JavaScript-powered website or application performs as it should is to conduct regular JavaScript audits looking at the key issues laid out in this guide. Keep crawling, monitoring and testing your website for rendering speed, blocked scripts and content differences between the unrendered and rendered content. This way you can keep on top of any issues that are putting a roadblock between users and search engines, and your website’s content.
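Checking for content differences between the unrendered and rendered versions of a page can be approximated with a simple word comparison. The sketch below is deliberately crude: it strips tags with regular expressions instead of parsing the HTML, and the function names and sample markup are hypothetical. It assumes you already have both versions of the page as strings, for example from a raw fetch and from a headless browser.

```javascript
// Crude text extraction: drops scripts, styles and tags, then collects lowercase words.
function visibleWords(html) {
  const text = html
    .replace(/<(script|style)[\s\S]*?<\/\1>/gi, " ")
    .replace(/<[^>]+>/g, " ");
  return new Set(text.toLowerCase().match(/[a-z0-9]+/g) || []);
}

// Words that only exist after rendering, i.e. content that depends on JavaScript.
function renderedOnlyWords(rawHtml, renderedHtml) {
  const raw = visibleWords(rawHtml);
  return [...visibleWords(renderedHtml)].filter((word) => !raw.has(word));
}

const unrendered = '<div id="app"></div><script>render()</script>';
const rendered = '<div id="app"><h1>Red running shoes</h1></div>';
console.log(renderedOnlyWords(unrendered, rendered)); // [ 'red', 'running', 'shoes' ]
```

A long list of rendered-only words on an important page suggests that its core content is only available to clients that can execute JavaScript.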