Are you planning a major change to your current website, such as a relaunch, redesign or migration?

There are a few things, often overlooked by designers and web developers, that might cause Google to re-evaluate your website and lead to a drastic drop in your rankings.

Before we get into that, let’s look at some of the common reasons for these major changes.

Potential objectives of a website relaunch include:

- increased sales & traffic,

- improved engagement,

- better conversion rates,

- enhanced usability / better UX, and

- reduced support and maintenance costs.

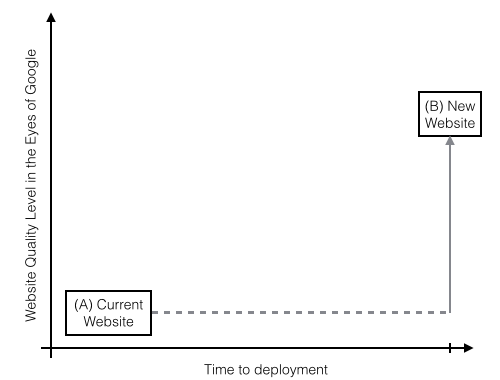

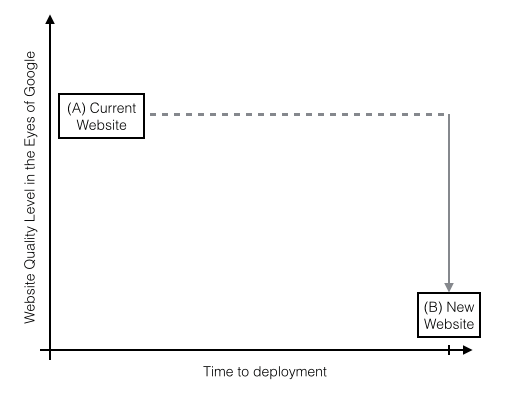

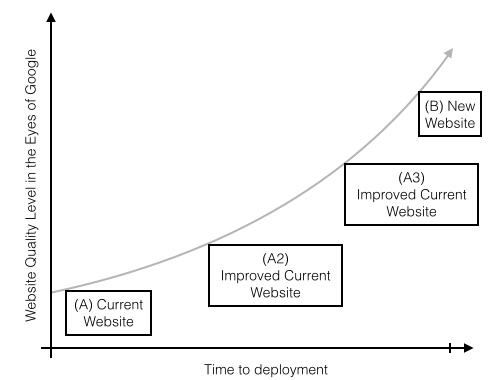

While some traffic channels, such as paid search, are easily redirected from the old website to the new one, organic traffic is subject to Google’s evaluation. Depending on the extent of the changes, Google will take its time to decide whether or not the new website is an improvement. Take Googlebot’s perspective for a moment: it is completely unaware of the months of planning and work that went into the new project. When you relaunch, it is presented with a different website without any prior notice. Depending on how much actually changes, especially on a structural level, the bot needs to understand everything anew and will rate your new website as either better or worse than your current one.

Caption: Scenario 1: How Google sees a relaunch of a better version, without any gradual improvements.

Caption: Scenario 2: How Google sees a relaunch of an inferior version.

Why Sudden Changes Should Be Avoided

If the new website is a significantly different project (for example, one with a new URL structure, a new architecture resulting from dramatic changes to internal linking, and rewritten content), you may lose the rankings of your most important keywords for a few weeks or even months.

Caption: Visibility of a major retail company’s website after a relaunch in late 2013 in a competitive field. The drop was not caused by a penalty.

Know the business value of your organic traffic

Do you know how significant organic traffic is to your business’s bottom line? Putting a dollar figure on a possible nosedive is vital for keeping things in perspective.
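A rough estimate is usually enough for this. The sketch below multiplies monthly organic sessions by conversion rate and average order value to get monthly organic revenue, then applies an assumed percentage drop over an assumed recovery period. The function name and every input are hypothetical placeholders; substitute figures from your own analytics and treat the result as an order-of-magnitude estimate, not a forecast.

```python
def organic_revenue_at_risk(monthly_organic_sessions: int,
                            conversion_rate: float,
                            average_order_value: float,
                            expected_drop: float,
                            months_to_recover: int) -> float:
    """Estimate revenue lost if organic traffic falls by `expected_drop`
    (a fraction, e.g. 0.4 for 40%) for `months_to_recover` months.

    All parameters are illustrative assumptions, not measured values.
    """
    monthly_revenue = monthly_organic_sessions * conversion_rate * average_order_value
    return monthly_revenue * expected_drop * months_to_recover


if __name__ == "__main__":
    loss = organic_revenue_at_risk(
        monthly_organic_sessions=100_000,  # hypothetical
        conversion_rate=0.02,              # hypothetical: 2%
        average_order_value=80.0,          # hypothetical: $80
        expected_drop=0.4,                 # hypothetical: 40% drop
        months_to_recover=3,               # hypothetical recovery period
    )
    print(f"Estimated revenue at risk: ${loss:,.0f}")
```

With these illustrative inputs (100,000 sessions, 2% conversion, $80 average order value, a 40% drop lasting three months), the estimate comes to $192,000.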

Gradual Improvements for a Smooth Transition

Instead of just “switching” from one version of your website to another, a better approach is to introduce gradual improvements to your current website. This way, you can move iteratively and safely towards the new version of your site:

Caption: How Google sees a relaunch with gradual improvements.

Following this approach does require additional development resources, but it gives Google time to digest and evaluate each of those changes. An iterative process also helps you, the website owner, understand whether your new website will deliver the intended results, and identify along the way the technical requirements it has to fulfil.

Caption: Gradual improvements before launching the new website

If organic rankings matter to your business, it is therefore worth allocating budget to improving your existing site before launching a new one.

The following framework will help you mitigate risk and increase the chances of launching a website that keeps its rankings or performs even better in organic search.

Considering a relaunch? Work your way up to it. Consider these points as soon as possible.

The best approach is to follow this sequence:

- Fix what is broken first

- Keep what’s working

This way, you can uncover and remove barriers that limit your current website and make use of the available time to feed Google digestible chunks. You will understand which specific pages on your current website perform well in search and how your website architecture supports those rankings. Lumar (formerly Deepcrawl) lets you combine Google Analytics data with your crawl data to identify those pages easily. This knowledge can then guide the design and development process for the new website, and you can also improve the internal PageRank of selected pages on the current site. It also means you have visibility into what is happening rather than blindly pushing the new site live.
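Lumar does this join for you; the sketch below only illustrates the underlying idea of matching analytics data to crawl data by URL. It assumes two hypothetical CSV exports, one from a crawl and one from an analytics tool, with made-up column names (`url`, `indexable`, `inlinks`, `page`, `organic_sessions`). Real exports will differ, so treat the names and the example rows as placeholders.

```python
import csv
import io

# Hypothetical exports: column names and rows are placeholders, not the
# actual export format of Lumar or Google Analytics.
CRAWL_CSV = """url,indexable,inlinks
https://www.example.com/,true,120
https://www.example.com/shoes,true,85
https://www.example.com/shoes?sort=price,false,85
"""

ANALYTICS_CSV = """page,organic_sessions
https://www.example.com/shoes,5400
https://www.example.com/,3100
https://www.example.com/old-page,40
"""


def top_organic_pages(crawl_csv: str, analytics_csv: str, limit: int = 10) -> list[dict]:
    """Join crawl and analytics rows on URL and rank by organic sessions."""
    crawl = {row["url"]: row for row in csv.DictReader(io.StringIO(crawl_csv))}
    joined = []
    for row in csv.DictReader(io.StringIO(analytics_csv)):
        page = crawl.get(row["page"])
        if page is None:
            # Traffic on a URL the crawler never reached: worth a manual look.
            continue
        joined.append({
            "url": row["page"],
            "organic_sessions": int(row["organic_sessions"]),
            "inlinks": int(page["inlinks"]),
            "indexable": page["indexable"] == "true",
        })
    joined.sort(key=lambda r: r["organic_sessions"], reverse=True)
    return joined[:limit]


if __name__ == "__main__":
    for page in top_organic_pages(CRAWL_CSV, ANALYTICS_CSV):
        print(page["url"], page["organic_sessions"], page["inlinks"])
```

The pages at the top of such a list, and the internal links pointing to them, are the ones the new site’s architecture should preserve.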

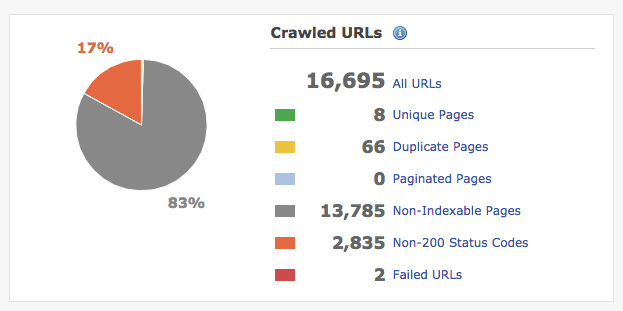

1. Fix what is broken

This part is pretty straightforward. You start by crawling the site to see if there are structural issues. While this seems rather obvious, it is often missed by website owners or development agencies.

Here is a crawl report of a leading ecommerce site that is currently working on a relaunch. It is clear that a few adjustments, such as fixing its site-wide canonical issues and improving crawlability and indexation, would almost certainly be rewarded with better Google rankings.

Every project can benefit from a quick check that addresses some of the technical challenges. Strategic considerations are probably not needed at this point, which in turn allows for faster decision-making and a quicker start on improving the site.
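Site-wide canonical issues like those in the crawl report above are a typical structural problem a crawl surfaces. As a minimal illustration of what such a check looks at (not how Lumar implements it), this sketch uses Python’s standard-library `html.parser` to read the `rel="canonical"` link from a page’s HTML and classify it. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values may be None.
        if tag != "link":
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        href = attributes.get("href")
        if "canonical" in rel_values and href:
            self.canonicals.append(href)


def check_canonical(page_url: str, html: str) -> str:
    """Classify a page's canonical tag (hypothetical helper for illustration)."""
    parser = CanonicalParser()
    parser.feed(html)
    parser.close()  # flush any buffered input
    if not parser.canonicals:
        return "missing"
    if len(set(parser.canonicals)) > 1:
        return "conflicting"
    return "self" if parser.canonicals[0] == page_url else "points elsewhere"


if __name__ == "__main__":
    html = '<head><link rel="canonical" href="https://www.example.com/shoes"></head>'
    print(check_canonical("https://www.example.com/shoes?sort=price", html))
```

A canonical that points elsewhere is expected on filtered or parameterised URLs like the one in the example; on a key landing page, it usually means that page is being declared a duplicate of something else.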

2. Keep what is working

Maintaining existing rankings should be a minimum requirement for pretty much any website relaunch. Therefore, you need to understand what is actually working, why it works and how you can transfer the good parts to the new website with the available technology.

“Everything new” is not a great idea in this case. While the visual design can change, the flow of internal link equity should not change in a way that reduces the internal PageRank of the URLs assessed in step one.

If you plan to migrate to a new CMS or ecommerce system, base that decision on a deep understanding of its strengths and weaknesses from a variety of perspectives. If you do not have full control over how the content you enter in the backend is made accessible and displayed on the frontend, keeping what is working can be hard. Some systems generate large amounts of duplicate content out of the box, which can be a challenge to get under control. The label “SEO optimized” is often nothing more than a marketing ploy, and choosing a system without proper due diligence might send you straight into the fangs of Google Panda.

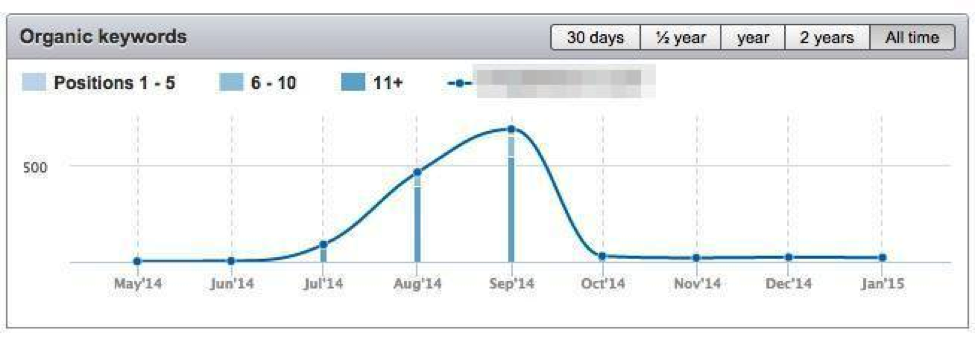

Here is an example of rankings over time for a fairly new, medium-sized ecommerce project. It performed well in organic search until Google rolled out Panda 2.0:

Caption: Number of organic keywords for a website with a Panda 2.0 penalty in September 2014.

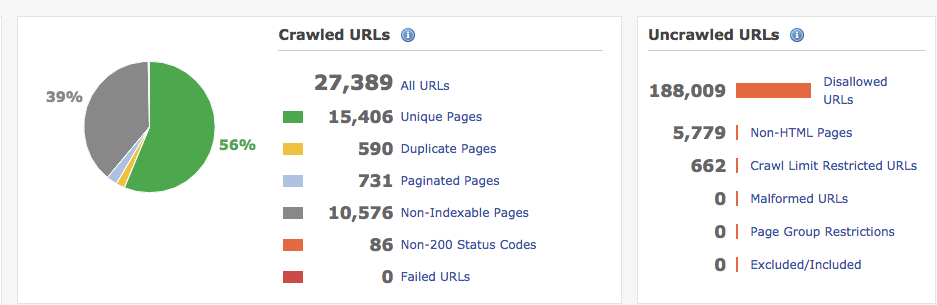

It has about 1,200 unique products, 50 content pages and 330 categories. With a proper on-page setup for this new site, the crawler should identify slightly more than 1,500 unique pages (1,200 + 50 + 330 = 1,580). However, Lumar (formerly Deepcrawl) found over 27,000 pages and an additional 188,000 blocked URLs. This massive, and probably unwanted, creation of low-quality pages stems both from the ecommerce platform itself and from the development work. While the latter can be fixed, the platform is not yours to change: you’re locked in. So, again, choose wisely when switching platforms, and be very careful with the technical implementation of features that can create a lot of duplicate content.
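To put those numbers in proportion, the arithmetic fits in a few lines of Python. The function name and the idea of a simple “bloat ratio” are illustrative assumptions, not a Lumar metric; the inputs are the figures quoted above, and the 188,000 blocked URLs are deliberately left out.

```python
def crawl_bloat(products: int, content_pages: int, categories: int,
                crawled_pages: int) -> tuple[int, float]:
    """Return (expected unique pages, crawled pages per expected page).

    'Bloat ratio' is an illustrative name, not an official metric.
    """
    expected = products + content_pages + categories
    return expected, crawled_pages / expected


if __name__ == "__main__":
    expected, ratio = crawl_bloat(products=1_200, content_pages=50,
                                  categories=330, crawled_pages=27_000)
    print(f"Expected ~{expected:,} pages; crawl found {ratio:.1f}x that many")
```

With the figures from this site, the crawl returns roughly 17 times as many pages as the catalogue structure would suggest.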

Caption: Lumar report for the aforementioned site. Excessive search filters and mega menus generate huge volumes of URLs, which Google deems duplicate content and which dilute link juice from key pages.

How to use Lumar as part of an iterative website relaunch

If you have read this far, you might want to know how to measure and monitor continuous deployments. Lumar is one of the best tools to help you keep on top of this: its ability to compare different crawls (or states of your website), as well as to run crawls in a test environment, is extremely useful for controlling and evaluating ongoing work on the website.

So this is how you can use Lumar in your website redevelopment process:

1. Crawl your current live website

The first step is to crawl your live (current) website so you can identify what is broken and what needs to be fixed. This gives you a benchmark of where you are and what to improve.

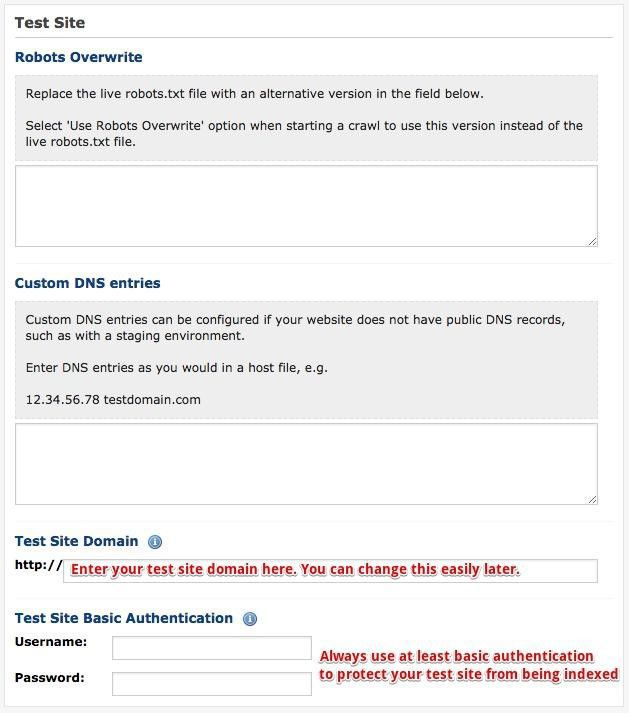

2. Set up and crawl your test environment for the current project

Now you can work on the “fix what’s broken” part and evaluate each fix in the test environment.

3. Set up and crawl your test environment for your new project

With Lumar, you can do this in the same project, so you can compare the crawl of the test environment with the crawl of the current live environment. The big upside is that you can compare test and live versions of both the current and the new project.

Once this is set up, you can crawl the test environments after each development cycle to identify errors. You can check if the crawlability of a given site is maintained or improved before pushing the changes to the live site.
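Lumar performs the crawl comparison itself. To illustrate the logic behind comparing a test crawl with a live crawl, here is a minimal Python sketch. It assumes each crawl has been exported as a mapping of URL to HTTP status code (a hypothetical format), and it compares paths rather than full URLs so that a staging host can be matched against the live host. The hostnames and function names are placeholders.

```python
from urllib.parse import urlsplit


def to_path(url: str) -> str:
    """Drop scheme and host so staging and live URLs can be matched."""
    parts = urlsplit(url)
    return parts.path + (f"?{parts.query}" if parts.query else "")


def compare_crawls(live: dict[str, int], test: dict[str, int]) -> dict[str, list[str]]:
    """Compare two crawls given as {url: http_status} mappings."""
    live_by_path = {to_path(u): s for u, s in live.items()}
    test_by_path = {to_path(u): s for u, s in test.items()}
    live_ok = {p for p, s in live_by_path.items() if s == 200}
    test_ok = {p for p, s in test_by_path.items() if s == 200}
    common = live_by_path.keys() & test_by_path.keys()
    return {
        # Returned 200 on live but missing or non-200 on test: likely regressions.
        "lost_in_test": sorted(live_ok - test_ok),
        # New 200 pages on test: check they are intended, not duplicates.
        "new_in_test": sorted(test_ok - live_ok),
        # Same path in both crawls, different status code.
        "status_changed": sorted(p for p in common if live_by_path[p] != test_by_path[p]),
    }


# Hypothetical crawl exports.
LIVE = {
    "https://www.example.com/": 200,
    "https://www.example.com/shoes": 200,
    "https://www.example.com/boots": 200,
}
TEST = {
    "https://staging.example.com/": 200,
    "https://staging.example.com/shoes": 301,
    "https://staging.example.com/sale": 200,
}

if __name__ == "__main__":
    print(compare_crawls(LIVE, TEST))
```

Run after each development cycle, a non-empty `lost_in_test` list is exactly the kind of signal to act on: something that works on the live site no longer does on test and should be fixed before the changes are pushed live.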

By following these steps, you’ll have complete control over the website relaunch or migration, which will reduce the risk of releasing an inferior version of your website, and allow you to successfully meet your objectives.

What challenges have you faced when working on a new site launch?

I’d be interested to hear about them.

Chris Dietrich is an online marketing consultant. He identifies and resolves technical issues that limit organic search traffic. You can reach him at https://chrisdietrich.net.

If you want to take Lumar for a test drive, you can sign up for a Lumar demo here.