Magento 2 is one of the most scalable and reliable CMS platforms available and is the choice of many of the largest eCommerce sites to grace the web. However, it’s also often one of the platforms most susceptible to uncontrolled crawling issues.

In this article, we dive into some of the most common crawling issues bloating your Magento 2 website and how to fix them.

1. Backend Magento 2 URLs

Magento 2 creates a backend reference URL for every category and product page. This in itself is not a problem, as these URLs are generally canonicalized to their “clean” equivalent and are normally kept out of the navigation that visiting customers see.

For example:

Reference URL – https://www.example.com/catalog/product/view/id/19092/s/duvet-storage-bag-king/

Clean equivalent – https://www.example.com/duvet-storage-bag-king.html

Why does this matter?

A few issues can crop up as a result of these backend URLs. Sometimes they surface on the frontend and become crawlable. This often occurs at scale when migrating onto Magento 2, but it can also happen on a smaller scale.

This means that on a large eCommerce site you may be creating many more pages for search engines to crawl, as well as being reliant on canonical signals to pass internal authority to pages you want to rank.
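As a rough illustration, backend reference URLs can be picked out of a crawl export by their path. The sketch below is based on the example URL shape shown above. The category variant (`/catalog/category/view/...`) and the function names are our assumptions, so adjust the pattern to what your own crawl actually shows.

```python
import re
from urllib.parse import urlparse

# Path shape taken from the example above: /catalog/product/view/id/<number>/...
# Assumption: category pages use /catalog/category/view/... in the same way.
BACKEND_PATH = re.compile(
    r"^/catalog/(?:product|category)/view/(?:[^/]+/)*id/\d+",
    re.IGNORECASE,
)


def is_backend_url(url: str) -> bool:
    """Return True if the URL path looks like a backend reference URL."""
    return BACKEND_PATH.match(urlparse(url).path) is not None


def find_backend_urls(crawled_urls):
    """Filter a list of crawled URLs down to backend reference URLs."""
    return [url for url in crawled_urls if is_backend_url(url)]
```

Running `find_backend_urls` over the list of internally linked URLs from a crawl gives a quick count of how many backend URLs are exposed on the frontend.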

How to fix it

To fix these URL errors you need to refresh the setup so Magento 2 reprocesses the URLs and uses the correct clean version, instead of the backend reference URLs.

This can be done individually by changing a page’s URL and then re-saving the page, or in bulk by editing and re-saving the categories the pages are assigned to. Either way, Magento 2 refreshes the URLs and rewrites them as their clean versions.

2. Filters introducing thousands of crawlable URLs

Filters and facets can be a problem on websites regardless of CMS, but they seem to be a particularly common one on Magento 2 eCommerce sites. Perhaps this is due to the flexibility of options available on Magento 2 compared to the more limited options offered by Shopify and other platforms.

Why does this matter?

Due to the huge number of potential parameter variations facets can add – with each one considered as a separate URL by search engines – facets can add thousands upon thousands of crawlable URLs even on a relatively small eCommerce site.

Although search engines are getting better at understanding parameters and filtering out what isn’t useful, this still relies on the search engine making the right decision. It also still allows search engines to crawl a huge number of URLs with little to no value.

In essence, we’re giving search engines a lot of unnecessary decisions to make and URLs to crawl.

How to fix it

Luckily, in Magento 2 it’s very easy to edit the robots.txt file, which allows us to stop search engines from crawling non-valuable parameter pages resulting from filters.

Just head to Content > Design > Configuration > Edit top global store > Search engine robots and disallow the correct URL strings, or follow our guide on how to edit robots.txt in Magento 2.
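Which URL strings are “correct” depends on the filters your store exposes. As a minimal sketch, the snippet below builds Disallow lines for a list of filter parameters and checks sample URLs against them before anything is pasted into the robots.txt field. The parameter names (`color`, `size`, `price`) are hypothetical, and the matcher is our own simplified take on the `*` and `$` wildcard syntax supported by the major search engines, not an official parser.

```python
import re
from urllib.parse import urlsplit

# Hypothetical filter parameters; replace with the ones your facets generate.
FILTER_PARAMS = ["color", "size", "price"]


def build_disallow_rules(params):
    """Build two Disallow lines per parameter: first and later position."""
    rules = []
    for param in params:
        rules.append(f"Disallow: /*?{param}=")
        rules.append(f"Disallow: /*&{param}=")
    return rules


def rule_to_regex(rule):
    """Translate one Disallow line into a regex ('*' wildcard, '$' end anchor)."""
    pattern = rule.split(":", 1)[1].strip()
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def is_blocked(url, rules):
    """Check whether the path and query of a URL match any Disallow rule."""
    parts = urlsplit(url)
    target = parts.path + ("?" + parts.query if parts.query else "")
    return any(rule_to_regex(rule).match(target) for rule in rules)
```

Testing a handful of category, product and pagination URLs with `is_blocked` first helps avoid accidentally disallowing pages you do want crawled.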

3. No redirect rules in .htaccess

Magento 2 sites also use .htaccess rules to control which URLs resolve. These typically cover www vs non-www and trailing-slash vs non-trailing-slash URLs.

Why does this matter?

If you don’t have redirect rules in place, you may be opening up additional versions of your site to be crawled (for example, the entire non-www version of your site). This can result in a massive number of URLs being crawled, not to mention the potential issues if the indexability of these pages is not properly managed.

How to fix it

To ensure that requests are always redirected to the “base URL”, make sure that auto-redirect is enabled in the following settings:

Stores > Configuration > General > Web > Url Options > Auto-redirect to Base URL > Yes (301 Moved Permanently)

For any other redirect rules we want to implement, Magento 2 devs are our saviors.

These should be a relatively easy fix: rules are added to the .htaccess file so that any incorrect version of a URL 301 redirects to the equivalent page we want to rank.
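Before briefing developers, it helps to write down which URL each variant should end up on. The sketch below is not an .htaccess rule. It is a small helper that computes the expected redirect target under an assumed policy of HTTPS, the www host and no trailing slash. The host name and the policy are placeholders, so swap in your own.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumed preferred version of the site: https, www, no trailing slash.
PREFERRED_HOST = "www.example.com"


def expected_target(url: str) -> str:
    """Return the URL that the given variant should 301 redirect to."""
    parts = urlsplit(url)
    path = parts.path
    if len(path) > 1 and path.endswith("/"):
        path = path.rstrip("/")  # drop trailing slash(es) on non-root paths
    if not path:
        path = "/"  # keep the homepage as a bare slash
    return urlunsplit(("https", PREFERRED_HOST, path, parts.query, ""))


def needs_redirect(url: str) -> bool:
    """True when the URL is not already the preferred version."""
    return expected_target(url) != url
```

Comparing `expected_target` against the final URL reported by your crawler for each variant shows which redirect rules are still missing.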

4. Product URLs existing in multiple subfolders

Like other eCommerce CMSs, Magento 2 allows products to exist in multiple subfolders. This means that a product assigned to several categories gets a separate URL for each one.

Why does this matter?

Since generally only one URL can rank for any given keyword, this creates several URLs for search engines to crawl when only a single product URL can rank. It means fewer internal links point to the product URL we want to rank (relying instead on canonicals to pass the value), and a large number of non-indexable URLs bloat the site.

For example:

Simple product URL – https://www.example.com/product-1

Category path product URL – https://www.example.com/category-1/subcategory-1/product-1; https://www.example.com/category-1/subcategory-2/product-1
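To gauge how widespread this is on a given site, product URLs from a crawl can be grouped by their final path segment. This is a minimal sketch that assumes the input list contains product URLs only and that the last segment (the URL key) identifies the product. The function name is ours.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def group_by_product_key(product_urls):
    """Map each URL key to its URLs, keeping only keys with several URLs."""
    groups = defaultdict(set)
    for url in product_urls:
        segments = [seg for seg in urlsplit(url).path.split("/") if seg]
        if segments:
            groups[segments[-1]].add(url)  # last segment = assumed URL key
    return {key: sorted(urls) for key, urls in groups.items() if len(urls) > 1}
```

Applied to the three example URLs above, the result has a single key, `product-1`, listing all three URLs.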

How to fix it

This is an easy fix in the Magento 2 backend; category path URLs can be disabled under the following settings.

Stores > Settings > Configuration > Catalog > Search Engine Optimization > Use Categories Path for Product URLs > No

N.B. The argument can be made that subfolders for product URLs can be beneficial as it allows relevant keywords to be included in URLs. However, generally, we find this benefit to be negligible when compared with the large number of non-indexable URLs it opens up to be crawled and the additional work it places upon search engines to identify and rank the appropriate product URL.

5. Orphan Pages

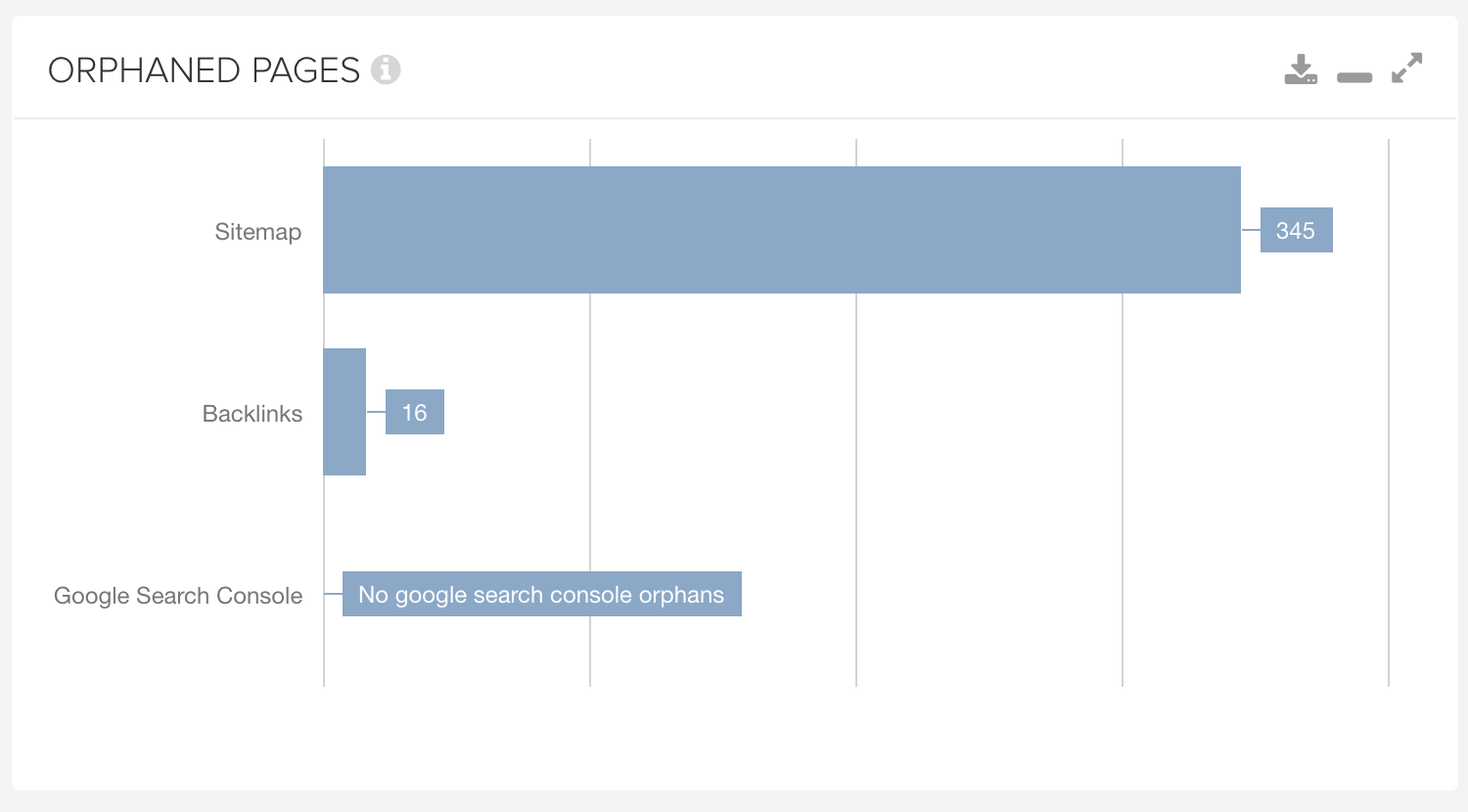

Another common issue we encounter on Magento 2 sites is a large number of orphan pages (pages not linked to internally on the site). This may be due to the relatively large size of Magento 2 websites in general, but either way it makes these pages harder to crawl.

Why does this matter?

If pages are not internally linked to, they’re far less likely to be crawled by search engines, as search engine crawlers work by following internal links on a site. This is not to say they will never be crawled, only that the odds are much lower.

How to fix it

The first step in fixing orphan URLs is to identify them. This can be done by crawling XML sitemaps, or by using Google Analytics and Google Search Console data, to find URLs that are referenced in those sources but not internally linked to. These are then reported in Lumar’s Orphaned Pages report.
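The underlying idea is a simple set difference: URLs known from a source such as the XML sitemap, minus URLs found by following internal links. The sketch below shows that comparison with Python’s standard library. It is our own illustration of the logic, not how Lumar’s report is implemented, and the function names are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> set:
    """Extract every <loc> value from a sitemap document."""
    root = ET.fromstring(xml_text)
    return {
        loc.text.strip()
        for loc in root.findall(".//sm:loc", SITEMAP_NS)
        if loc.text
    }


def find_orphans(known_urls, internally_linked_urls) -> list:
    """Return URLs that are known about but never linked to internally."""
    return sorted(set(known_urls) - set(internally_linked_urls))
```

The same `find_orphans` call works with URL lists exported from Google Analytics or Google Search Console in place of the sitemap.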

Once identified, you can decide which course of action you’d like to take with the orphaned pages. It may be that you no longer need them, in which case they can be redirected to a relevant page. However, if they’re important and you would like them to be in the best position possible to rank for targeted keywords, you will want to internally link to them on the site.

For Magento 2 sites with plenty of URLs, we often find that HTML sitemaps for category pages are beneficial for ensuring that all main pages are internally linked. These can either be designed manually or implemented via a Magento 2 extension that offers this as a feature, such as those from Amasty or Mageworx.

How to identify crawling issues

As with most crawling issues, the best way to understand what your site is facing is to crawl it yourself using a technical SEO tool like Lumar.

For very large sites, which Magento 2 sites can tend to be, it may be useful to crawl a section of the site to limit the number of URLs. If your site has open facets that create hundreds upon hundreds of crawlable parameter pages – this can be a shrewd move. This can be done by using the Include function of Lumar to ensure only URLs that include a particular subfolder, or URL pattern, are crawled to give a sample of URLs to audit.

Alternatively, if you do want to identify crawling issues across your entire site, Lumar can do that too!